# Installing the kind cluster

install.sh


root@master01:~# cat install.sh
#!/bin/bash
date
set -v

# create registry container unless it already exists
reg_name='kind-registry'
reg_port='5001'
if [ "$(docker inspect -f '{{.State.Running}}' "${reg_name}" 2>/dev/null || true)" != 'true' ]; then
  docker run \
    -d --restart=always -p "127.0.0.1:${reg_port}:5000" --name "${reg_name}" \
    registry:2
fi

# 1.prep noCNI env
cat <<EOF | kind create cluster --name=clab-calico-ipip-crosssubnet --image=kindest/node:v1.23.4 --config=-
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
containerdConfigPatches:
- |-
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."localhost:${reg_port}"]
    endpoint = ["http://${reg_name}:5000"]
networking:
  # kind ships its own default CNI (kindnet); disable it because we install the CNI (Calico) ourselves
  disableDefaultCNI: true
  # Pod subnet used by the nodes
  podSubnet: "10.244.0.0/16"
nodes:
- role: control-plane
  kubeadmConfigPatches:
  - |
    kind: InitConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-ip: 10.1.5.10
        node-labels: "rack=rack0"
- role: worker
  kubeadmConfigPatches:
  - |
    kind: JoinConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-ip: 10.1.5.11
        node-labels: "rack=rack0"
- role: worker
  kubeadmConfigPatches:
  - |
    kind: JoinConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-ip: 10.1.8.10
        node-labels: "rack=rack1"
- role: worker
  kubeadmConfigPatches:
  - |
    kind: JoinConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-ip: 10.1.8.11
        node-labels: "rack=rack1"
EOF

# connect the registry to the cluster network if not already connected
if [ "$(docker inspect -f='{{json .NetworkSettings.Networks.kind}}' "${reg_name}")" = 'null' ]; then
  docker network connect "kind" "${reg_name}"
fi

# Document the local registry
# https://github.com/kubernetes/enhancements/tree/master/keps/sig-cluster-lifecycle/generic/1755-communicating-a-local-registry
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ConfigMap
metadata:
  name: local-registry-hosting
  namespace: kube-public
data:
  localRegistryHosting.v1: |
    host: "localhost:${reg_port}"
    help: "https://kind.sigs.k8s.io/docs/user/local-registry/"
EOF

# 2.remove taints
kubectl taint nodes $(kubectl get nodes -o name | grep control-plane) node-role.kubernetes.io/master:NoSchedule-
kubectl get nodes -o wide

# 3.install necessary tools
# cd /usr/bin/
# curl -o calicoctl -O -L "https://gh.api.99988866.xyz/https://github.com/projectcalico/calico/releases/download/v3.23.2/calicoctl-linux-amd64"
# chmod +x calicoctl
# Copy calicoctl and ping into every kind node container and install debugging tools
for i in $(docker ps -a --format "table {{.Names}}" | grep calico)
do
  echo "$i"
  docker cp /usr/bin/calicoctl "$i":/usr/bin/calicoctl
  docker cp /usr/bin/ping "$i":/usr/bin/ping
  # The node image is based on an end-of-life Ubuntu release, so point apt at old-releases
  docker exec -it "$i" bash -c "sed -i -e 's/jp.archive.ubuntu.com\|archive.ubuntu.com\|security.ubuntu.com/old-releases.ubuntu.com/g' /etc/apt/sources.list"
  docker exec -it "$i" bash -c "apt-get -y update >/dev/null && apt-get -y install net-tools tcpdump lrzsz bridge-utils >/dev/null 2>&1"
done

Run the script to install kind:

root@master01:~# ./install.sh

Thu Jan 23 05:33:22 UTC 2025

# create registry container unless it already exists

reg_name='kind-registry'

reg_port='5001'

if [ "$(docker inspect -f '{{.State.Running}}' "${reg_name}" 2>/dev/null || true)" != 'true' ]; then

docker run \

-d --restart=always -p "127.0.0.1:${reg_port}:5000" --name "${reg_name}" \

registry:2

fi

# 1.prep noCNI env

cat <<EOF | kind create cluster --name=clab-calico-ipip-crosssubnet --image=kindest/node:v1.23.4 --config=-

kind: Cluster

apiVersion: kind.x-k8s.io/v1alpha4

containerdConfigPatches:

- |-

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."localhost:${reg_port}"]

endpoint = ["http://${reg_name}:5000"]

networking:

# kind ships its own default CNI (kindnet); disable it because we install the CNI (Calico) ourselves

disableDefaultCNI: true

# Pod subnet used by the nodes

podSubnet: "10.244.0.0/16"

nodes:

- role: control-plane

kubeadmConfigPatches:

- |

kind: InitConfiguration

nodeRegistration:

kubeletExtraArgs:

node-ip: 10.1.5.10

node-labels: "rack=rack0"

- role: worker

kubeadmConfigPatches:

- |

kind: JoinConfiguration

nodeRegistration:

kubeletExtraArgs:

node-ip: 10.1.5.11

node-labels: "rack=rack0"

- role: worker

kubeadmConfigPatches:

- |

kind: JoinConfiguration

nodeRegistration:

kubeletExtraArgs:

node-ip: 10.1.8.10

node-labels: "rack=rack1"

- role: worker

kubeadmConfigPatches:

- |

kind: JoinConfiguration

nodeRegistration:

kubeletExtraArgs:

node-ip: 10.1.8.11

node-labels: "rack=rack1"

EOF

Creating cluster "clab-calico-ipip-crosssubnet" ...

✓ Ensuring node image (kindest/node:v1.23.4) 🖼

✓ Preparing nodes 📦 📦 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-clab-calico-ipip-crosssubnet"

You can now use your cluster with:

kubectl cluster-info --context kind-clab-calico-ipip-crosssubnet

Not sure what to do next? 😅 Check out https://kind.sigs.k8s.io/docs/user/quick-start/

# connect the registry to the cluster network if not already connected

if [ "$(docker inspect -f='{{json .NetworkSettings.Networks.kind}}' "${reg_name}")" = 'null' ]; then

docker network connect "kind" "${reg_name}"

fi

# Document the local registry

# https://github.com/kubernetes/enhancements/tree/master/keps/sig-cluster-lifecycle/generic/1755-communicating-a-local-registry

cat <<EOF | kubectl apply -f -

apiVersion: v1

kind: ConfigMap

metadata:

name: local-registry-hosting

namespace: kube-public

data:

localRegistryHosting.v1: |

host: "localhost:${reg_port}"

help: "https://kind.sigs.k8s.io/docs/user/local-registry/"

EOF

configmap/local-registry-hosting created

# 2.remove taints

kubectl taint nodes $(kubectl get nodes -o name | grep control-plane) node-role.kubernetes.io/master:NoSchedule-

node/clab-calico-ipip-crosssubnet-control-plane untainted

kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

clab-calico-ipip-crosssubnet-control-plane NotReady control-plane,master 25s v1.23.4 <none> <none> Ubuntu 21.10 6.8.0-51-generic containerd://1.5.10

clab-calico-ipip-crosssubnet-worker NotReady <none> 6s v1.23.4 <none> <none> Ubuntu 21.10 6.8.0-51-generic containerd://1.5.10

clab-calico-ipip-crosssubnet-worker2 NotReady <none> 6s v1.23.4 <none> <none> Ubuntu 21.10 6.8.0-51-generic containerd://1.5.10

clab-calico-ipip-crosssubnet-worker3 NotReady <none> 6s v1.23.4 <none> <none> Ubuntu 21.10 6.8.0-51-generic containerd://1.5.10

# 3.install necessary tools

# cd /usr/bin/

# curl -o calicoctl -O -L "https://gh.api.99988866.xyz/https://github.com/projectcalico/calico/releases/download/v3.23.2/calicoctl-linux-amd64"

# chmod +x calicoctl

for i in $(docker ps -a --format "table {{.Names}}" | grep calico)

do

echo $i

docker cp /usr/bin/calicoctl $i:/usr/bin/calicoctl

docker cp /usr/bin/ping $i:/usr/bin/ping

docker exec -it $i bash -c "sed -i -e 's/jp.archive.ubuntu.com\|archive.ubuntu.com\|security.ubuntu.com/old-releases.ubuntu.com/g' /etc/apt/sources.list"

docker exec -it $i bash -c "apt-get -y update >/dev/null && apt-get -y install net-tools tcpdump lrzsz bridge-utils >/dev/null 2>&1"

done

clab-calico-ipip-crosssubnet-control-plane

Successfully copied 59.3MB to clab-calico-ipip-crosssubnet-control-plane:/usr/bin/calicoctl

Successfully copied 92.7kB to clab-calico-ipip-crosssubnet-control-plane:/usr/bin/ping

clab-calico-ipip-crosssubnet-worker2

Successfully copied 59.3MB to clab-calico-ipip-crosssubnet-worker2:/usr/bin/calicoctl

Successfully copied 92.7kB to clab-calico-ipip-crosssubnet-worker2:/usr/bin/ping

clab-calico-ipip-crosssubnet-worker3

Successfully copied 59.3MB to clab-calico-ipip-crosssubnet-worker3:/usr/bin/calicoctl

Successfully copied 92.7kB to clab-calico-ipip-crosssubnet-worker3:/usr/bin/ping

clab-calico-ipip-crosssubnet-worker

Successfully copied 59.3MB to clab-calico-ipip-crosssubnet-worker:/usr/bin/calicoctl

Successfully copied 92.7kB to clab-calico-ipip-crosssubnet-worker:/usr/bin/ping

root@master01:~#


To clean up, delete the kind cluster with the following command:

# the value after --name is the cluster name

kind delete cluster --name=clab-calico-ipip-crosssubnet

Check the result after installation:

root@master01:~# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

clab-calico-ipip-crosssubnet-control-plane NotReady control-plane,master 2m55s v1.23.4 <none> <none> Ubuntu 21.10 6.8.0-51-generic containerd://1.5.10

clab-calico-ipip-crosssubnet-worker NotReady <none> 2m36s v1.23.4 <none> <none> Ubuntu 21.10 6.8.0-51-generic containerd://1.5.10

clab-calico-ipip-crosssubnet-worker2 NotReady <none> 2m36s v1.23.4 <none> <none> Ubuntu 21.10 6.8.0-51-generic containerd://1.5.10

clab-calico-ipip-crosssubnet-worker3 NotReady <none> 2m36s v1.23.4 <none> <none> Ubuntu 21.10 6.8.0-51-generic containerd://1.5.10


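Creating the container environment with containerlab

Containerlab is an open-source tool for creating and managing network labs in a containerized environment. It provides a command-line interface (CLI) for orchestrating and managing container-based network labs. Its main features are:

- Lab as Code: a lab is defined declaratively in a topology file (clab file).
- Network operating systems (NOS): it focuses on containerized NOSes such as Nokia SR Linux, Arista cEOS, and Cisco XRd.
- VM node support: through its integration with vrnetlab, virtualized and containerized nodes can run side by side.
- Multiple topologies: it supports building complex network topologies such as Super Spine, Spine-Leaf, and CLOS.

The topology used here attaches its nodes to two Linux bridges, br-pool0 and br-pool1, which are created on the host first: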

root@kind:~# brctl addbr br-pool0

root@kind:~# ifconfig br-pool0 up

root@kind:~# brctl addbr br-pool1

root@kind:~# ifconfig br-pool1 up

root@kind:~# ip a l

13: br-pool0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default qlen 1000

link/ether aa:c1:ab:7c:2a:ea brd ff:ff:ff:ff:ff:ff

inet6 fe80::6cd2:4bff:feed:9203/64 scope link

valid_lft forever preferred_lft forever

14: br-pool1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default qlen 1000

link/ether aa:c1:ab:63:29:9e brd ff:ff:ff:ff:ff:ff

inet6 fe80::4b0:daff:fe68:be88/64 scope link

valid_lft forever preferred_lft forever


containerlab describes the network environment in YAML. Next, build the virtual network.

clab network topology file:

root@master01:~/calico-clab# cat calico.ipip.crosssubnet.clab.yml
# calico.ipip.crosssubnet.clab.yml
name: calico-ipip-crosssubnet
topology:
  nodes:
    gw0:
      kind: linux
      image: 10.7.20.12:5000/vyos/vyos:latest
      cmd: /sbin/init
      binds:
        - /lib/modules:/lib/modules
        - ./startup-conf/gw0-boot.cfg:/opt/vyatta/etc/config/config.boot

    br-pool0:
      kind: bridge
    br-pool1:
      kind: bridge

    server1:
      kind: linux
      image: 10.7.20.12:5000/aeciopires/nettools:latest
      # Reuse the kind node's network by sharing its network namespace
      network-mode: container:clab-calico-ipip-crosssubnet-control-plane
      # Configure the node's data NIC (net0) and make it the egress interface by replacing the default route
      exec:
        - ip addr add 10.1.5.10/24 dev net0
        - ip route replace default via 10.1.5.1

    server2:
      kind: linux
      image: 10.7.20.12:5000/aeciopires/nettools:latest
      # Reuse the kind node's network by sharing its network namespace
      network-mode: container:clab-calico-ipip-crosssubnet-worker
      # Configure the node's data NIC (net0) and make it the egress interface by replacing the default route
      exec:
        - ip addr add 10.1.5.11/24 dev net0
        - ip route replace default via 10.1.5.1

    server3:
      kind: linux
      image: 10.7.20.12:5000/aeciopires/nettools:latest
      # Reuse the kind node's network by sharing its network namespace
      network-mode: container:clab-calico-ipip-crosssubnet-worker2
      # Configure the node's data NIC (net0) and make it the egress interface by replacing the default route
      exec:
        - ip addr add 10.1.8.10/24 dev net0
        - ip route replace default via 10.1.8.1

    server4:
      kind: linux
      image: 10.7.20.12:5000/aeciopires/nettools:latest
      # Reuse the kind node's network by sharing its network namespace
      network-mode: container:clab-calico-ipip-crosssubnet-worker3
      # Configure the node's data NIC (net0) and make it the egress interface by replacing the default route
      exec:
        - ip addr add 10.1.8.11/24 dev net0
        - ip route replace default via 10.1.8.1

  links:
    - endpoints: ["br-pool0:br-pool0-net0", "server1:net0"]
    - endpoints: ["br-pool0:br-pool0-net1", "server2:net0"]
    - endpoints: ["br-pool1:br-pool1-net0", "server3:net0"]
    - endpoints: ["br-pool1:br-pool1-net1", "server4:net0"]
    - endpoints: ["gw0:eth1", "br-pool0:br-pool0-net2"]
    - endpoints: ["gw0:eth2", "br-pool1:br-pool1-net2"]

A gw0-boot.cfg configuration file is also needed to configure the gateway's network:

# ./startup-conf/gw0-boot.cfg

interfaces {

ethernet eth1 {

address 10.1.5.1/24

duplex auto

smp-affinity auto

speed auto

}

ethernet eth2 {

address 10.1.8.1/24

duplex auto

smp-affinity auto

speed auto

}

loopback lo {

}

}

# Configure NAT so that the other servers behind gw0 can reach external networks

nat {

source {

rule 100 {

outbound-interface eth0

source {

address 10.1.0.0/16

}

translation {

address masquerade

}

}

}

}

system {

config-management {

commit-revisions 100

}

console {

device ttyS0 {

speed 9600

}

}

host-name gw0

login {

user vyos {

authentication {

encrypted-password $6$QxPS.uk6mfo$9QBSo8u1FkH16gMyAVhus6fU3LOzvLR9Z9.82m3tiHFAxTtIkhaZSWssSgzt4v4dGAL8rhVQxTg0oAG9/q11h/

plaintext-password ""

}

level admin

}

}

ntp {

server 0.pool.ntp.org {

}

server 1.pool.ntp.org {

}

server 2.pool.ntp.org {

}

}

syslog {

global {

facility all {

level info

}

facility protocols {

level debug

}

}

}

time-zone UTC

}

/* Warning: Do not remove the following line. */

/* === vyatta-config-version: "qos@1:dhcp-server@5:webgui@1:pppoe-server@2:webproxy@2:firewall@5:pptp@1:dns-forwarding@1:mdns@1:quagga@7:webproxy@1:snmp@1:system@10:conntrack@1:l2tp@1:broadcast-relay@1:dhcp-relay@2:conntrack-sync@1:vrrp@2:ipsec@5:ntp@1:config-management@1:wanloadbalance@3:ssh@1:nat@4:zone-policy@1:cluster@1" === */

/* Release version: 1.2.8 */


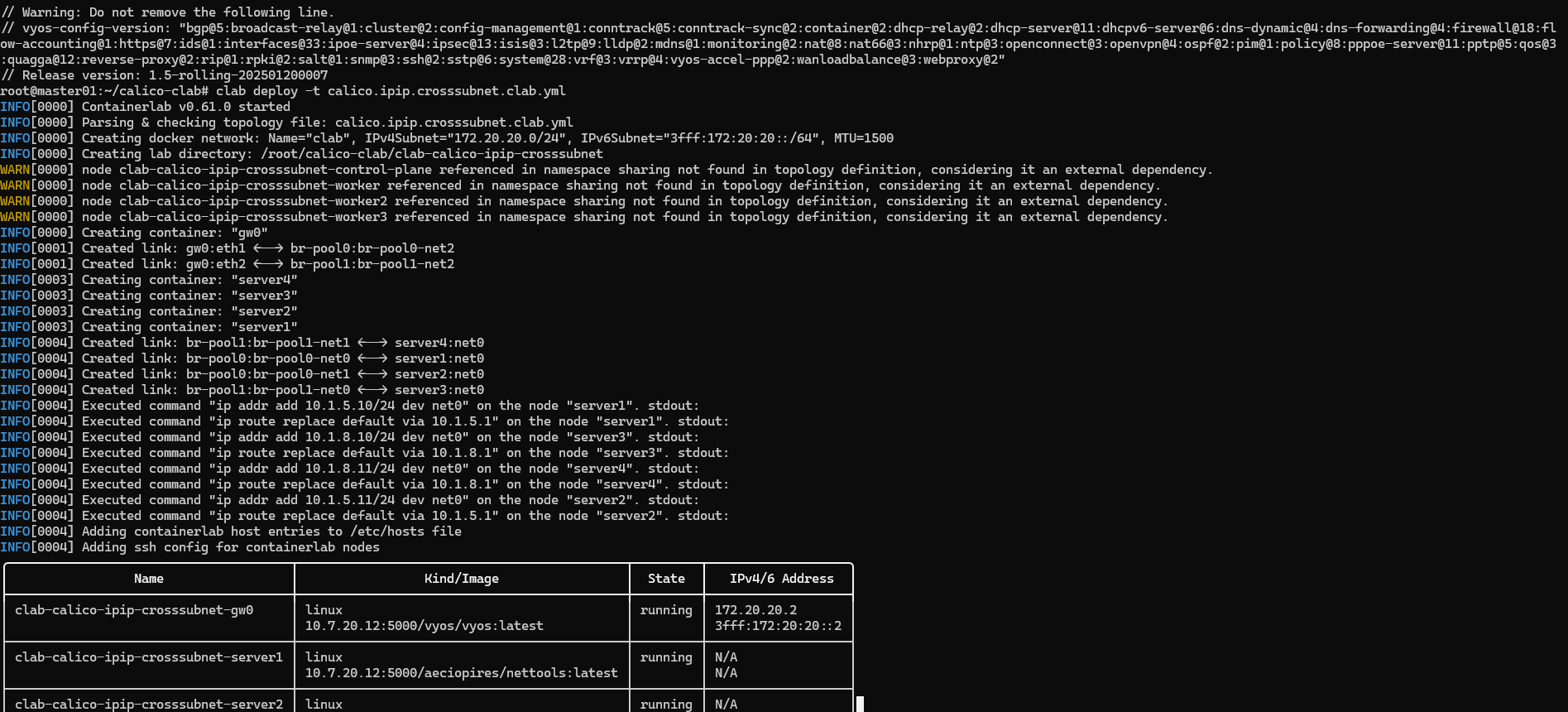

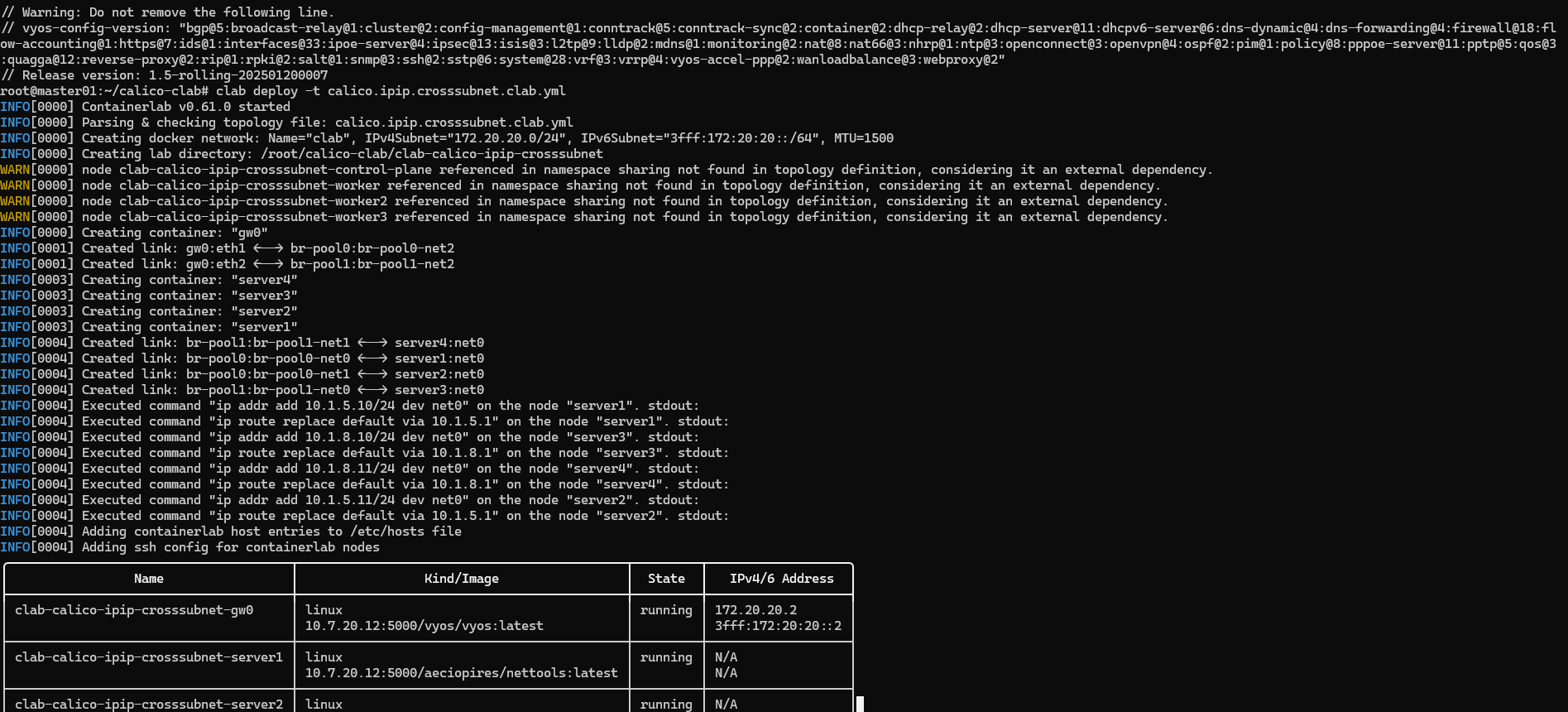

Deploy the lab:

# tree -L 2 ./

./

├── calico.ipip.crosssubnet.clab.yml

└── startup-conf

└── gw0-boot.cfg

# clab deploy -t calico.ipip.crosssubnet.clab.yml

Deploy command:

clab deploy -t calico.ipip.crosssubnet.clab.yml

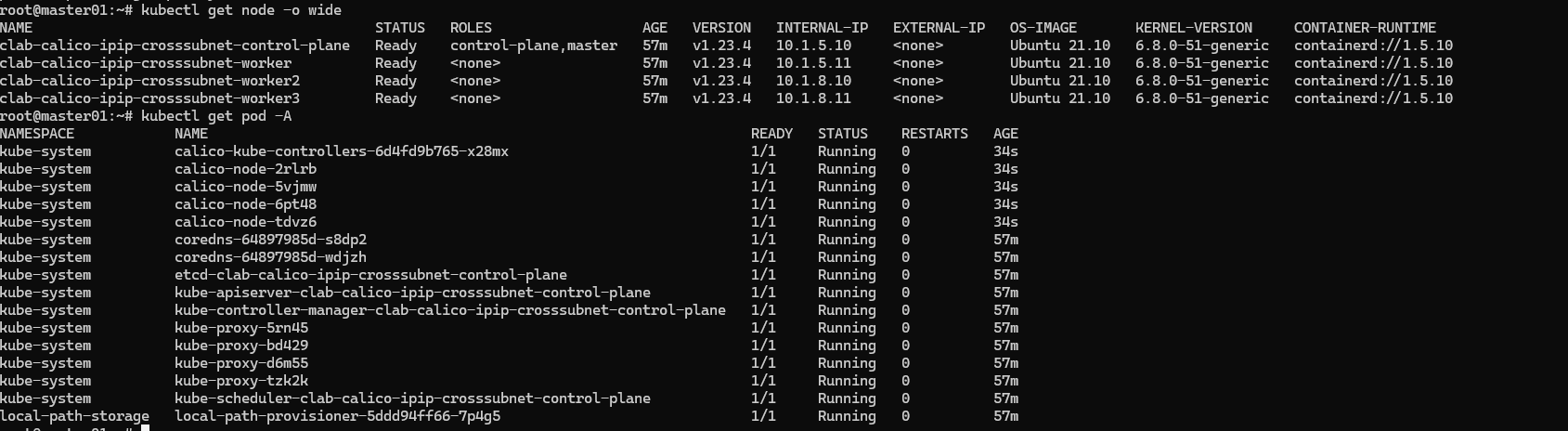

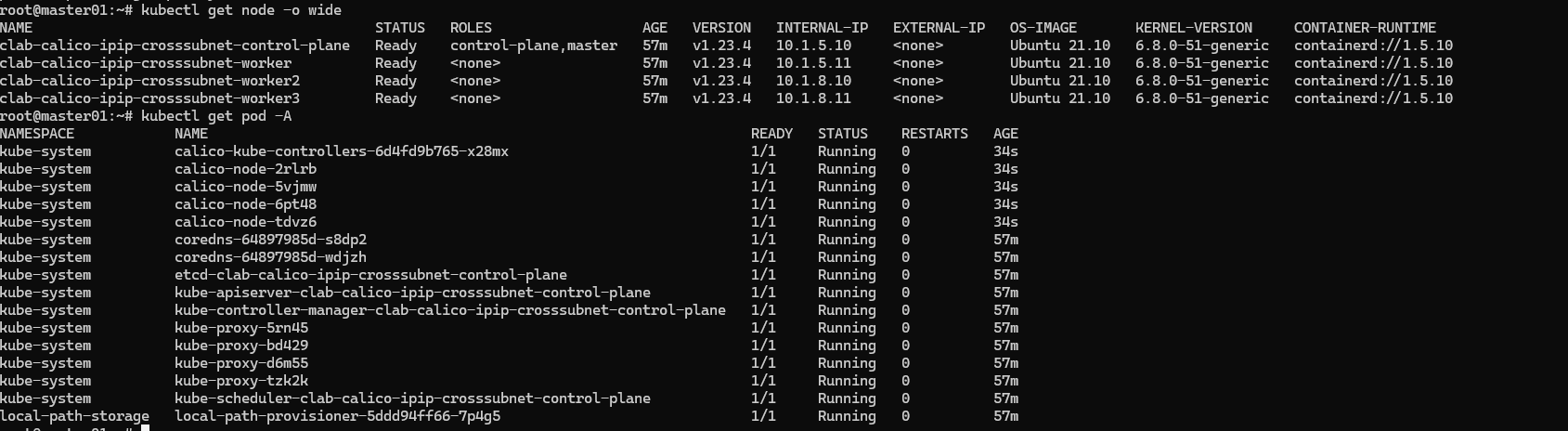

Next, check the cluster status:

root@master01:~/calico-clab# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

clab-calico-ipip-crosssubnet-control-plane NotReady control-plane,master 32m v1.23.4 10.1.5.10 <none> Ubuntu 21.10 6.8.0-51-generic containerd://1.5.10

clab-calico-ipip-crosssubnet-worker NotReady <none> 32m v1.23.4 10.1.5.11 <none> Ubuntu 21.10 6.8.0-51-generic containerd://1.5.10

clab-calico-ipip-crosssubnet-worker2 NotReady <none> 32m v1.23.4 10.1.8.10 <none> Ubuntu 21.10 6.8.0-51-generic containerd://1.5.10

clab-calico-ipip-crosssubnet-worker3 NotReady <none> 32m v1.23.4 10.1.8.11 <none> Ubuntu 21.10 6.8.0-51-generic containerd://1.5.10

root@master01:~/calico-clab# docker exec -it clab-calico-ipip-crosssubnet-control-plane ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

342: eth0@if343: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.18.0.3/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fc00:f853:ccd:e793::3/64 scope global nodad

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe12:3/64 scope link

valid_lft forever preferred_lft forever

349: net0@if350: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default

link/ether aa:c1:ab:bb:d9:d0 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.1.5.10/24 scope global net0

valid_lft forever preferred_lft forever

inet6 fe80::a8c1:abff:febb:d9d0/64 scope link

valid_lft forever preferred_lft forever

root@master01:~/calico-clab# docker exec -it clab-calico-ipip-crosssubnet-control-plane ip r s

default via 10.1.5.1 dev net0

10.1.5.0/24 dev net0 proto kernel scope link src 10.1.5.10

172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.3

The routing table now contains a route for 10.1.5.0/24 on net0, and the default route points to 10.1.5.1.

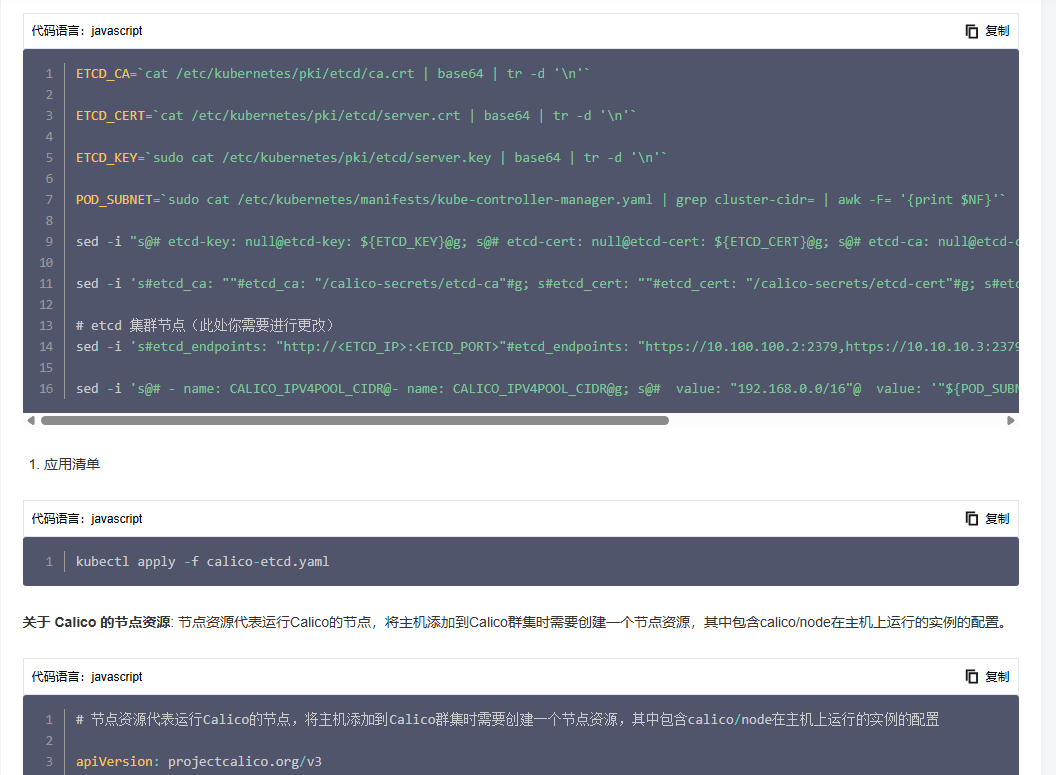

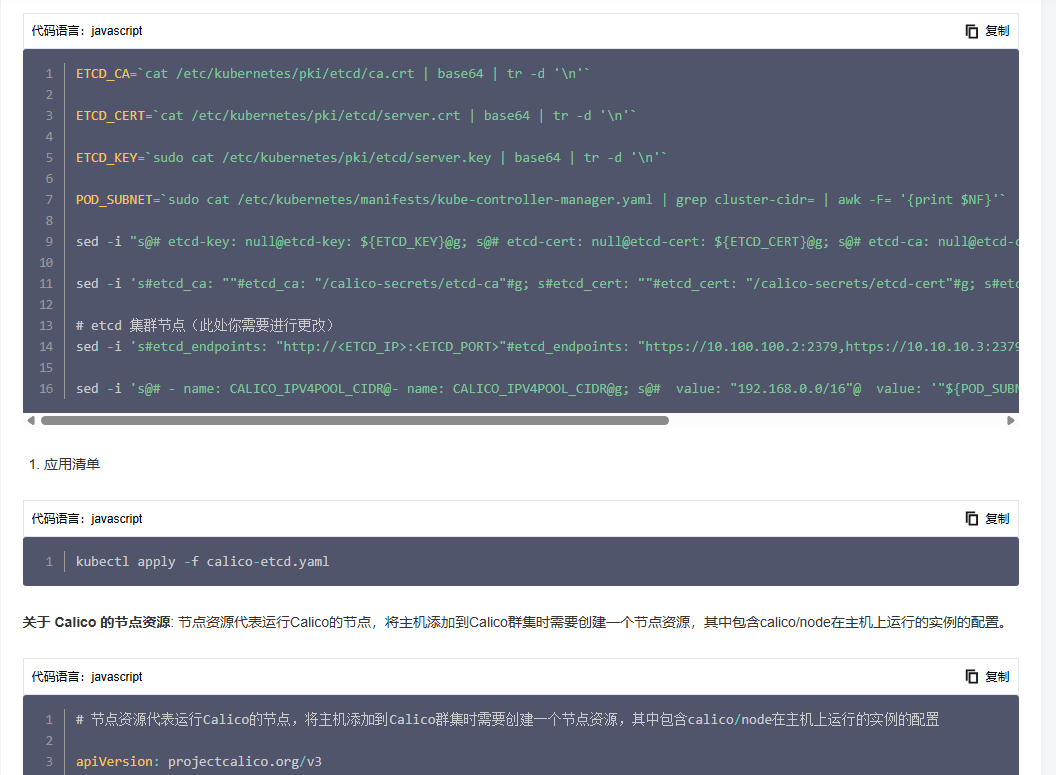

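Installing Calico

Explanation of the calico.yaml parameter

- name: CALICO_IPV4POOL_IPIP

- Meaning: enables or disables IPIP (IP-in-IP) tunneling.

value: "CrossSubnet": the IPIP tunnel is used only for traffic that crosses subnet boundaries; traffic between nodes in the same subnet does not use the IPIP tunnel.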
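To make the CrossSubnet rule concrete, here is a minimal shell sketch, assuming every node uses a /24 prefix as in this lab. The function names ip_to_int and encap_mode are hypothetical helpers for illustration only; they are not part of Calico, which makes this decision internally from each node's configured address and subnet.

```shell
#!/usr/bin/env bash

# Convert a dotted-quad IPv4 address into a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "direct" when both node addresses share a subnet of the given
# prefix length, and "ipip" when they are in different subnets.
encap_mode() {
  local prefix=$3
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  if (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$2") & mask) )); then
    echo direct
  else
    echo ipip
  fi
}

encap_mode 10.1.5.10 10.1.5.11 24   # control-plane -> worker:  prints "direct"
encap_mode 10.1.5.10 10.1.8.10 24   # control-plane -> worker2: prints "ipip"
```

With the node addresses of this lab, traffic between the two rack0 nodes stays unencapsulated, while traffic between rack0 and rack1 would go through the tunnel.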

Install Calico:

root@master01:~# kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

poddisruptionbudget.policy/calico-kube-controllers created

Check the cluster status:

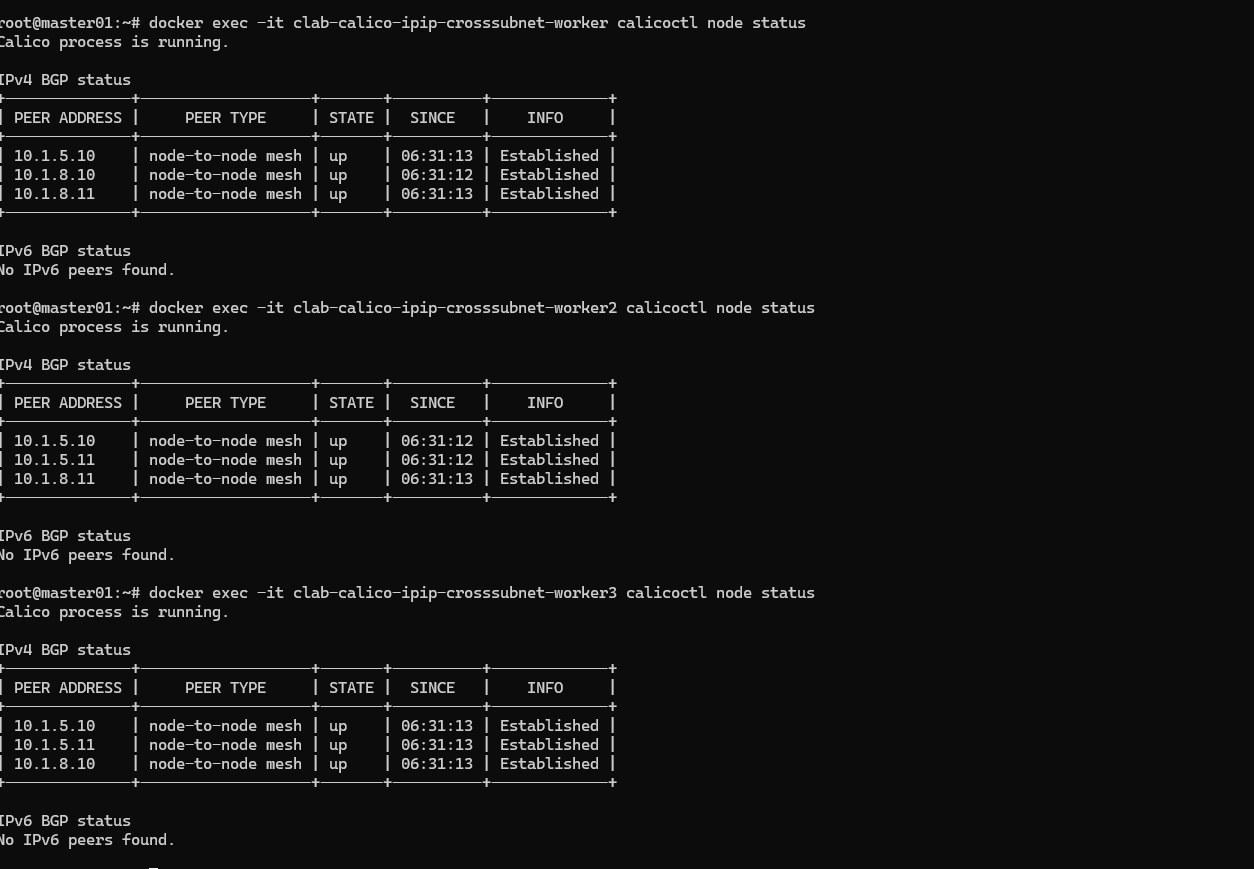

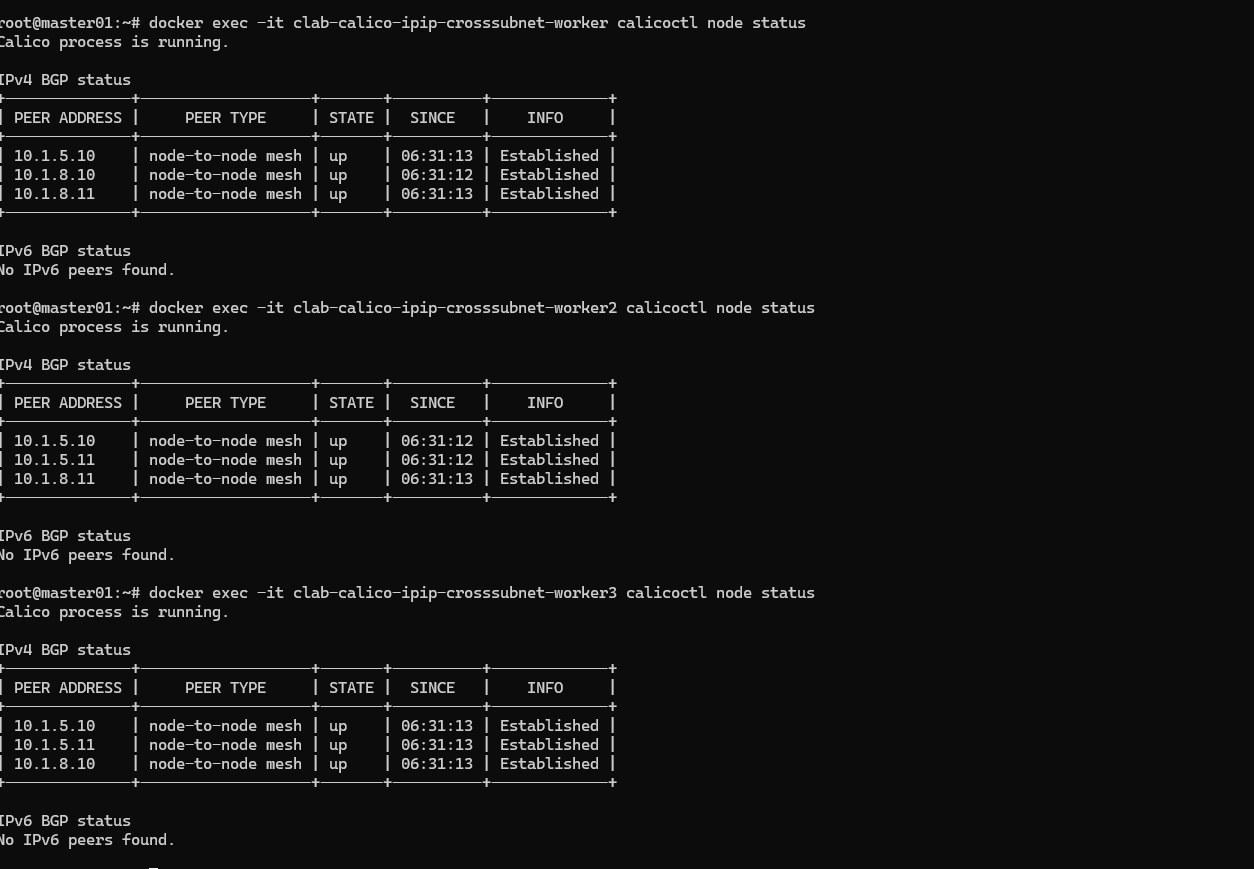

Check the Calico node status:

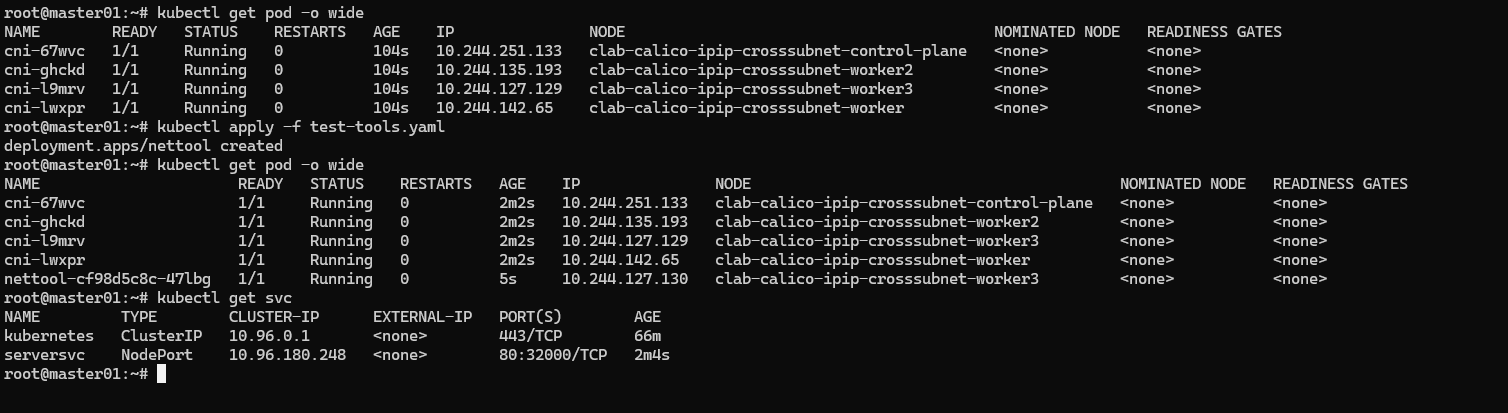

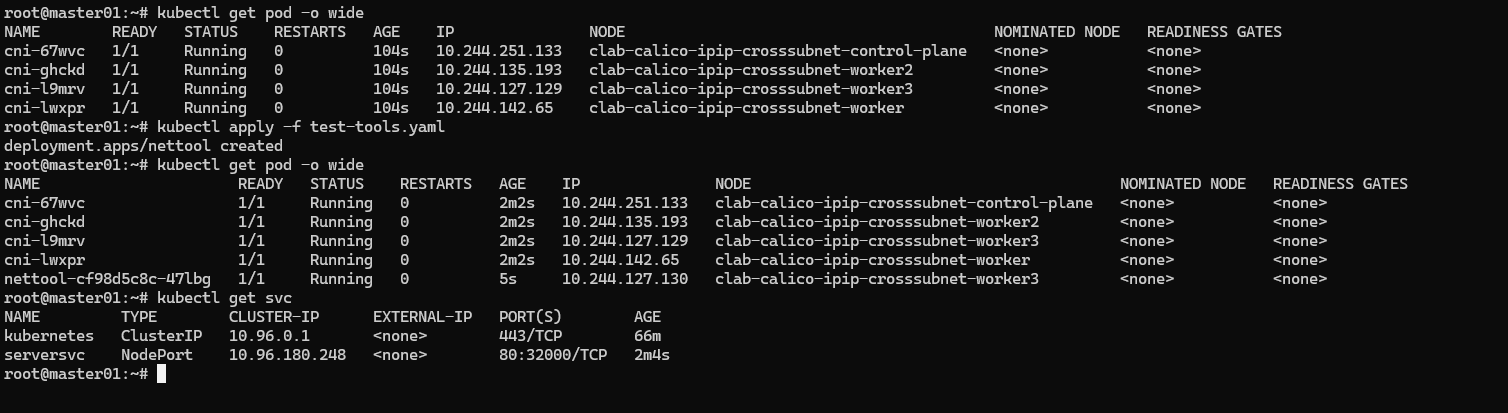

Testing the network

The test Pods run the nettools image:

# root@master01:~# cat test/cni.yaml
apiVersion: apps/v1
kind: DaemonSet
#kind: Deployment
metadata:
  labels:
    app: cni
  name: cni
spec:
  #replicas: 1
  selector:
    matchLabels:
      app: cni
  template:
    metadata:
      labels:
        app: cni
    spec:
      containers:
      - image: localhost:5001/devops/nettools:0.9
        command: ["sleep", "36000"]
        name: nettoolbox
        securityContext:
          privileged: true
---
apiVersion: v1
kind: Service
metadata:
  name: serversvc
spec:
  type: NodePort
  selector:
    app: cni
  ports:
  - name: cni
    port: 80
    targetPort: 80
    nodePort: 32000

test-tools.yaml

# root@master01:~# cat test-tools.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nettool
  labels:
    app: nettool
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nettool
  template:
    metadata:
      labels:
        app: nettool
    spec:
      # Pin the Pod to worker2
      nodeSelector:
        kubernetes.io/hostname: clab-calico-ipip-crosssubnet-worker2
      containers:
      - name: nettool
        image: localhost:5001/devops/nettools:0.9
        imagePullPolicy: Always
        command: ["sleep", "36000"]
        securityContext:
          privileged: true

Deploy the workloads:

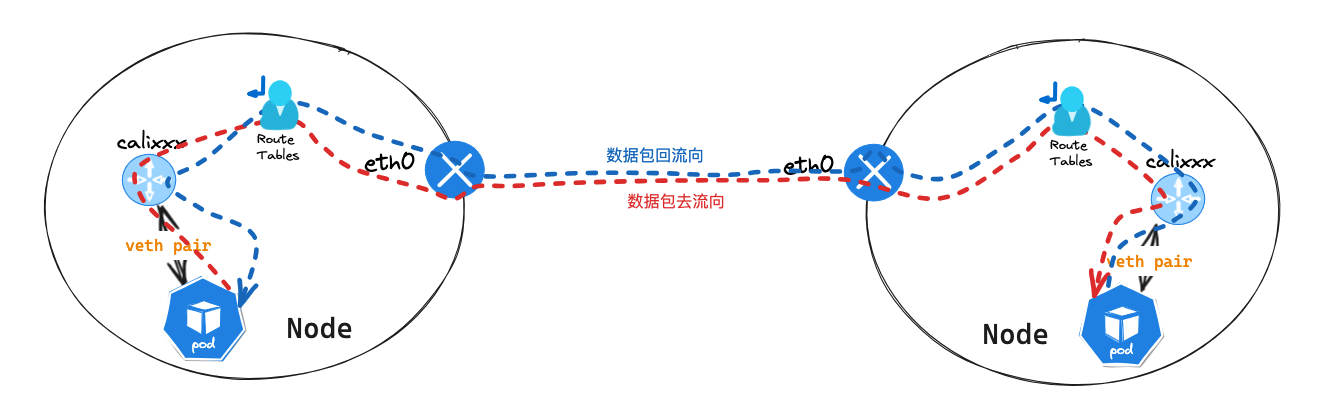

3. Testing the network

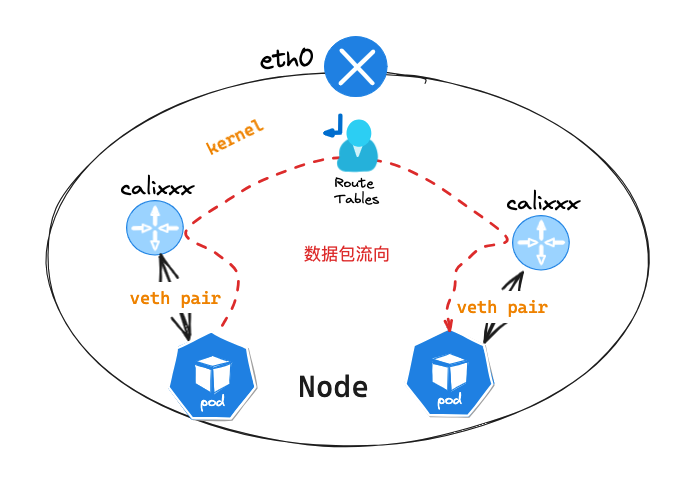

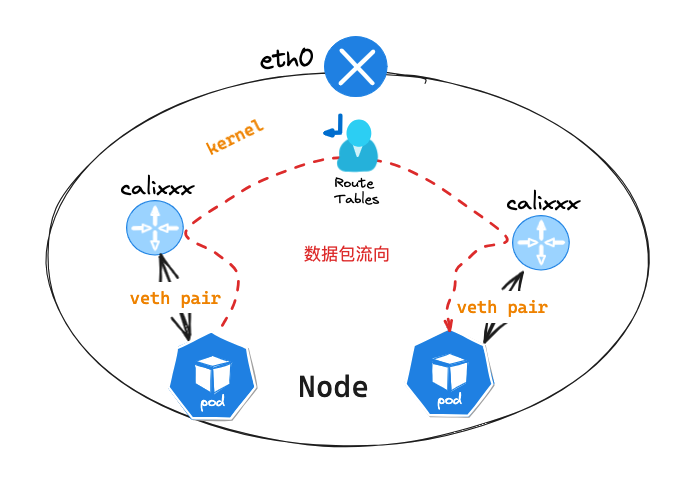

Pod-to-Pod communication on the same node

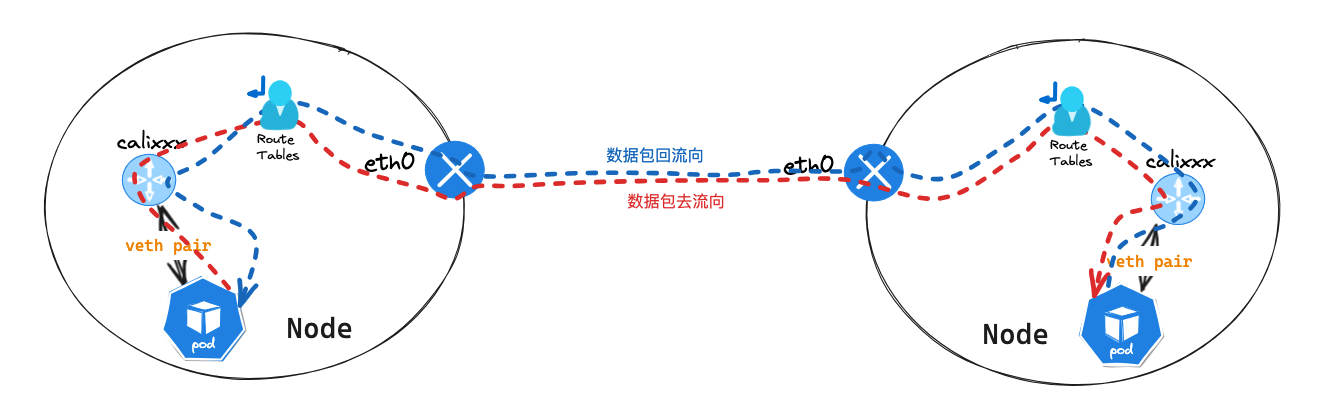

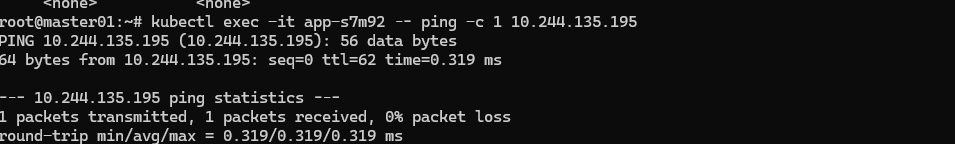

Pod-to-Pod communication across nodes in the same node subnet

root@master01:~/calico-clab# kubectl exec -it nettool-cf98d5c8c-vndfb -- ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host proto kernel_lo

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

4: eth0@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP group default

link/ether 3e:41:6e:b8:36:aa brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.244.142.66/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::3c41:6eff:feb8:36aa/64 scope link proto kernel_ll

valid_lft forever preferred_lft forever

root@master01:~/calico-clab# kubectl exec -it nettool-cf98d5c8c-vndfb -- ip r s

default via 169.254.1.1 dev eth0

169.254.1.1 dev eth0 scope link


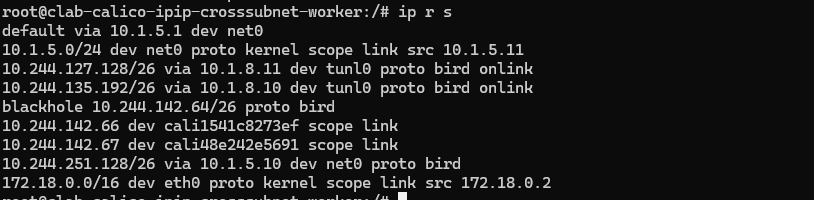

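The Pod details show that in Calico the Pod's IP address has a 32-bit mask. The address therefore identifies a single host rather than a subnet, and all traffic from this Pod to any other IP is routed through the default gateway 169.254.1.1.

Inspect the node that hosts the Pod.

Check the routing information: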

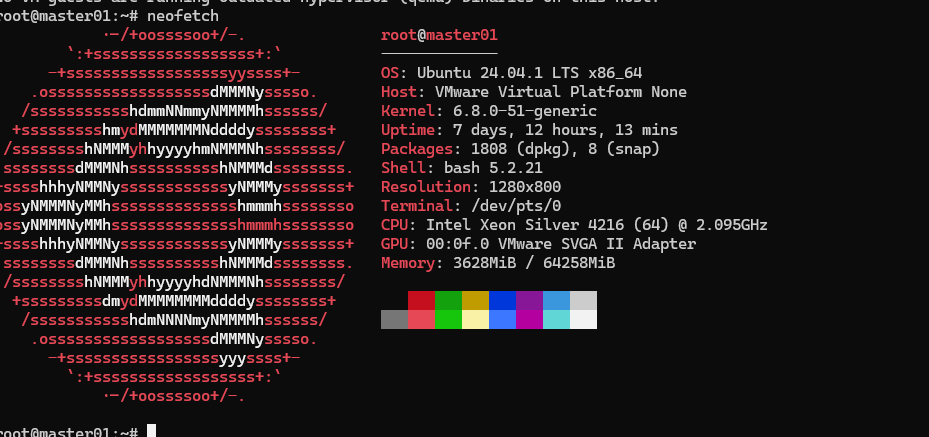

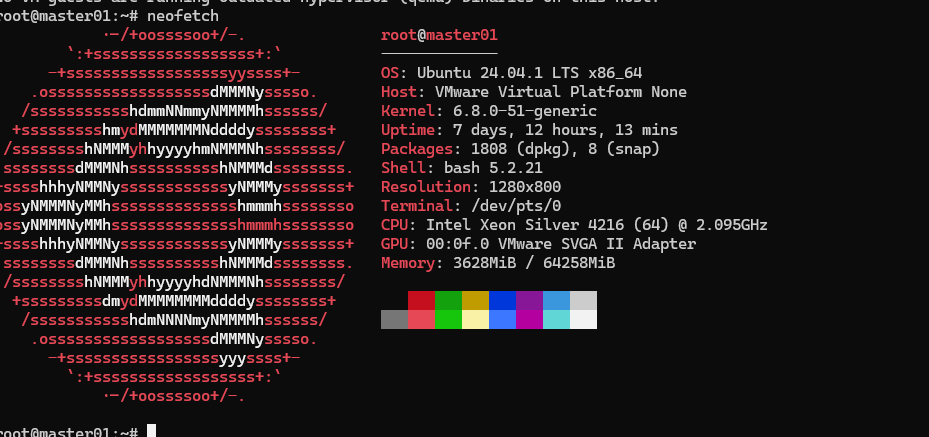

The command used here is neofetch:

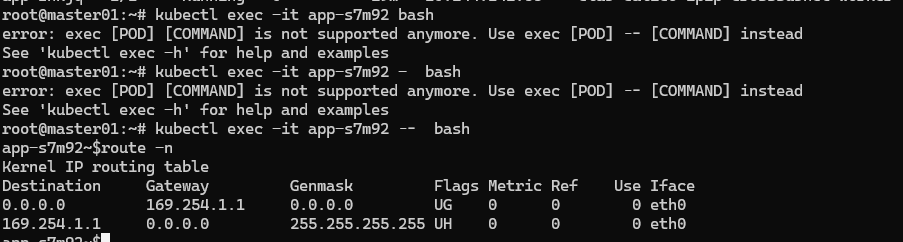

With this node operating system, kubectl exec -it hangs, probably because the version is too old.

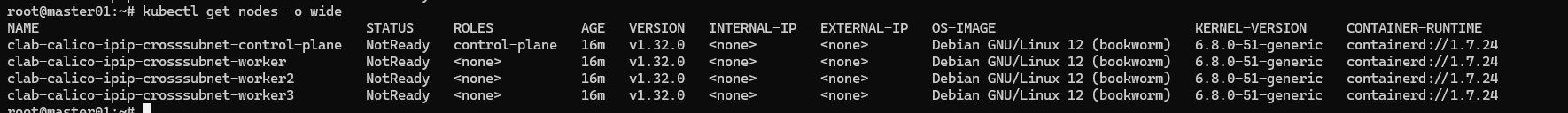

Next, try a kind cluster built from a different node image, and delete the old image.

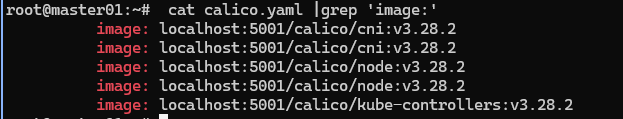

Pull the images:

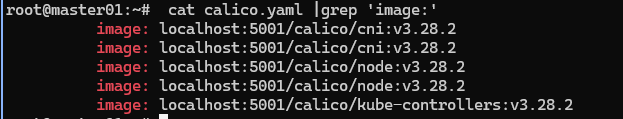

docker pull 10.7.20.12:5000/calico/cni:v3.28.2

docker pull 10.7.20.12:5000/calico/node:v3.28.2

docker pull 10.7.20.12:5000/calico/kube-controllers:v3.28.2

docker tag 10.7.20.12:5000/calico/cni:v3.28.2 localhost:5001/calico/cni:v3.28.2

docker tag 10.7.20.12:5000/calico/node:v3.28.2 localhost:5001/calico/node:v3.28.2

docker tag 10.7.20.12:5000/calico/kube-controllers:v3.28.2 localhost:5001/calico/kube-controllers:v3.28.2

docker push localhost:5001/calico/cni:v3.28.2

docker push localhost:5001/calico/node:v3.28.2

docker push localhost:5001/calico/kube-controllers:v3.28.2


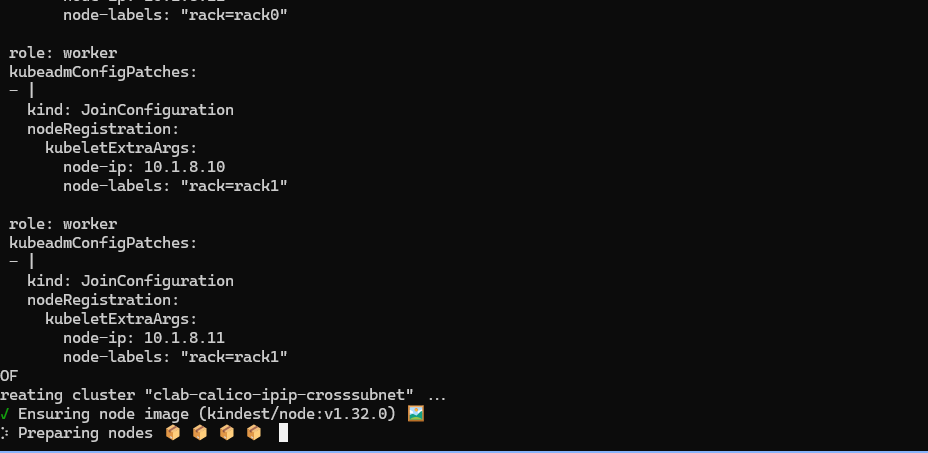

This attempt ran into problems, so switch to a different image version:

#install.sh

root@master01:~# cat install.sh

#!/bin/bash

date

set -v

# create registry container unless it already exists

reg_name='kind-registry'

reg_port='5001'

if [ "$(docker inspect -f '{{.State.Running}}' "${reg_name}" 2>/dev/null || true)" != 'true' ]; then

docker run \

-d --restart=always -p "127.0.0.1:${reg_port}:5000" --name "${reg_name}" \

registry:2

fi

# 1.prep noCNI env

cat <<EOF | kind create cluster --name=clab-calico-ipip-crosssubnet --image=kindest/node:v1.27.3 --config=-

kind: Cluster

apiVersion: kind.x-k8s.io/v1alpha4

containerdConfigPatches:

- |-

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."localhost:${reg_port}"]

endpoint = ["http://${reg_name}:5000"]

networking:

# kind ships its own default CNI (kindnet); disable it because we install the CNI (Calico) ourselves

disableDefaultCNI: true

# Pod subnet used by the nodes

podSubnet: "10.244.0.0/16"

nodes:

- role: control-plane

kubeadmConfigPatches:

- |

kind: InitConfiguration

nodeRegistration:

kubeletExtraArgs:

node-ip: 10.1.5.10

node-labels: "rack=rack0"

- role: worker

kubeadmConfigPatches:

- |

kind: JoinConfiguration

nodeRegistration:

kubeletExtraArgs:

node-ip: 10.1.5.11

node-labels: "rack=rack0"

- role: worker

kubeadmConfigPatches:

- |

kind: JoinConfiguration

nodeRegistration:

kubeletExtraArgs:

node-ip: 10.1.8.10

node-labels: "rack=rack1"

- role: worker

kubeadmConfigPatches:

- |

kind: JoinConfiguration

nodeRegistration:

kubeletExtraArgs:

node-ip: 10.1.8.11

node-labels: "rack=rack1"

EOF

# connect the registry to the cluster network if not already connected

if [ "$(docker inspect -f='{{json .NetworkSettings.Networks.kind}}' "${reg_name}")" = 'null' ]; then

docker network connect "kind" "${reg_name}"

fi

# Document the local registry

# https://github.com/kubernetes/enhancements/tree/master/keps/sig-cluster-lifecycle/generic/1755-communicating-a-local-registry

cat <<EOF | kubectl apply -f -

apiVersion: v1

kind: ConfigMap

metadata:

name: local-registry-hosting

namespace: kube-public

data:

localRegistryHosting.v1: |

host: "localhost:${reg_port}"

help: "https://kind.sigs.k8s.io/docs/user/local-registry/"

EOF

# 2.remove taints

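# Kubernetes v1.25+ no longer sets the node-role.kubernetes.io/master taint; remove the control-plane taint instead

kubectl taint nodes $(kubectl get nodes -o name | grep control-plane) node-role.kubernetes.io/control-plane:NoSchedule-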

kubectl get nodes -o wide

# 3.install necessary tools

# cd /usr/bin/

# curl -o calicoctl -O -L "https://gh.api.99988866.xyz/https://github.com/projectcalico/calico/releases/download/v3.23.2/calicoctl-linux-amd64"

# chmod +x calicoctl

for i in $(docker ps -a --format "table {{.Names}}" | grep calico)

do

echo $i

docker cp /usr/bin/calicoctl $i:/usr/bin/calicoctl

docker cp /usr/bin/ping $i:/usr/bin/ping

docker exec -it $i bash -c "sed -i -e 's/deb.debian.org/mirrors.aliyun.com/g' /etc/apt/sources.list"

docker exec -it $i bash -c "apt-get -y update >/dev/null && apt-get -y install net-tools tcpdump lrzsz bridge-utils >/dev/null 2>&1"

done


docker pull 10.7.20.12:5000/calico/cni:v3.23.5

docker pull 10.7.20.12:5000/calico/node:v3.23.5

docker pull 10.7.20.12:5000/calico/kube-controllers:v3.23.5

docker tag 10.7.20.12:5000/calico/cni:v3.23.5 localhost:5001/calico/cni:v3.23.5

docker tag 10.7.20.12:5000/calico/node:v3.23.5 localhost:5001/calico/node:v3.23.5

docker tag 10.7.20.12:5000/calico/kube-controllers:v3.23.5 localhost:5001/calico/kube-controllers:v3.23.5

docker push localhost:5001/calico/cni:v3.23.5

docker push localhost:5001/calico/node:v3.23.5

docker push localhost:5001/calico/kube-controllers:v3.23.5


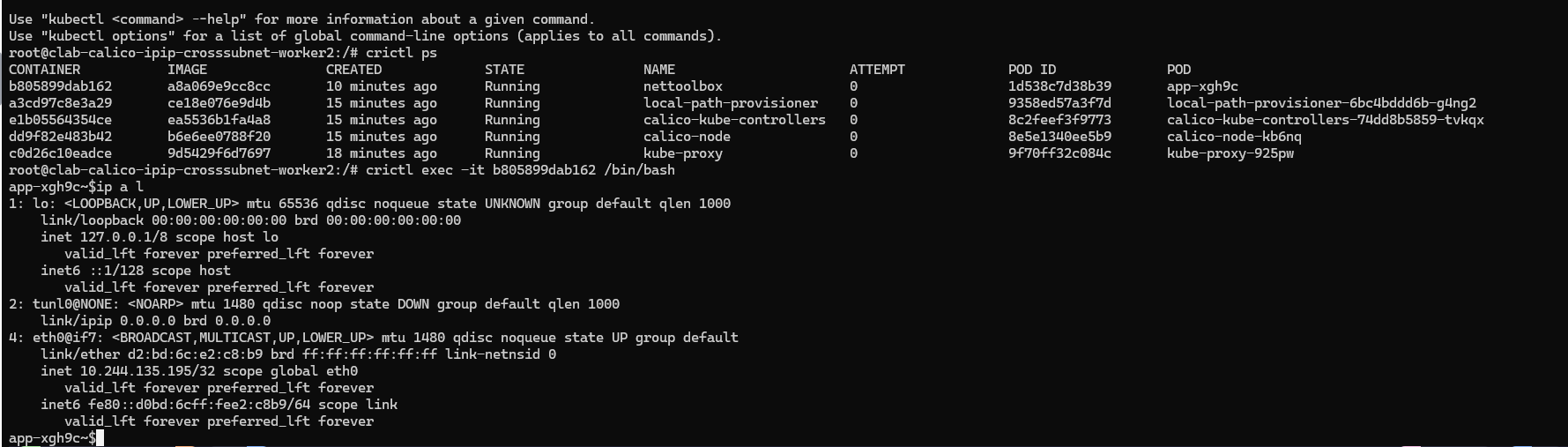

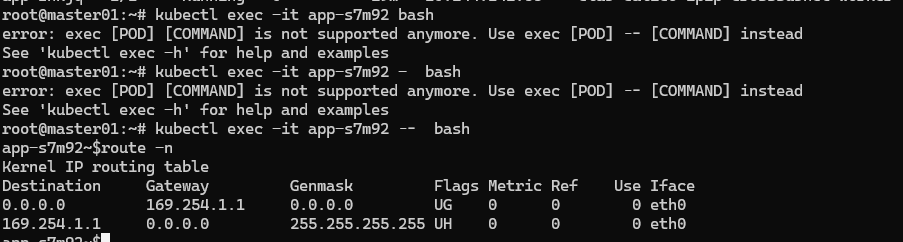

If kubectl exec cannot connect to a Pod, run the commands directly on the corresponding node instead.

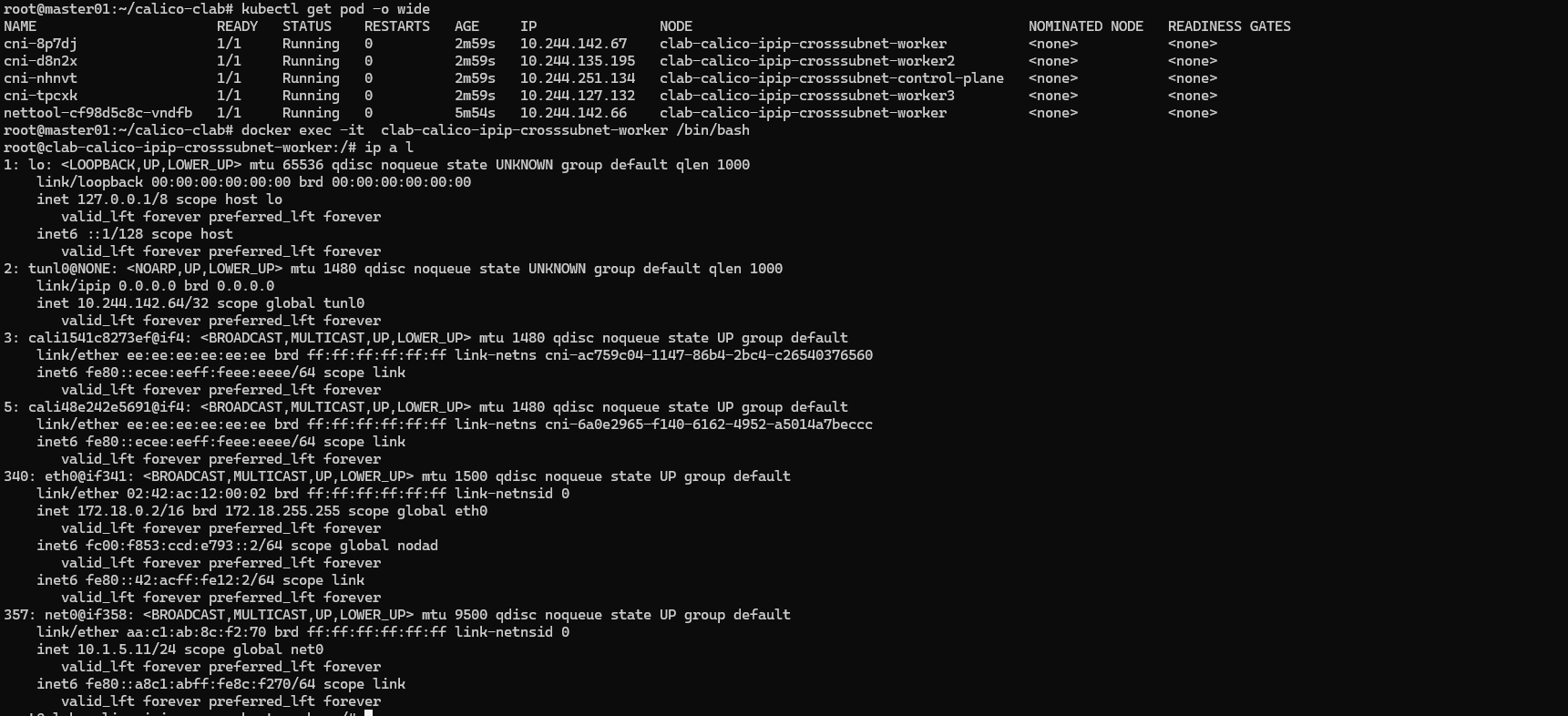

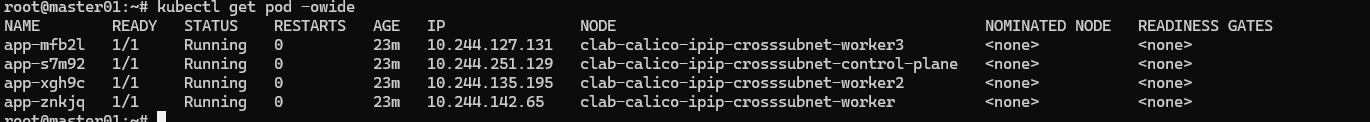

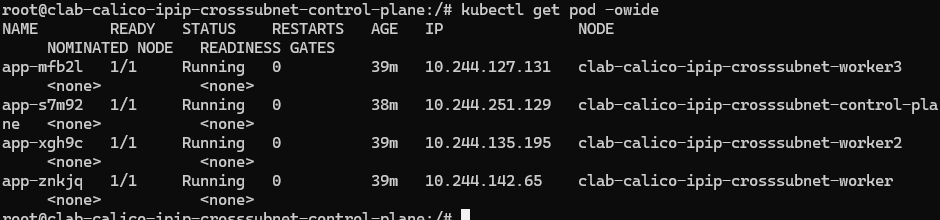

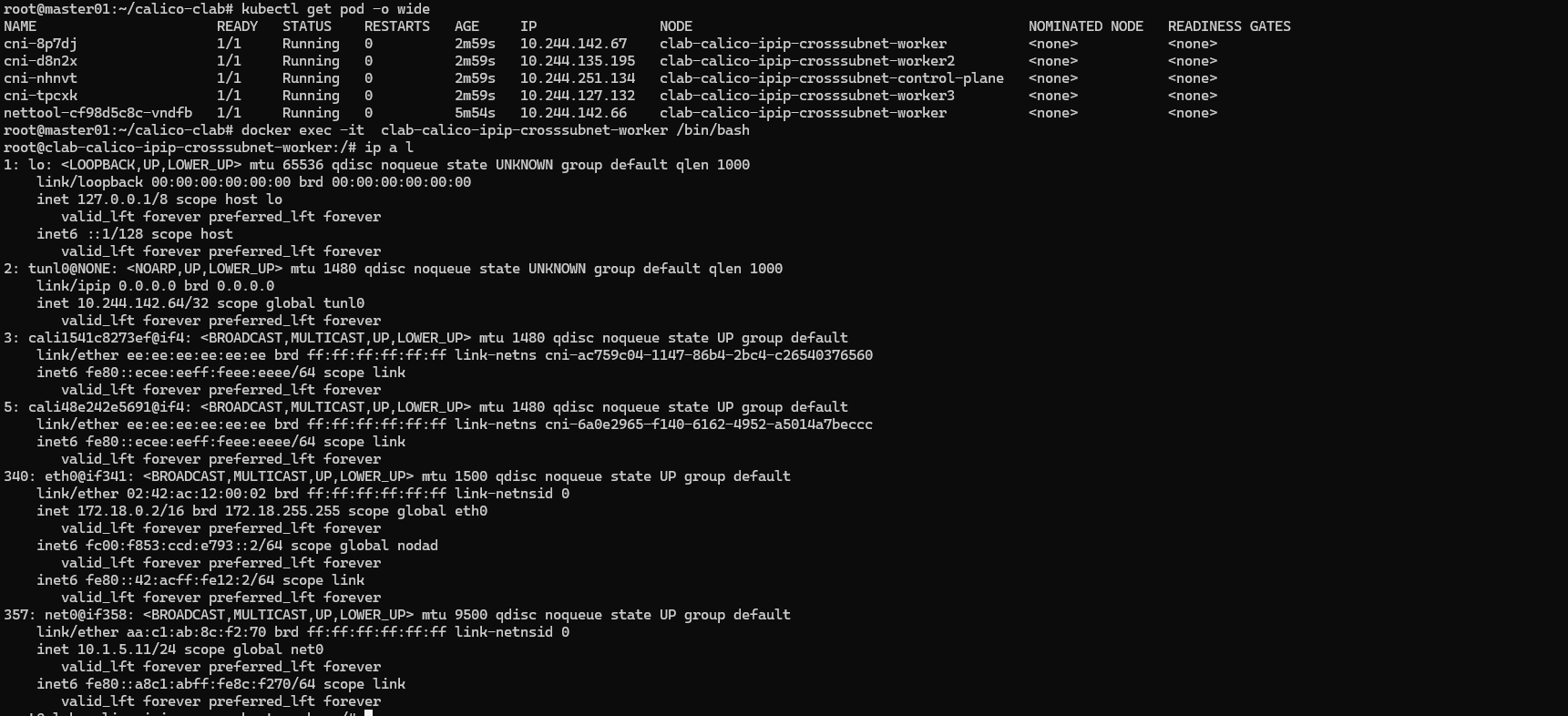

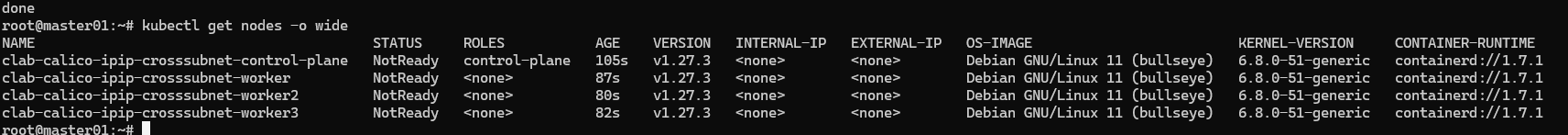

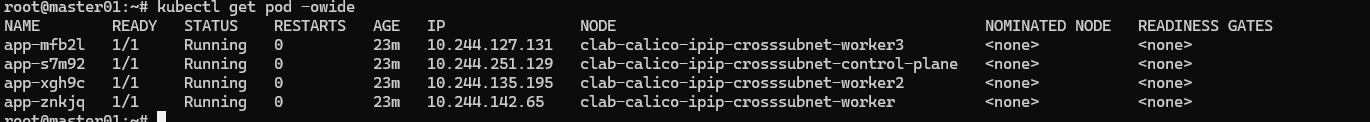

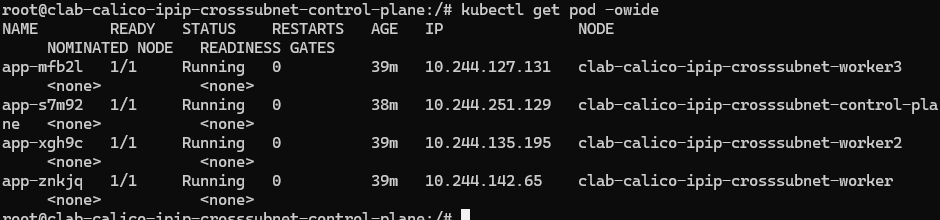

List the Pods:

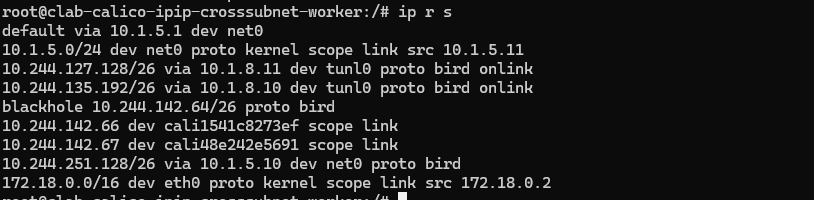

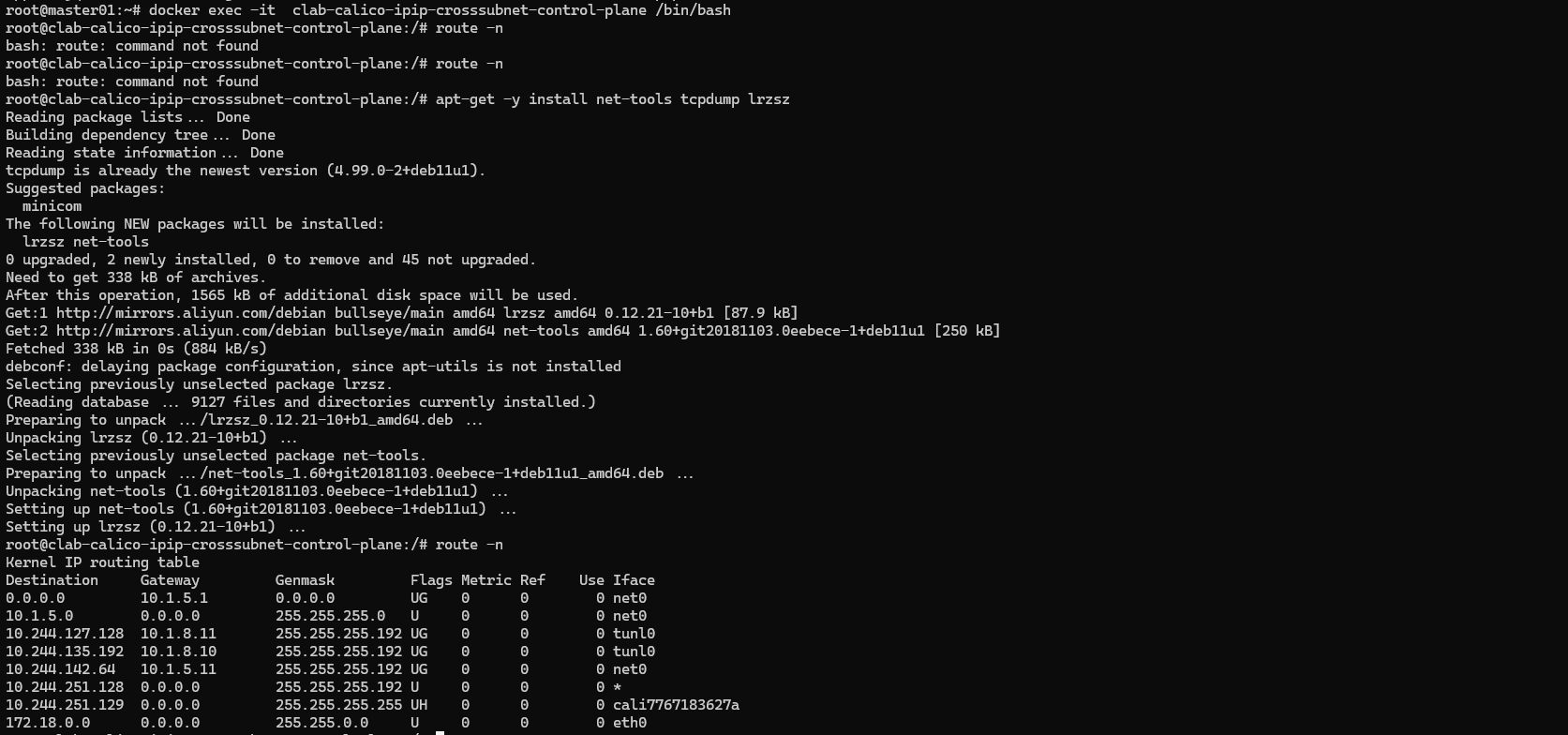

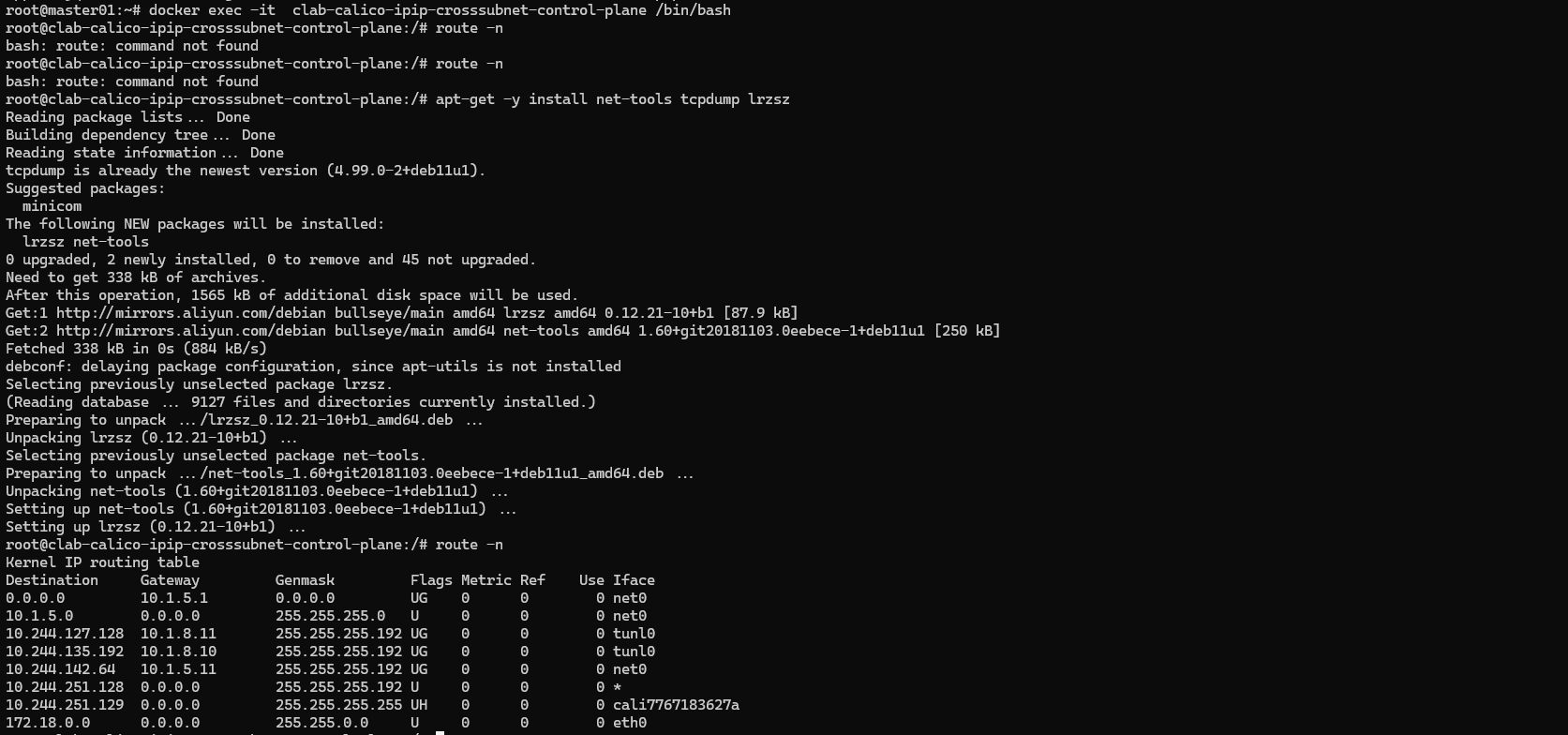

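Check the routes on the master node.

Traffic to addresses in 10.244.142.64/26 leaves through the net0 interface, so it is routed directly without encapsulation, while traffic to addresses in 10.244.127.128/26 and 10.244.135.192/26 leaves through the tunl0 interface and is IPIP-encapsulated.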
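Calico's default IPAM assigns Pod addresses to nodes in /26 blocks, which is why the routes in this section are /26 networks. As a rough illustration, the block that a given Pod address belongs to can be computed as follows; cidr_network is a hypothetical helper name, not a Calico tool, and the address is the Pod IP shown earlier.

```shell
#!/usr/bin/env bash

# Print the network (block) that contains the given IPv4 address
# for the given prefix length, in CIDR notation.
cidr_network() {
  local a b c d prefix=$2
  IFS=. read -r a b c d <<< "$1"
  local ip=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local net=$(( ip & mask ))
  echo "$(( (net >> 24) & 255 )).$(( (net >> 16) & 255 )).$(( (net >> 8) & 255 )).$(( net & 255 ))/${prefix}"
}

cidr_network 10.244.142.66 26   # prints "10.244.142.64/26"
```

A node's routing table therefore needs one route per remote block rather than one per Pod.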

Next, look at the Pod on the master node.

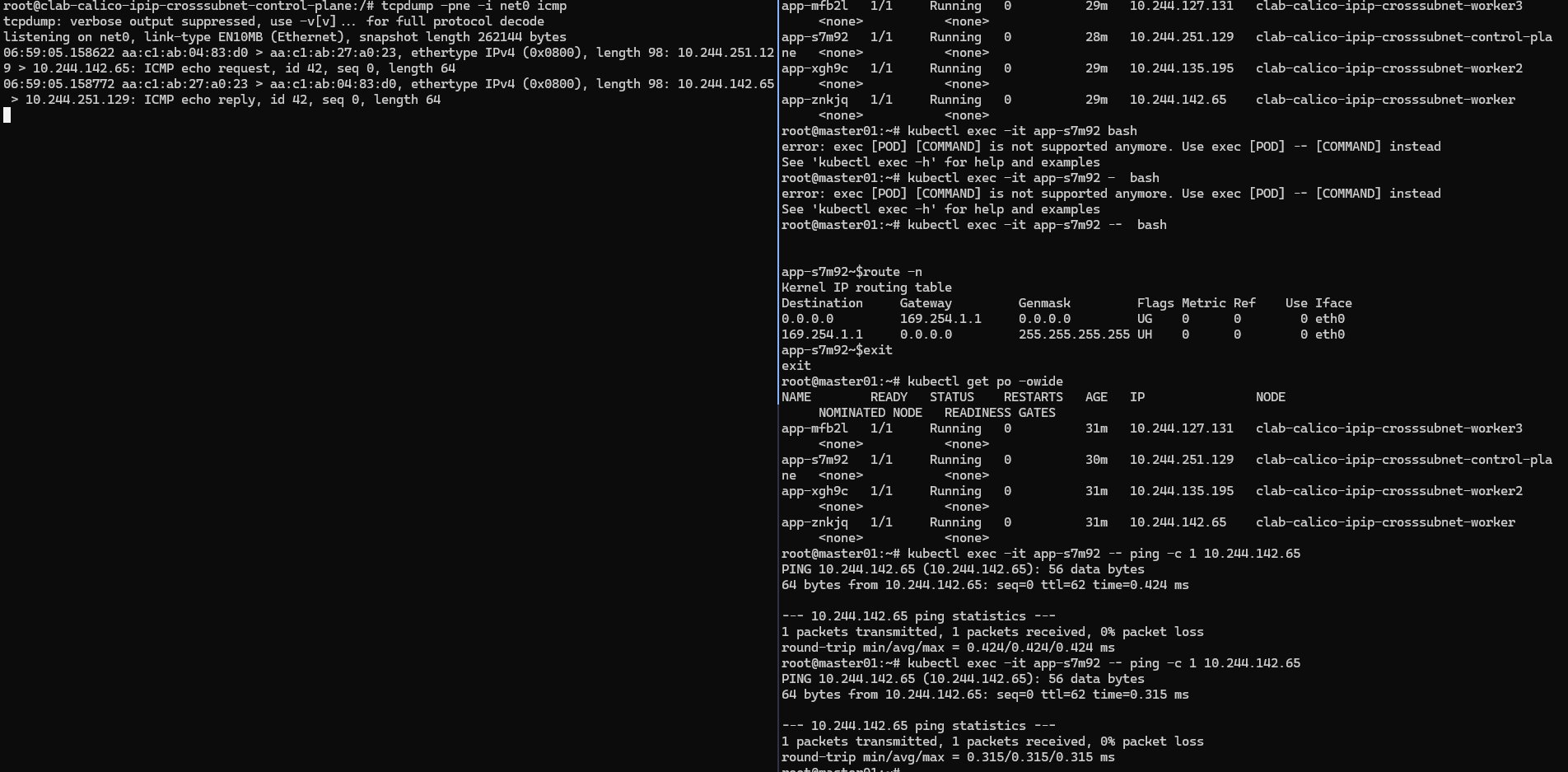

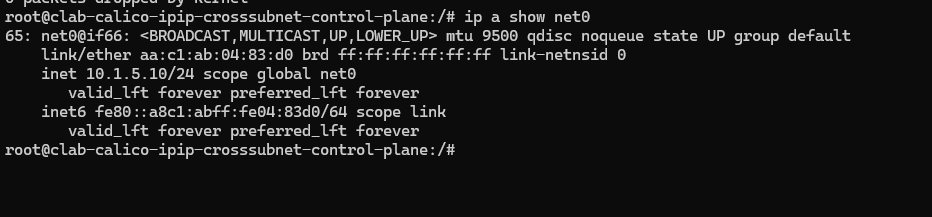

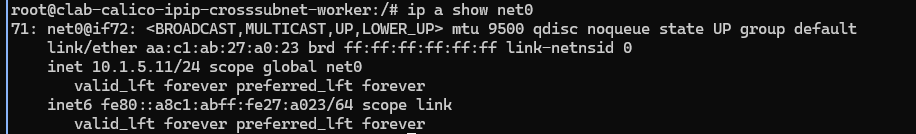

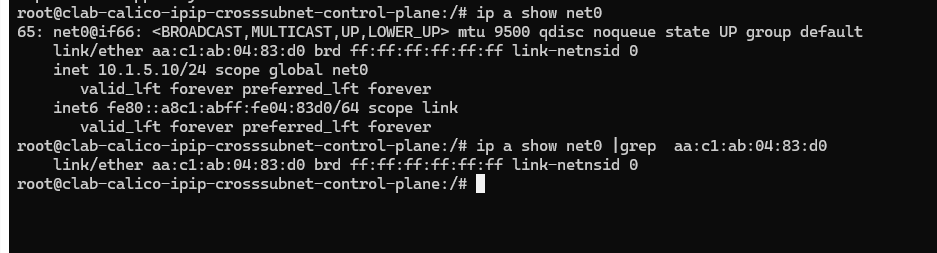

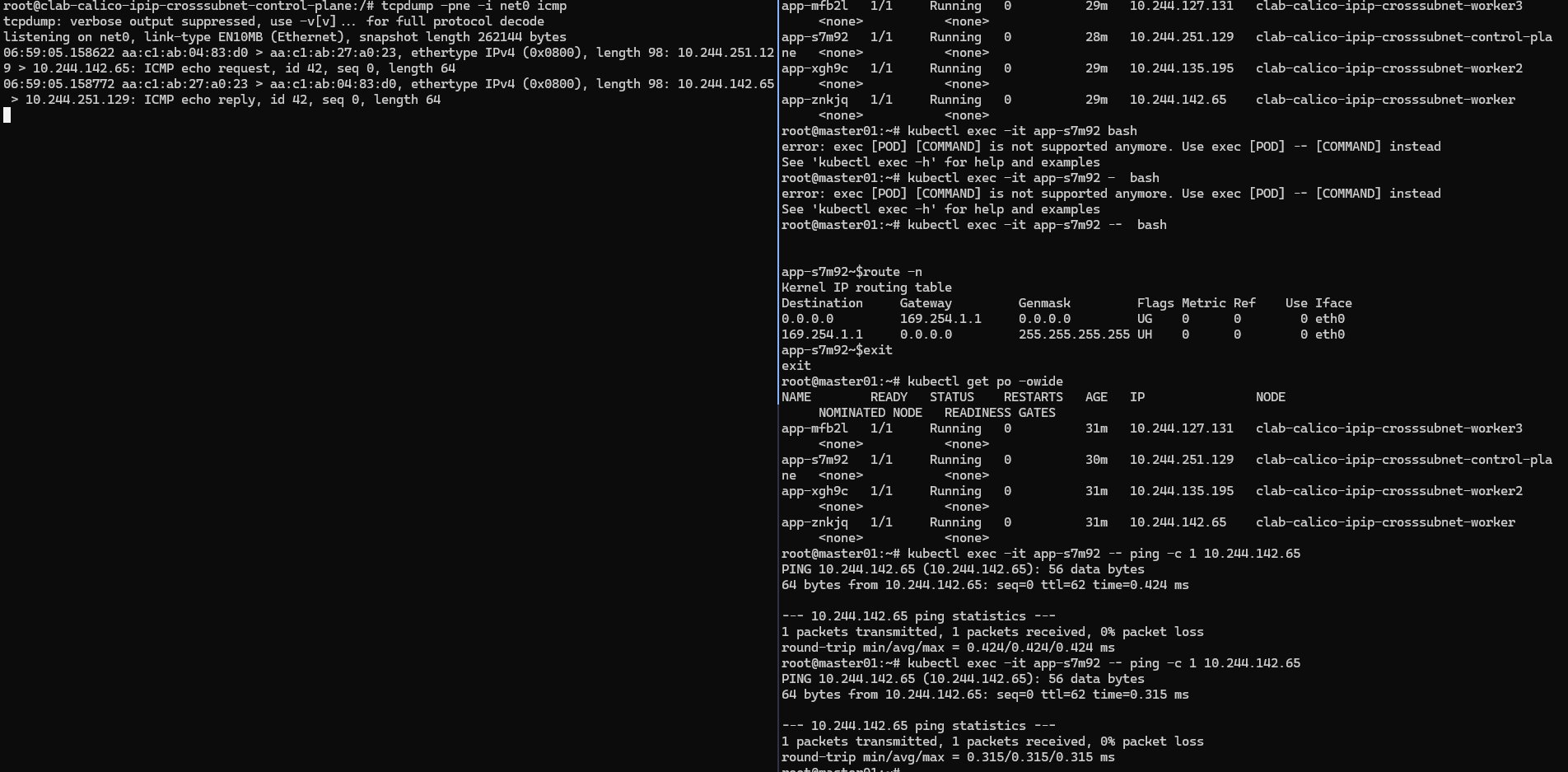

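Capturing Pod traffic within the same subnet

In the capture, the source and destination IPs are those of the source and destination Pods. The source and destination MAC addresses are those of the net0 interfaces on the hosts of the source Pod and the destination Pod, respectively.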

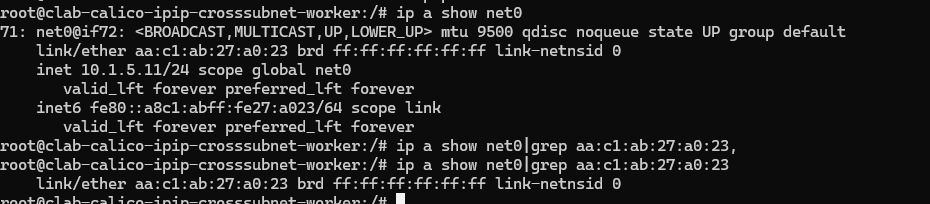

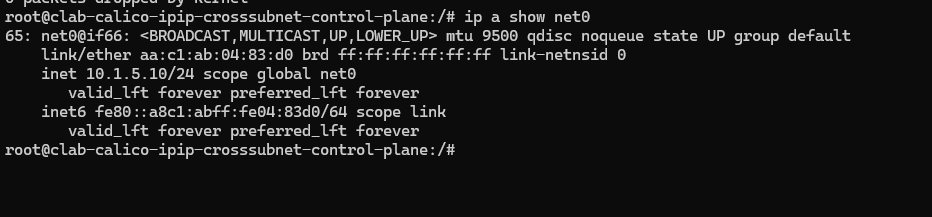

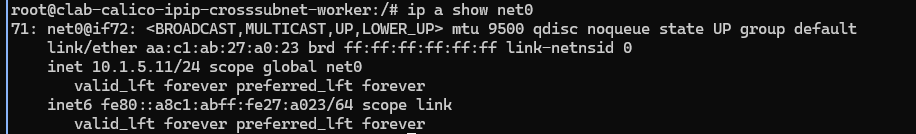

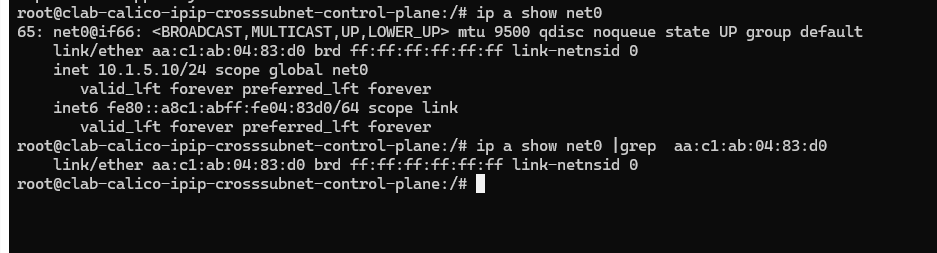

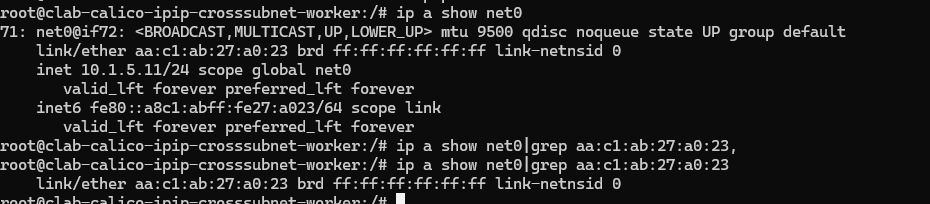

The MAC addresses match.

The packets above carry no IPIP encapsulation.

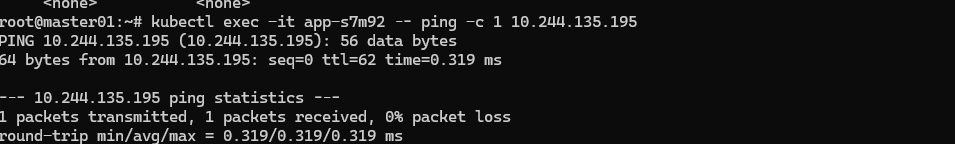

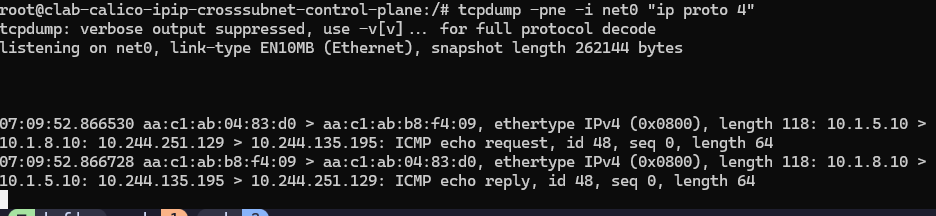

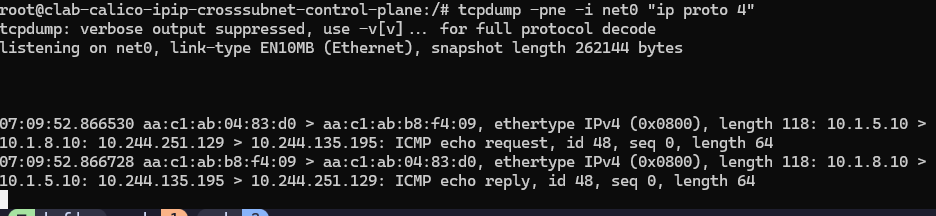

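Pod communication across subnets

From the Pod on the master node to the worker2 node.

The capture point is the net0 interface of the host of the source Pod (the master node):

The capture shows two layers of IP headers: cross-subnet traffic is IPIP-encapsulated.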
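The extra IP header also explains the MTU of 1480 reported earlier for tunl0 and for the Pod's eth0: IPIP adds one IPv4 header of 20 bytes (without options) to every packet. A small sketch of that arithmetic, assuming a 1500-byte underlay MTU; ipip_mtu is a hypothetical helper name used only for illustration.

```shell
#!/usr/bin/env bash

# Print the largest inner packet size that fits into the underlay MTU
# once the outer IPv4 header of the IPIP tunnel has been added.
ipip_mtu() {
  local underlay_mtu=$1
  local overhead=20   # outer IPv4 header without options
  echo $(( underlay_mtu - overhead ))
}

ipip_mtu 1500   # prints "1480"
```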

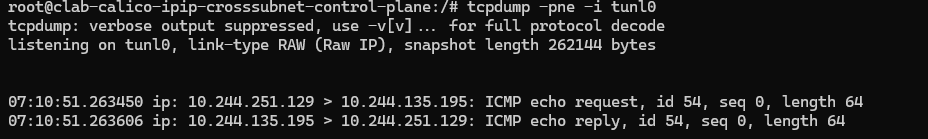

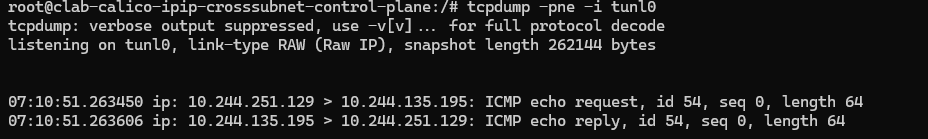

Now capture on tunl0 as well.

On tunl0 the outgoing packets do not show the outer (encapsulating) IP addresses.

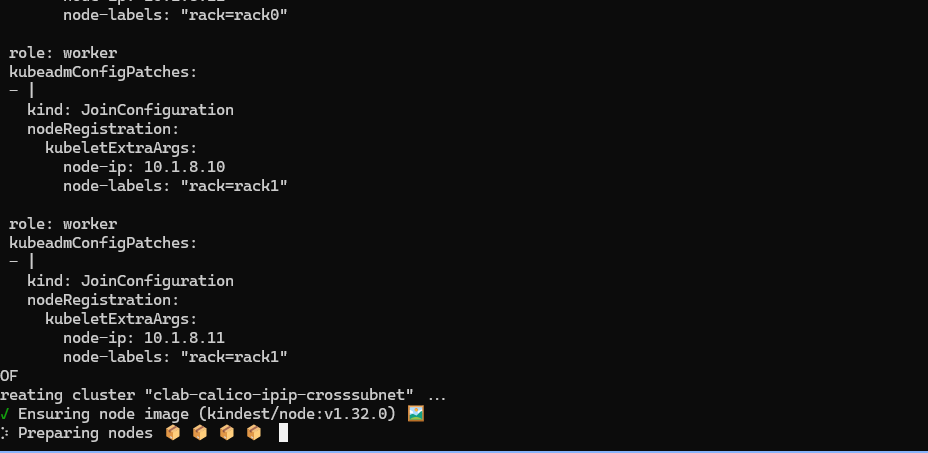

The modified install.sh:

root@master01:~# cat install.sh
#!/bin/bash
date
set -v

# create registry container unless it already exists
reg_name='kind-registry'
reg_port='5001'
if [ "$(docker inspect -f '{{.State.Running}}' "${reg_name}" 2>/dev/null || true)" != 'true' ]; then
  docker run \
    -d --restart=always -p "127.0.0.1:${reg_port}:5000" --name "${reg_name}" \
    registry:2
fi

# 1.prep noCNI env
cat <<EOF | kind create cluster --name=clab-calico-ipip-crosssubnet --image=kindest/node:v1.27.3 --config=-
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
containerdConfigPatches:
- |-
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."localhost:${reg_port}"]
    endpoint = ["http://${reg_name}:5000"]
networking:
  # kind ships its own default CNI (kindnet); disable it because we install the CNI (Calico) ourselves
  disableDefaultCNI: true
  # Pod subnet used by the nodes
  podSubnet: "10.244.0.0/16"
nodes:
- role: control-plane
  kubeadmConfigPatches:
  - |
    kind: InitConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-ip: 10.1.5.10
        node-labels: "rack=rack0"
- role: worker
  kubeadmConfigPatches:
  - |
    kind: JoinConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-ip: 10.1.5.11
        node-labels: "rack=rack0"
- role: worker
  kubeadmConfigPatches:
  - |
    kind: JoinConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-ip: 10.1.8.10
        node-labels: "rack=rack1"
- role: worker
  kubeadmConfigPatches:
  - |
    kind: JoinConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-ip: 10.1.8.11
        node-labels: "rack=rack1"
EOF

# connect the registry to the cluster network if not already connected
if [ "$(docker inspect -f='{{json .NetworkSettings.Networks.kind}}' "${reg_name}")" = 'null' ]; then
  docker network connect "kind" "${reg_name}"
fi

# Document the local registry
# https://github.com/kubernetes/enhancements/tree/master/keps/sig-cluster-lifecycle/generic/1755-communicating-a-local-registry
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ConfigMap
metadata:
  name: local-registry-hosting
  namespace: kube-public
data:
  localRegistryHosting.v1: |
    host: "localhost:${reg_port}"
    help: "https://kind.sigs.k8s.io/docs/user/local-registry/"
EOF

# 2.remove taints
# Kubernetes v1.25+ no longer sets the node-role.kubernetes.io/master taint; remove the control-plane taint instead
kubectl taint nodes $(kubectl get nodes -o name | grep control-plane) node-role.kubernetes.io/control-plane:NoSchedule-
kubectl get nodes -o wide

# 3.install necessary tools
# cd /usr/bin/
# curl -o calicoctl -O -L "https://gh.api.99988866.xyz/https://github.com/projectcalico/calico/releases/download/v3.23.2/calicoctl-linux-amd64"
# chmod +x calicoctl
# Copy calicoctl into every kind node container and install debugging tools (ping now comes from iputils-ping)
for i in $(docker ps -a --format "table {{.Names}}" | grep calico)
do
  echo "$i"
  docker cp /root/calicoctl "$i":/usr/bin/calicoctl
  # This node image is Debian-based, so switch apt to a Debian mirror
  docker exec -it "$i" bash -c "sed -i -e 's/deb.debian.org/mirrors.aliyun.com/g' /etc/apt/sources.list"
  docker exec -it "$i" bash -c "apt-get -y update >/dev/null && apt-get -y install iputils-ping libc6 net-tools tcpdump lrzsz bridge-utils >/dev/null 2>&1"
done

Dockerfile that switches a Debian image to a mirror for its apt sources:

FROM debian:latest

RUN sed -i 's/deb.debian.org/mirrors.aliyun.com/g' /etc/apt/sources.list

RUN apt-get update

Download the Calico manifest and the calicoctl binary:

# Download the Calico manifest (-O keeps the remote file name, calico.yaml)

curl -LO https://raw.githubusercontent.com/projectcalico/calico/v3.28.2/manifests/calico.yaml

# Download the calicoctl binary and make it executable

curl -L -o calicoctl https://github.com/projectcalico/calico/releases/download/v3.23.5/calicoctl-linux-amd64

chmod +x calicoctl

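This article installs Calico using the manifest method.

References:

Using overlay networks in complex network environments

Not yet summarized here; see the vxlan-ipip documentation: https://docs.tigera.io/calico/latest/networking/configuring/vxlan-ipip

Sources for this article: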

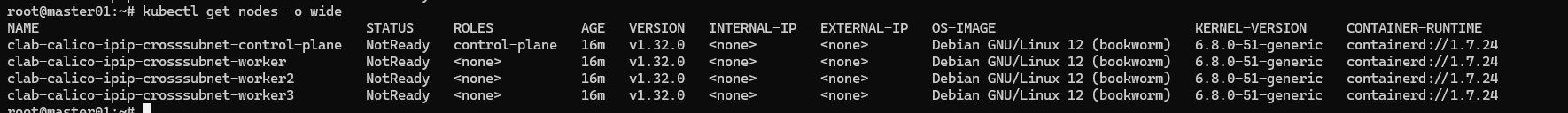

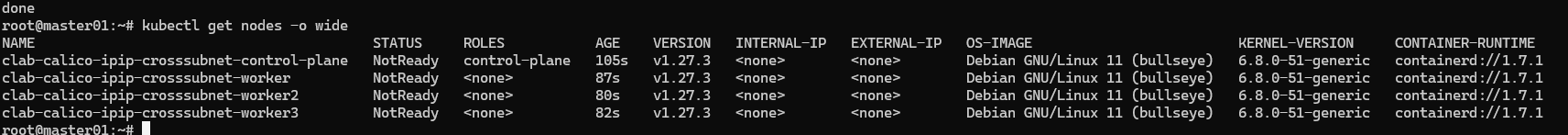


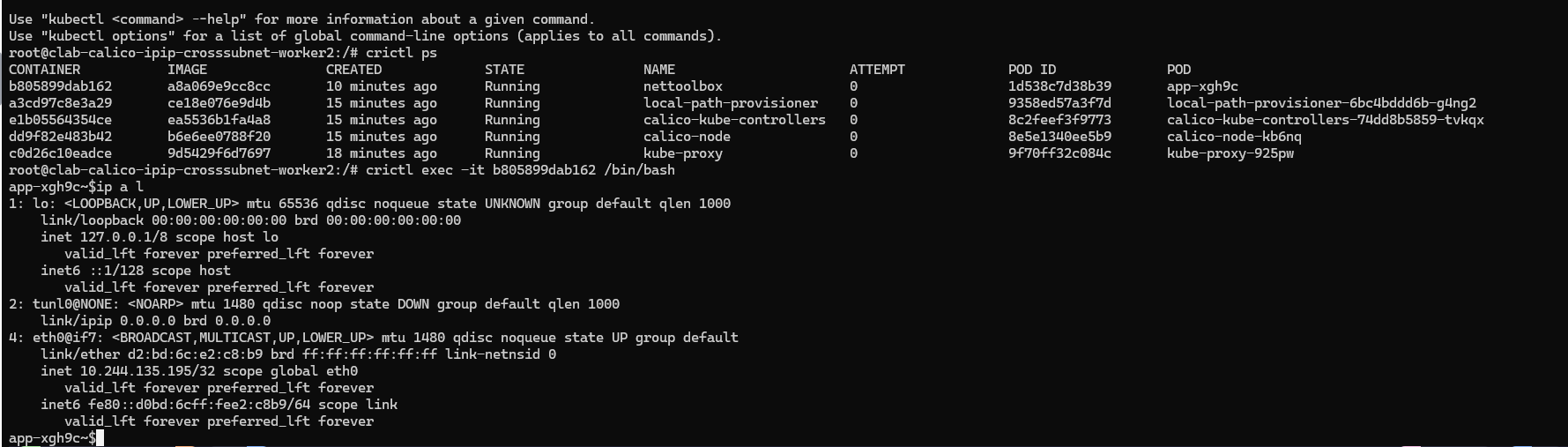
