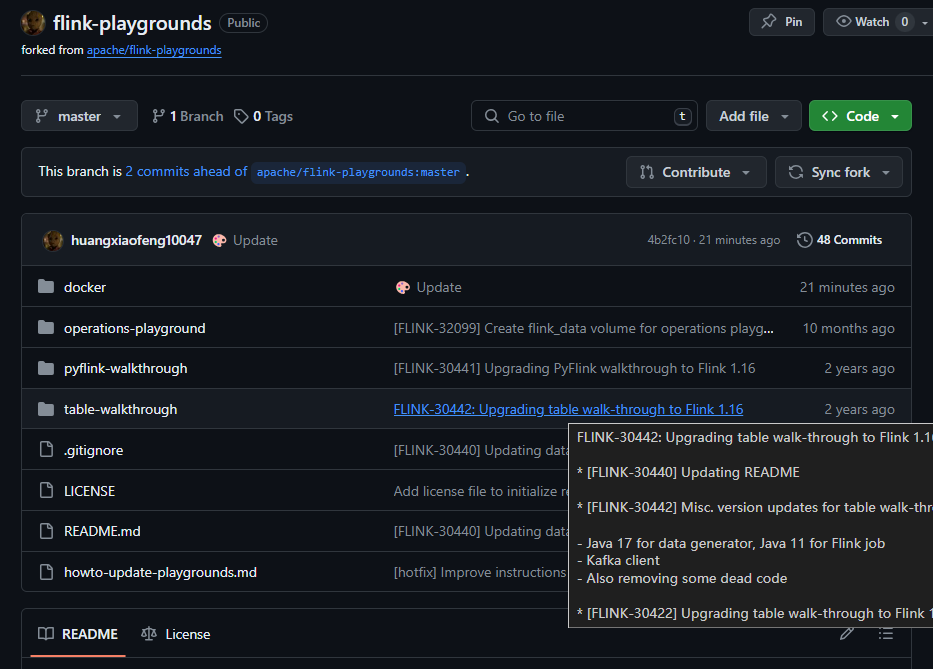

The flink-playgrounds GitHub repository:

https://github.com/huangxiaofeng10047/flink-playgrounds

Nice touch: the commit messages use gitmoji.

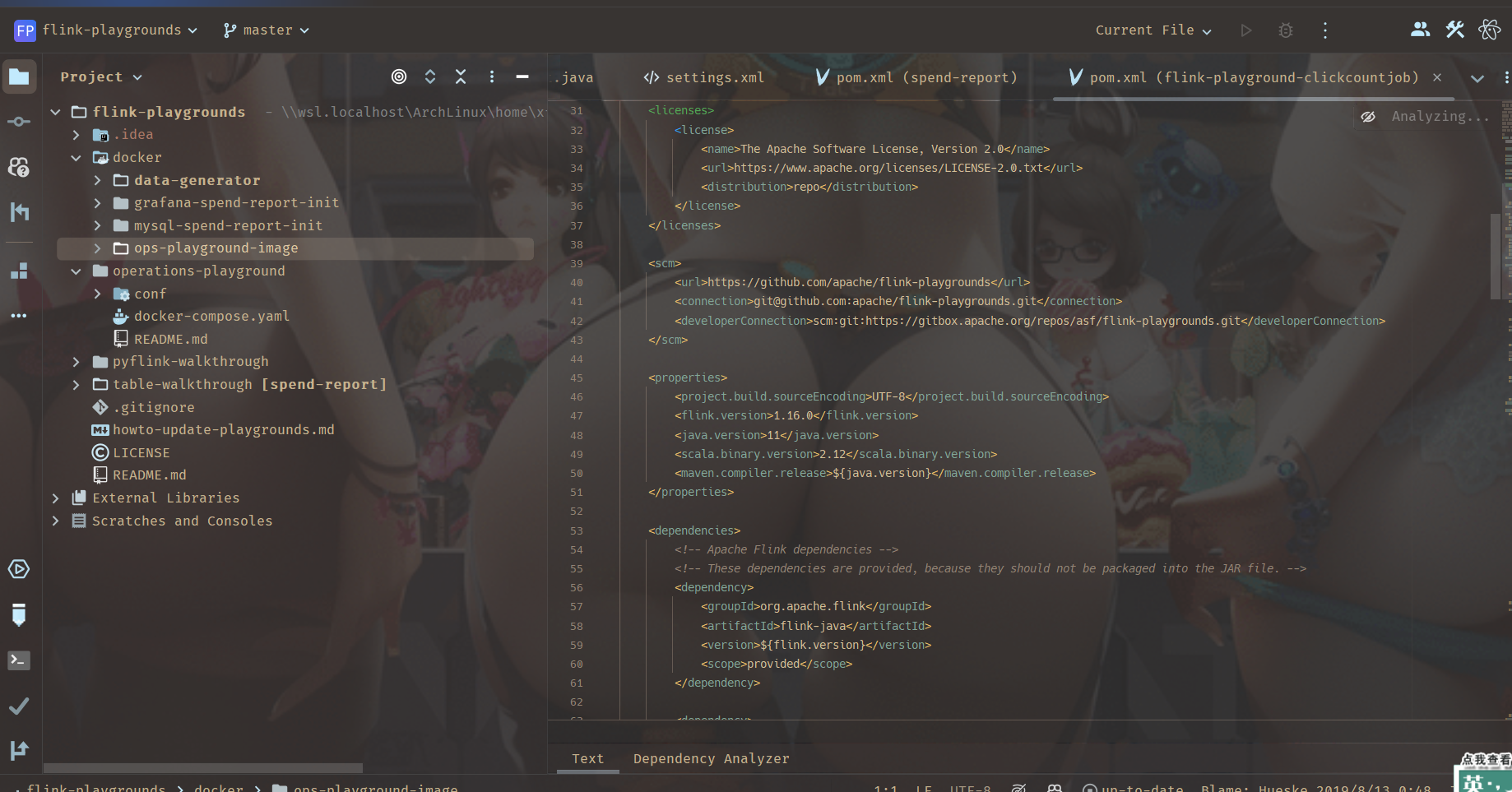

Import it into IntelliJ IDEA to see what it looks like:

Start the playground, then start the job:
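Below is a minimal Python sketch of these start-up steps. It assumes the commands from the upstream operations-playground README (`docker-compose build`, `docker-compose up -d`, `docker-compose ps`). It also assumes you run them from the repository's `operations-playground` directory. Check the README of your checkout before relying on either assumption. Starting the services also submits the Click Event Count job, because the client container's command runs `flink run`.

```python
import subprocess
from typing import List

# Assumption: docker-compose.yaml lives in the repository's operations-playground/ directory.
PLAYGROUND_DIR = "operations-playground"

# The start-up commands in order, each as an argv list for subprocess.
START_COMMANDS: List[List[str]] = [
    ["docker-compose", "build"],     # build the playground images
    ["docker-compose", "up", "-d"],  # start every service in the background
    ["docker-compose", "ps"],        # list the containers and their state
]


def start_playground(cwd: str = PLAYGROUND_DIR) -> None:
    """Run the start-up commands one by one, stopping at the first failure."""
    for argv in START_COMMANDS:
        subprocess.run(argv, cwd=cwd, check=True)
```

Call `start_playground()` from the repository root.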

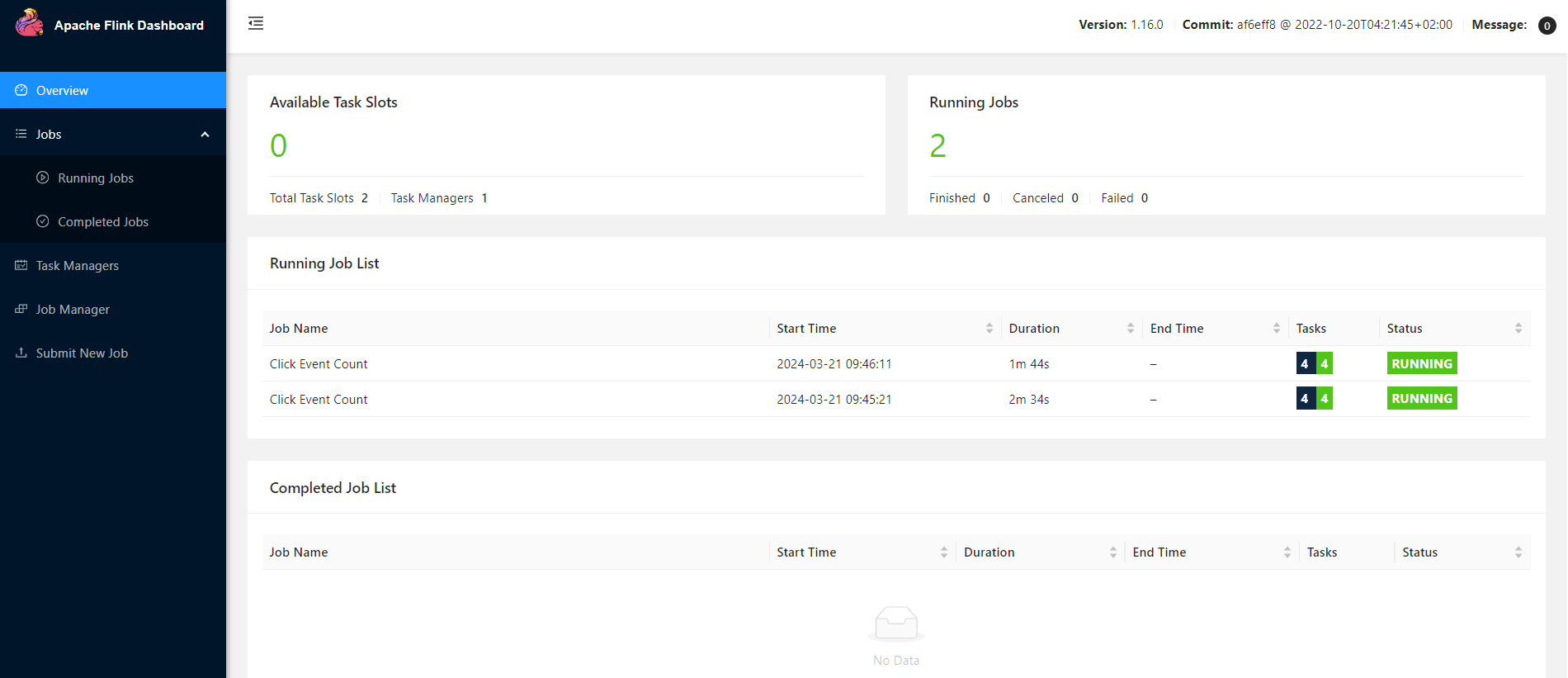

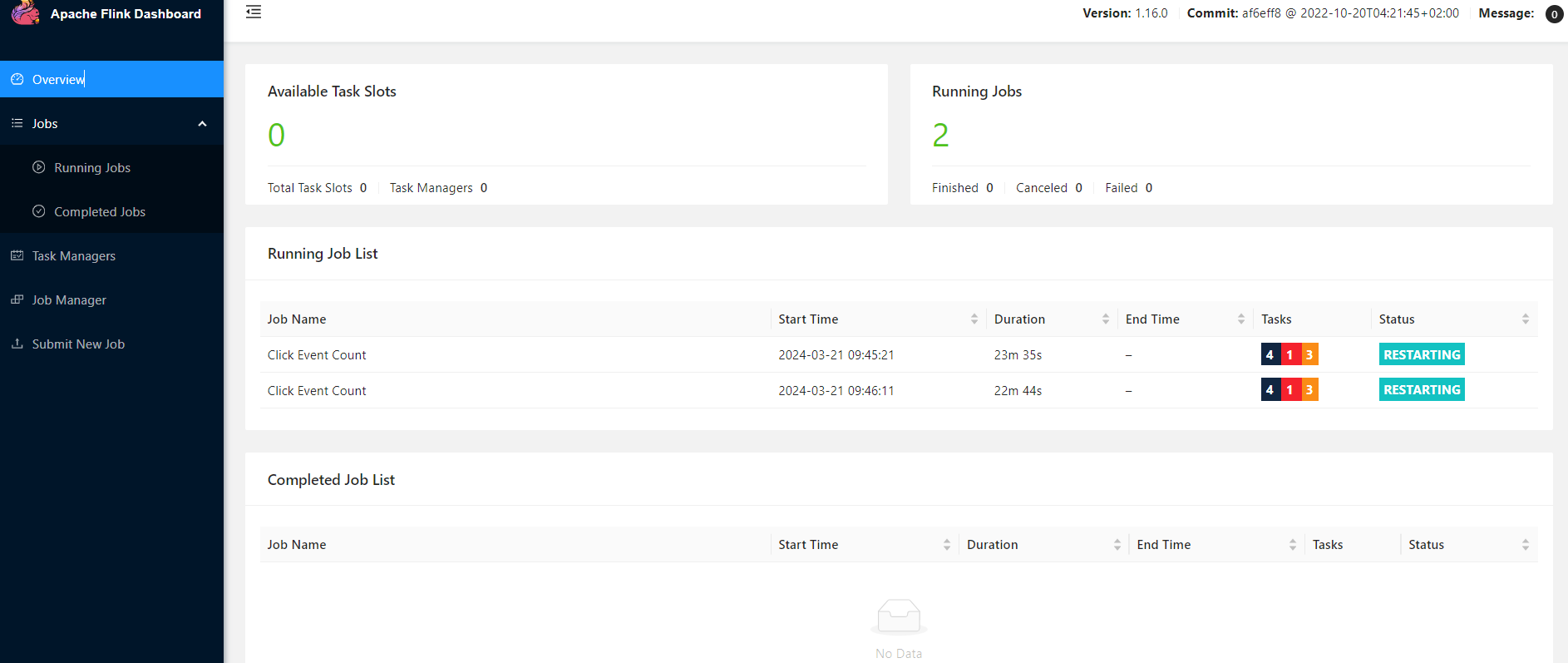

Open http://localhost:8081/#/overview to look at the Flink Web UI.

Check the state, and show the help for the CLI commands:
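Every Flink CLI call in this walkthrough runs through a one-off `client` container, as the `docker-compose run --no-deps client flink list` invocation mentioned further down shows. Below is a small Python helper that builds and runs such calls. The helper names and the working directory are illustrative assumptions.

```python
import subprocess
from typing import List


def flink_cli(*args: str) -> List[str]:
    """argv that runs `flink <args>` in a one-off container of the `client` service.

    --no-deps stops docker-compose from also starting the services that
    `client` depends on, since those are already running.
    """
    return ["docker-compose", "run", "--no-deps", "client", "flink", *args]


def run_flink(*args: str, cwd: str = "operations-playground") -> str:
    """Run a Flink CLI command and return what it printed to stdout."""
    result = subprocess.run(flink_cli(*args), cwd=cwd, capture_output=True, text=True, check=True)
    return result.stdout
```

For example, `run_flink("list")` lists the jobs, and `run_flink("--help")` prints the CLI help.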

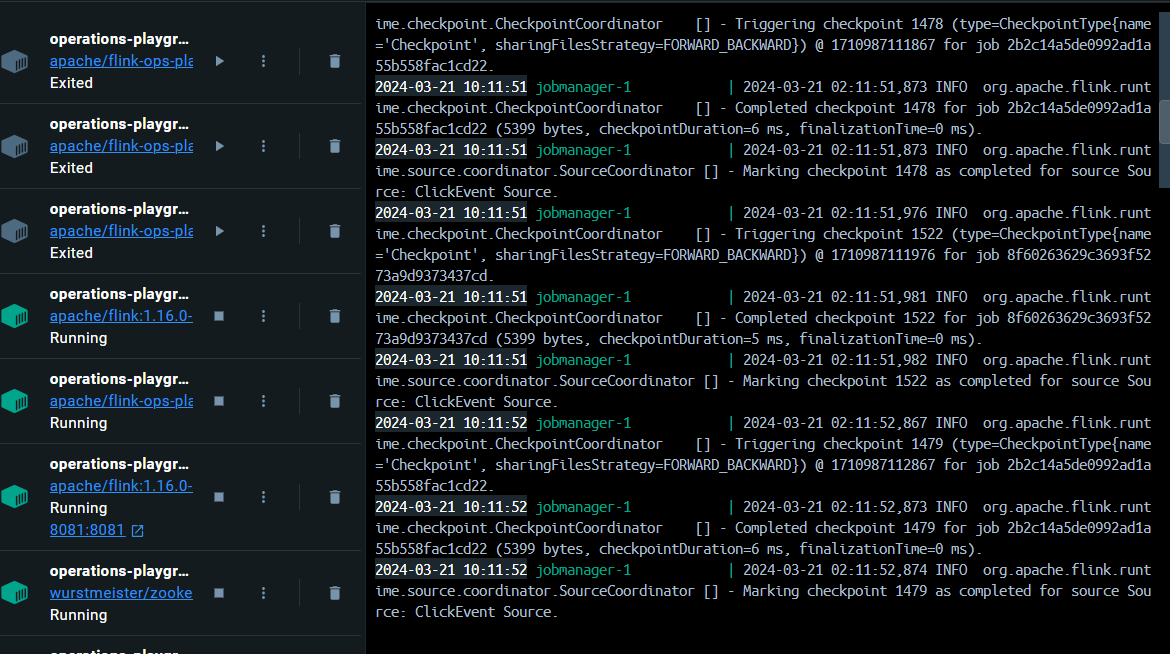

Observing failure and recovery

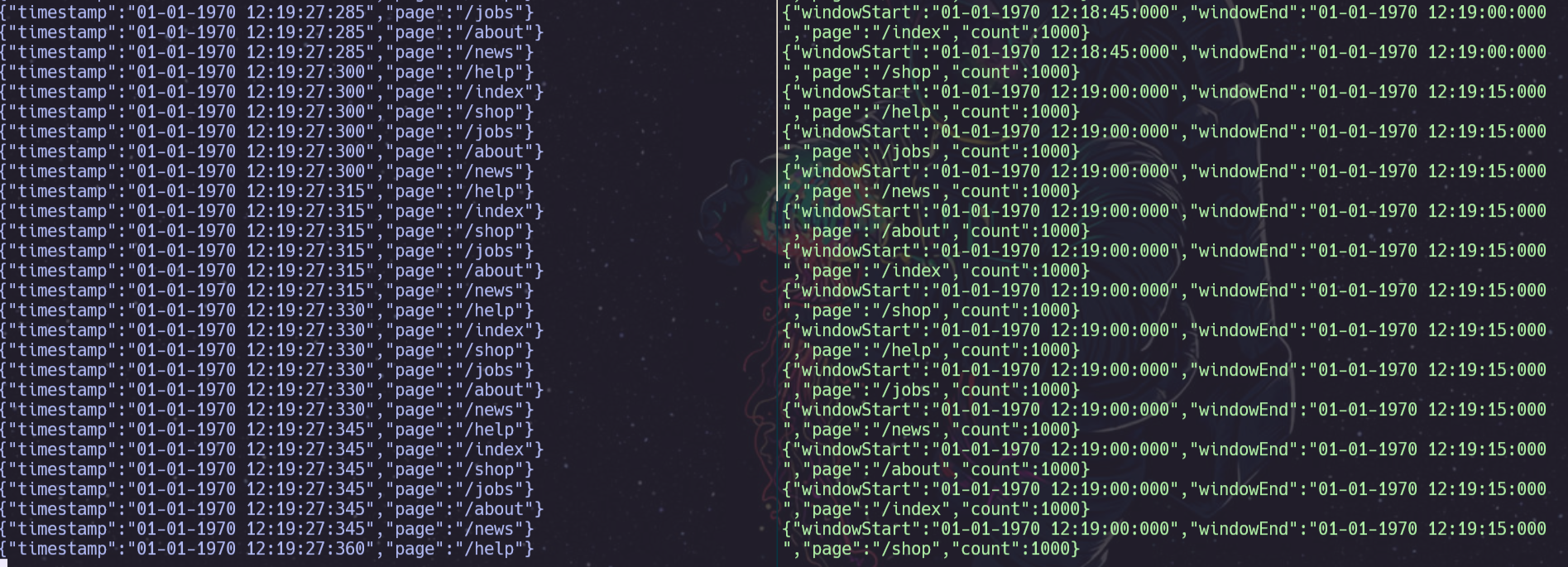

1. Observe the output
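The upstream playground docs follow the job's results by running Kafka's console consumer inside the `kafka` container against the `output` topic. The service name, broker address and topic name below are taken from those docs and are assumptions about your docker-compose.yaml. A sketch:

```python
import subprocess
from typing import List


def console_consumer(topic: str) -> List[str]:
    """argv that follows a Kafka topic with the console consumer in the `kafka` container."""
    return [
        "docker-compose", "exec", "kafka",
        "kafka-console-consumer.sh",
        "--bootstrap-server", "localhost:9092",  # broker address as seen from inside the container
        "--topic", topic,
    ]


def follow_topic(topic: str = "output", cwd: str = "operations-playground") -> None:
    """Print records from `topic` until interrupted with Ctrl+C."""
    try:
        subprocess.run(console_consumer(topic), cwd=cwd)
    except KeyboardInterrupt:
        pass  # stopping the consumer is the normal way to end this step
```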

2. Simulate a failure

Here we simulate the loss of the TaskManager process:
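This sketch assumes the TaskManager service is called `taskmanager`, as in the upstream docker-compose.yaml. Killing its container simulates the lost process. The sketch also polls `GET /jobs` (the endpoint queried with curl further down) so you can watch the job's status change. Which intermediate statuses appear (for example `RESTARTING`) depends on the Flink version and the restart strategy.

```python
import json
import subprocess
import time
import urllib.request
from typing import Callable, List


def kill_taskmanager(cwd: str = "operations-playground") -> None:
    """Simulate losing the TaskManager process by killing its container."""
    subprocess.run(["docker-compose", "kill", "taskmanager"], cwd=cwd, check=True)


def job_status(job_id: str, base: str = "http://localhost:8081") -> str:
    """Current status of one job, read from GET /jobs."""
    with urllib.request.urlopen(f"{base}/jobs") as response:
        jobs = json.load(response)["jobs"]
    return next(job["status"] for job in jobs if job["id"] == job_id)


def watch(fetch: Callable[[], str], polls: int, interval: float = 5.0) -> List[str]:
    """Poll `fetch` `polls` times; return the statuses seen, collapsing consecutive repeats."""
    seen: List[str] = []
    for i in range(polls):
        status = fetch()
        if not seen or seen[-1] != status:
            seen.append(status)
        if i < polls - 1:
            time.sleep(interval)
    return seen
```

Usage: after `kill_taskmanager()`, run `watch(lambda: job_status("<job-id>"), polls=24)` to sample the status every five seconds for about two minutes.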

Check again after a few minutes.

Then restart it and see what happens:
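Under the same service-name assumption, `docker-compose up -d taskmanager` brings the container back. The TaskManager then registers with the JobManager again, and the job can get its slots back. `GET /overview` is one way to confirm this. A sketch:

```python
import json
import subprocess
import urllib.request


def restart_taskmanager(cwd: str = "operations-playground") -> None:
    """Start the killed `taskmanager` container again (assumed service name)."""
    subprocess.run(["docker-compose", "up", "-d", "taskmanager"], cwd=cwd, check=True)


def fetch_overview(base: str = "http://localhost:8081") -> dict:
    """GET /overview: cluster-wide counts of TaskManagers, slots and jobs."""
    with urllib.request.urlopen(f"{base}/overview") as response:
        return json.load(response)


def slot_summary(overview: dict) -> str:
    """One-line summary of a GET /overview reply."""
    return (
        f'{overview["taskmanagers"]} TaskManager(s), '
        f'{overview["slots-available"]}/{overview["slots-total"]} slots free, '
        f'{overview["jobs-running"]} job(s) running'
    )
```

After `restart_taskmanager()`, `print(slot_summary(fetch_overview()))` shows whether the TaskManager is back and the job is running.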

Checking again, the service is back to normal.

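Note that each run of `docker-compose run --no-deps client flink list` actually starts a new client container to execute the command. I ran it three times, so there are now three such containers.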

`curl localhost:8081/jobs` returns `{"jobs":[{"id":"025d98f000d1068b800af3dc51bcd953","status":"RUNNING"}]}`
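Here is the same request from Python, with the job ids filtered by status. This is a minimal standard-library sketch, and the helper names are illustrative.

```python
import json
import urllib.request
from typing import List


def list_jobs(base: str = "http://localhost:8081") -> dict:
    """GET /jobs from the JobManager REST API and decode the JSON body."""
    with urllib.request.urlopen(f"{base}/jobs") as response:
        return json.load(response)


def running_job_ids(payload: dict) -> List[str]:
    """Ids of the jobs whose status is RUNNING in a GET /jobs reply."""
    return [job["id"] for job in payload["jobs"] if job["status"] == "RUNNING"]
```

With the response shown above, `running_job_ids(list_jobs())` returns `["025d98f000d1068b800af3dc51bcd953"]`. That id is the JobID needed in the next section.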

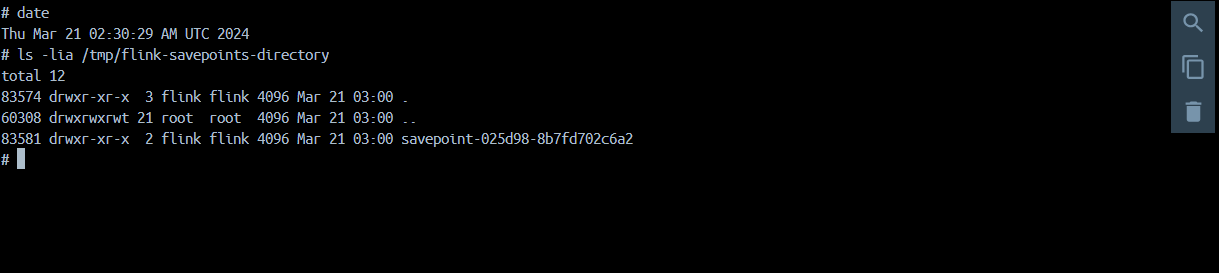

Stopping the job

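To stop a job gracefully, use the "stop" command of either the CLI or the REST API. For this you need the job's JobID, which you can get by listing all running jobs or from the Web UI. With the JobID, you can go ahead and stop the job: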

- CLI

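With the CLI, `flink stop <job-id>` stops the job with a savepoint and prints the savepoint path. The Python sketch below runs that command in a `client` container and extracts the path from the output. The regular expression assumes a line such as `Savepoint completed. Path: file:/tmp/flink-savepoints-directory/savepoint-...`, as shown in the upstream docs. It may need adjusting for other Flink versions.

```python
import re
import subprocess
from typing import List, Optional

# Assumed output format: "... Path: <savepoint-path>" on the line reporting the savepoint.
SAVEPOINT_PATH = re.compile(r"Path:\s*(\S+)")


def stop_argv(job_id: str) -> List[str]:
    """argv for `flink stop <job-id>`, run in a one-off container of the `client` service."""
    return ["docker-compose", "run", "--no-deps", "client", "flink", "stop", job_id]


def savepoint_path(cli_output: str) -> Optional[str]:
    """Extract the savepoint path from the output of `flink stop`, or None if absent."""
    match = SAVEPOINT_PATH.search(cli_output)
    return match.group(1) if match else None


def stop_job(job_id: str, cwd: str = "operations-playground") -> Optional[str]:
    """Stop the job with a savepoint and return the savepoint path it reported."""
    result = subprocess.run(stop_argv(job_id), cwd=cwd, capture_output=True, text=True, check=True)
    return savepoint_path(result.stdout)
```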

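The savepoint has been stored in the `state.savepoints.dir` directory configured in flink-conf.yaml, which is mounted at /tmp/flink-savepoints-directory/ on your local machine. You will need the path to this savepoint in the next step. The CLI prints the path in its output, and with the REST API the path is part of the response to the savepoint status request. You can also look it up in the file system directly.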

- REST API

Request

Expected response (pretty-printed)

Request

Expected response (pretty-printed)
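Through the REST API, stopping with a savepoint is asynchronous:

- `POST /jobs/<job-id>/stop` returns a trigger id in `request-id`.
- `GET /jobs/<job-id>/savepoints/<trigger-id>` reports the status until it is `COMPLETED`. At that point `operation.location` holds the savepoint path.

Below is a standard-library sketch of this flow. The request and response shapes follow the Flink REST API reference, and the polling interval and timeout are arbitrary choices.

```python
import json
import time
import urllib.request
from typing import Optional

BASE_URL = "http://localhost:8081"  # JobManager REST endpoint used throughout this post


def call(url: str, body: Optional[dict] = None) -> dict:
    """GET `url`, or POST `body` as JSON when it is given; return the decoded JSON reply."""
    data = None if body is None else json.dumps(body).encode("utf-8")
    request = urllib.request.Request(url, data=data, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def savepoint_location(status: dict) -> Optional[str]:
    """Savepoint path from a savepoint status reply, or None while it is still in progress."""
    if status["status"]["id"] != "COMPLETED":
        return None
    operation = status["operation"]
    if "failure-cause" in operation:
        raise RuntimeError(f"savepoint failed: {operation['failure-cause']}")
    return operation["location"]


def stop_with_savepoint(job_id: str, base: str = BASE_URL, timeout: float = 60.0) -> str:
    """Stop a job with a savepoint through the REST API and return the savepoint path."""
    # The stop request answers with a trigger id for the asynchronous operation.
    trigger_id = call(f"{base}/jobs/{job_id}/stop", {"drain": False})["request-id"]
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        # The status reports IN_PROGRESS until the savepoint has been written.
        location = savepoint_location(call(f"{base}/jobs/{job_id}/savepoints/{trigger_id}"))
        if location is not None:
            return location
        time.sleep(1.0)
    raise TimeoutError(f"savepoint for job {job_id} not completed within {timeout} s")
```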

Step 2a: Restart the job without changes

You can now restart the upgraded job from this savepoint. For simplicity, restart it without any changes.

- CLI

Command

Expected output
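The command below follows the upstream Flink operations playground docs, which resubmit the jar with `flink run -s <savepoint-path> -d`. The jar path and program arguments are assumptions, so adjust them to your docker-compose.yaml. The optional parallelism argument adds `-p`, which is what step 2b below uses for rescaling. A sketch:

```python
import subprocess
from typing import List, Optional

# Assumptions taken from the upstream playground: jar path and program arguments.
JAR = "/opt/ClickCountJob.jar"
PROGRAM_ARGS: List[str] = ["--bootstrap.servers", "kafka:9092", "--checkpointing", "--event-time"]


def resubmit_argv(savepoint: str, parallelism: Optional[int] = None) -> List[str]:
    """argv for `flink run -s <savepoint> [-p N] -d <jar> <args>` in a `client` container."""
    argv = ["docker-compose", "run", "--no-deps", "client", "flink", "run", "-s", savepoint]
    if parallelism is not None:
        argv += ["-p", str(parallelism)]  # rescale: new parallelism for the restored job
    argv += ["-d", JAR, *PROGRAM_ARGS]   # -d: detached, return once the job is submitted
    return argv


def resubmit(savepoint: str, parallelism: Optional[int] = None, cwd: str = "operations-playground") -> str:
    """Submit the job from a savepoint and return the CLI output, which should include the new JobID."""
    result = subprocess.run(resubmit_argv(savepoint, parallelism), cwd=cwd, capture_output=True, text=True, check=True)
    return result.stdout
```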

- REST API

Request

Expected response (pretty-printed)

Request

Expected output
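The REST variant runs a jar that has already been uploaded to the JobManager. The upstream docs upload `/opt/ClickCountJob.jar` from the client container with a POST to `/jars/upload`. The sketch below looks the jar up via `GET /jars` and restores the job with `POST /jars/<jar-id>/run`. The jar name and program arguments are assumptions taken from the upstream playground. The optional `parallelism` field is the one step 2b changes.

```python
import json
import urllib.request
from typing import List, Optional

# Assumptions taken from the upstream playground: jar file name and program arguments.
JAR_NAME = "ClickCountJob.jar"
PROGRAM_ARGS: List[str] = ["--bootstrap.servers", "kafka:9092", "--checkpointing", "--event-time"]


def jar_id(jars: dict, name: str = JAR_NAME) -> str:
    """Id of an uploaded jar, taken from a GET /jars reply."""
    for entry in jars["files"]:
        if entry["name"] == name:
            return entry["id"]
    raise LookupError(f"{name} has not been uploaded to the JobManager")


def run_body(savepoint: str, parallelism: Optional[int] = None) -> dict:
    """Body for POST /jars/<jar-id>/run that restores the job from `savepoint`."""
    body: dict = {"savepointPath": savepoint, "programArgsList": PROGRAM_ARGS}
    if parallelism is not None:
        body["parallelism"] = parallelism  # only sent when rescaling
    return body


def resubmit(savepoint: str, parallelism: Optional[int] = None, base: str = "http://localhost:8081") -> str:
    """Restore the job from a savepoint through the REST API and return the new job id."""
    with urllib.request.urlopen(f"{base}/jars") as response:
        jid = jar_id(json.load(response))
    data = json.dumps(run_body(savepoint, parallelism)).encode("utf-8")
    request = urllib.request.Request(f"{base}/jars/{jid}/run", data=data, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)["jobid"]
```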

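Once the job is RUNNING again, you will see in the `output` topic that records are produced at a higher rate while the job works through the backlog that built up during the interruption. You will also see that no data was lost during the upgrade: all windows are present, each with a count of exactly 1000.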
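The "no data lost" check can be automated over the lines the console consumer prints. This sketch assumes each output record is a JSON object with at least `page` and `count` fields, as in the upstream playground's output format; verify this against your own `output` topic. It counts windows per page and collects any record whose count is not 1000.

```python
import json
from collections import Counter
from typing import Iterable, List, Tuple


def check_output(lines: Iterable[str], expected: int = 1000) -> Tuple[Counter, List[dict]]:
    """Count windows per page and collect the records whose count is not `expected`.

    Assumes one JSON object per line with at least `page` and `count` fields.
    """
    windows_per_page: Counter = Counter()
    off: List[dict] = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        record = json.loads(line)
        windows_per_page[record["page"]] += 1
        if record["count"] != expected:
            off.append(record)
    return windows_per_page, off
```

After an upgrade or rescale with checkpointing enabled, the second return value should be an empty list.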

Step 2b: Restart the job with a different parallelism (rescaling)

Alternatively, you can rescale the job from this savepoint by passing a different parallelism when you resubmit it.

- CLI


Expected output

- REST API

Request

Expected response (pretty-printed)

Request

Expected response (pretty-printed)

The job has now been resubmitted, but it will not start, because there are not enough TaskSlots to run it at the increased parallelism (2 available, 3 needed).

You can add a second TaskManager with two TaskSlots to the Flink cluster, and it registers with the JobManager automatically. Shortly after the TaskManager is added, the job should start running again.
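This is the slot arithmetic behind "2 available, 3 needed". Each TaskManager here offers two slots. Under Flink's default slot sharing, a job needs as many slots as its highest operator parallelism. Parallelism 3 therefore needs ceil(3 / 2) = 2 TaskManagers. The scale command in the sketch (`docker-compose up -d --scale taskmanager=2 taskmanager`) is an assumption. It only works if the `taskmanager` service does not pin a fixed `container_name`.

```python
import subprocess


def taskmanagers_needed(parallelism: int, slots_per_taskmanager: int) -> int:
    """TaskManagers required so that the cluster has at least `parallelism` slots.

    Assumes default slot sharing, where a job needs as many slots as its
    highest operator parallelism.
    """
    return (parallelism + slots_per_taskmanager - 1) // slots_per_taskmanager  # ceiling division


def scale_taskmanagers(count: int, cwd: str = "operations-playground") -> None:
    """Run `count` containers of the `taskmanager` service (assumed service name)."""
    subprocess.run(
        ["docker-compose", "up", "-d", "--scale", f"taskmanager={count}", "taskmanager"],
        cwd=cwd,
        check=True,
    )
```

`scale_taskmanagers(taskmanagers_needed(3, 2))` would start the second TaskManager.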

Once the job is "RUNNING" again, you will see in the `output` topic that no data was lost during rescaling: all windows are present, each with a count of exactly 1000.

Querying job metrics

The JobManager exposes system and user metrics through its REST API.

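The endpoint depends on the scope of the metrics. The metrics scoped to a job can be listed via `jobs/<job-id>/metrics`. The actual values of metrics can be queried with the `get` query parameter.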

Request

Expected response (pretty-printed; no placeholders)
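The metric query can also be scripted. The sketch below builds the `jobs/<job-id>/metrics` URL, with or without the `get` parameter. It then turns the reply, a list of `{"id": ..., "value": ...}` objects, into a dictionary. The metric name in the tests is only an example, so list the available metrics first.

```python
import json
import urllib.parse
import urllib.request
from typing import Dict, List, Optional, Sequence


def metrics_url(job_id: str, names: Sequence[str] = (), base: str = "http://localhost:8081") -> str:
    """URL for the job-scoped metrics; without names it lists the available metric ids."""
    url = f"{base}/jobs/{job_id}/metrics"
    if names:
        # keep the commas literal so the URL matches what you would type with curl
        url += "?get=" + urllib.parse.quote(",".join(names), safe=",")
    return url


def metric_values(body: List[dict]) -> Dict[str, Optional[str]]:
    """Map metric id -> value; listing replies carry ids only, so their values are None."""
    return {entry["id"]: entry.get("value") for entry in body}


def query_metrics(job_id: str, names: Sequence[str] = (), base: str = "http://localhost:8081") -> Dict[str, Optional[str]]:
    with urllib.request.urlopen(metrics_url(job_id, names, base)) as response:
        return metric_values(json.load(response))
```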

Besides querying metrics, the REST API can also retrieve detailed information about the state of a running job.

Request

Expected response (pretty-printed)
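Job details come from `GET /jobs/<job-id>`. Among other fields, the reply has the job's `state` and a `vertices` list with each vertex's `name`, `parallelism` and `status`. After the rescaling step, this is a quick way to see which parallelism each vertex now runs with. A sketch:

```python
import json
import urllib.request
from typing import List, Tuple


def job_details(job_id: str, base: str = "http://localhost:8081") -> dict:
    """GET /jobs/<job-id> and decode the JSON body."""
    with urllib.request.urlopen(f"{base}/jobs/{job_id}") as response:
        return json.load(response)


def vertex_summary(details: dict) -> List[Tuple[str, int, str]]:
    """(name, parallelism, status) for every vertex of a job-details reply."""
    return [(v["name"], v["parallelism"], v["status"]) for v in details["vertices"]]
```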

See the REST API reference for the full list of possible queries, including how to query metrics of other scopes (for example, TaskManager metrics).

Variants

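You may have noticed that the Click Event Count application is always started with the `--checkpointing` and `--event-time` program arguments. By omitting them from the command of the client container in docker-compose.yaml, you can change the behavior of the job.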
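This sketch assumes the client service's command has the upstream form `flink run -d /opt/ClickCountJob.jar --bootstrap.servers kafka:9092 --checkpointing --event-time`. Under that assumption, each variant comes down to dropping arguments from that string. The function builds the command for a given combination, and any further program argument goes in `extra`.

```python
import shlex
from typing import List, Sequence

# Assumption: the fixed part of the client command in the upstream docker-compose.yaml.
BASE_COMMAND: List[str] = ["flink", "run", "-d", "/opt/ClickCountJob.jar", "--bootstrap.servers", "kafka:9092"]


def client_command(checkpointing: bool = True, event_time: bool = True, extra: Sequence[str] = ()) -> str:
    """Build the client container's command string with the chosen program arguments."""
    args = list(BASE_COMMAND)
    if checkpointing:
        args.append("--checkpointing")  # enable checkpointing (fault tolerance)
    if event_time:
        args.append("--event-time")     # window by ClickEvent timestamps, not wall-clock time
    args.extend(extra)                  # any further program arguments
    return shlex.join(args)
```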

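`--checkpointing` enables checkpointing, Flink's fault-tolerance mechanism. If you run without it and go through the failure and recovery steps, you should see that data is actually lost. `--event-time` enables event-time semantics for the job. When it is disabled, the job assigns events to windows based on wall-clock time instead of the timestamp of each ClickEvent. As a result, the number of events per window is no longer exactly 1000.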
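Why the count stops being exactly 1000 can be seen in a simplified model of tumbling-window assignment. This is not Flink's code, and the 1000 ms window size below is an arbitrary illustration. A window starts at `t - t % size`, and the only difference between the two modes is which time `t` is: the event's own timestamp, or the time the event reaches the operator.

```python
from collections import Counter
from typing import Iterable, Tuple


def window_start(timestamp_ms: int, size_ms: int) -> int:
    """Start of the tumbling window that contains `timestamp_ms` (zero offset, timestamp >= 0)."""
    return timestamp_ms - timestamp_ms % size_ms


def counts_per_window(events: Iterable[Tuple[int, int]], size_ms: int, event_time: bool) -> Counter:
    """Count events per window start.

    Each event is (event_timestamp_ms, arrival_time_ms). With event_time=True the
    event's own timestamp picks the window; otherwise its wall-clock arrival time does.
    """
    index = 0 if event_time else 1
    return Counter(window_start(event[index], size_ms) for event in events)
```

Take three events stamped at 0, 500 and 999 ms that arrive at 10, 600 and 1005 ms. Event time puts all three in the window starting at 0. Processing time puts two there and moves the late arrival into the window starting at 1000.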

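The Click Event Count application has one more option, off by default, that you can enable to explore how the job behaves under backpressure. You can add this option to the command of the client container in docker-compose.yaml.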