Real-time data querying is becoming a modern standard. Who wants to wait until the next day or week when a decision needs to be taken now?

Apache Flink SQL is an engine that offers SQL on bounded and unbounded streams of data. The streams can come from various sources; here we picked the popular Apache Kafka, which also has its own separate SQL engine, ksqlDB.

This tutorial is based on the great Flink SQL demo “Building an End-to-End Streaming Application”, but focuses on the end-user querying experience.

Components

To keep things simple, all the pieces have been put together in a “one-click” Docker Compose project which contains:

- A Flink cluster and ksqlDB, using the configurations from the Flink SQL demo and the ksqlDB quickstart

- The Flink SQL Gateway, which allows SQL queries to be submitted via the Hue Editor (previously explained in SQL Editor for Apache Flink SQL)

- A Hue Editor already configured with the Flink and ksqlDB Editors

We also bumped the Flink version from 1.11.0 to 1.11.1, as the SQL Gateway requires it. As Flink can query various sources (Kafka, MySQL, Elasticsearch), some additional connector dependencies have also been pre-installed in the images.

Hue’s SQL Stream Editor

One-line setup

For fetching the Docker Compose configuration and starting everything:

|

|

Then those URLs will be up:

- http://localhost:8888/ Hue Editor

- http://localhost:8081/ Flink Dashboard

As well as the Flink SQL Gateway and ksqlDB APIs:

|

|

For stopping everything:

|

|

Query Experience

Note that Live SQL requires the New Editor, which is in beta. In addition to soon offering multiple statements running at the same time on the same editor page and more robustness, it also brings the live result grid.

More improvements are on the way, in particular in the SQL autocomplete and Editor 2. In the future, the Task Server with Web Sockets will allow long-running queries to run as separate tasks and prevent them from timing out in the API server.

Note

In case you have an existing Hue Editor and want to point it to Flink and ksqlDB, just activate them via this config change:

|

|

Flink SQL

The Flink documentation and its community are a mine of information. Here are two examples to get started with querying:

- A mocked stream of data

- Some real data going through a Kafka topic

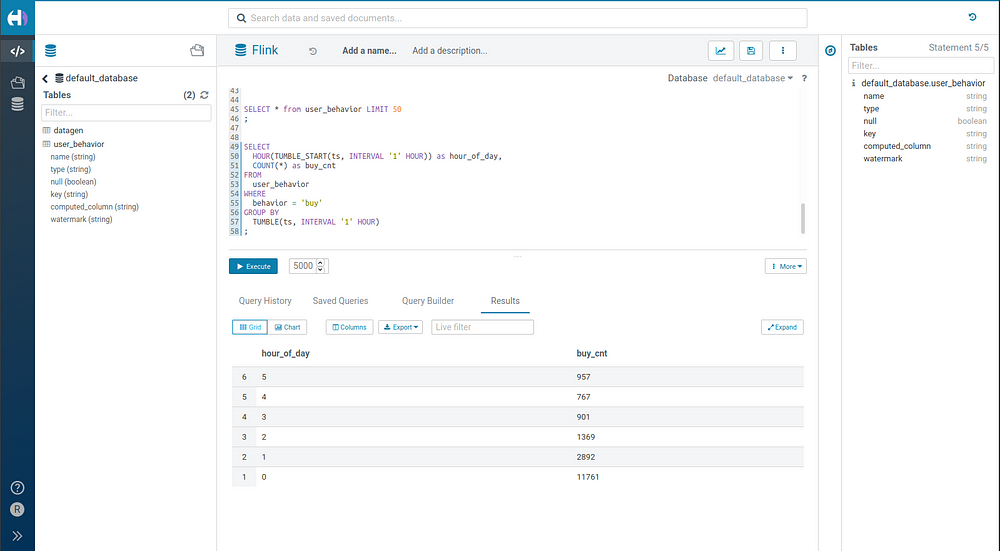

Querying live data via Flink

Hello World

This type of table is handy, as it generates records automatically:

|

|
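As a minimal sketch of such a table, assuming the `datagen` connector that ships with Flink 1.11 (the table name, column names and option values below are illustrative, not necessarily the ones used in the demo):

```sql
-- Hypothetical table: names and values are illustrative.
CREATE TABLE datagen (
  f_sequence INT,
  f_random INT,
  f_random_str STRING,
  ts AS localtimestamp,      -- computed column filled with the local timestamp
  WATERMARK FOR ts AS ts     -- declares ts as the event-time attribute
) WITH (
  'connector' = 'datagen',
  'rows-per-second' = '5',                -- how many rows are emitted per second
  'fields.f_sequence.kind' = 'sequence',  -- increasing values instead of random ones
  'fields.f_sequence.start' = '1',
  'fields.f_sequence.end' = '1000',
  'fields.f_random.min' = '1',            -- bounds for the random integers
  'fields.f_random.max' = '1000',
  'fields.f_random_str.length' = '10'     -- length of the random strings
);
```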

That can then be queried:

|

|
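For instance, assuming the generated table is named `datagen` (a hypothetical name), a first query could simply be:

```sql
-- Peek at the automatically generated rows; the limit of 50 is arbitrary.
SELECT *
FROM datagen
LIMIT 50;
```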

Tumbling

One distinctive feature of Flink is SQL querying on windows of time or objects. The main scenario is then to group the rolling blocks of records together and perform aggregations.

This example is more realistic and comes from the Flink SQL demo. The stream of records comes from the user_behavior Kafka topic:

|

|
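A sketch of what such a table definition can look like with the Flink 1.11 Kafka connector. The column layout follows the record format of the Flink SQL demo, and the broker address `kafka:9094` is an assumption about the Docker Compose network, so adjust both to your setup:

```sql
-- Assumed schema and broker address; adapt to the actual topic content.
CREATE TABLE user_behavior (
  user_id BIGINT,
  item_id BIGINT,
  category_id BIGINT,
  behavior STRING,
  ts TIMESTAMP(3),
  proctime AS PROCTIME(),                       -- processing-time attribute
  WATERMARK FOR ts AS ts - INTERVAL '5' SECOND  -- event-time attribute, tolerating 5s of lateness
) WITH (
  'connector' = 'kafka',
  'topic' = 'user_behavior',
  'scan.startup.mode' = 'earliest-offset',        -- read the topic from the beginning
  'properties.bootstrap.servers' = 'kafka:9094',  -- assumed broker address
  'format' = 'json'
);
```

The `WATERMARK` clause is what makes `ts` usable in time-window aggregations.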

Poke at some raw records:

|

|

Or perform a live count of the number of orders happening in each hour of the day:

|

|
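As an illustration of a tumbling window, here is a hedged sketch that counts records per one-hour window. It assumes a `user_behavior` table whose `ts` column is declared as an event-time attribute, and that orders are the records with `behavior = 'buy'`, as in the Flink SQL demo dataset:

```sql
-- One result row per one-hour tumbling window.
SELECT
  HOUR(TUMBLE_START(ts, INTERVAL '1' HOUR)) AS hour_of_day,  -- hour at which the window starts
  COUNT(*) AS buy_cnt                                        -- number of orders in that window
FROM user_behavior
WHERE behavior = 'buy'
GROUP BY TUMBLE(ts, INTERVAL '1' HOUR);
```

Each window is emitted once the watermark passes its end, so the result grid fills up hour by hour as records flow in.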

ksql

One nicety of ksqlDB is its close integration with Kafka. For example, we can list the topics:

|

|

The SQL syntax is a bit different, but here is one way to create a table similar to the one above:

|

|
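A minimal ksqlDB sketch, assuming the `user_behavior` topic already exists and carries JSON records with the fields below (the column list is an assumption based on the Flink SQL demo data, with the timestamp kept as a plain string for simplicity):

```sql
-- Declares a stream over the existing Kafka topic; no data is copied.
CREATE STREAM user_behavior (
  user_id BIGINT,
  item_id BIGINT,
  category_id BIGINT,
  behavior STRING,
  ts STRING
) WITH (
  kafka_topic = 'user_behavior',
  value_format = 'json'
);
```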

And peek at it:

|

|
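For example, assuming a stream named `user_behavior` has been declared over the topic, a push query along these lines keeps returning rows as they arrive, until the (arbitrary) limit is reached:

```sql
-- EMIT CHANGES turns the statement into a continuous (push) query.
SELECT *
FROM user_behavior
EMIT CHANGES
LIMIT 30;
```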

In another statement within Hue’s Editor or by booting the SQL shell:

|

|

You can also insert your own records and notice the live updates of the results:

|

|
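A hedged example of such an insert, assuming a `user_behavior` stream with the columns below; the values are made up purely for illustration:

```sql
-- Hypothetical record: column names and values are illustrative.
INSERT INTO user_behavior (user_id, item_id, category_id, behavior, ts)
VALUES (1, 10, 20, 'buy', '2020-07-21T10:00:00Z');
```

If a push query on the same stream is running in another statement, the new row should show up in its result grid shortly after.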

In the next episodes, we will demo how to easily create tables directly from raw streams of data via the importer.

Any feedback or questions? Feel free to comment here!

All these projects are also open source and welcome feedback and contributions. In the case of the Hue editor, the Forum or GitHub issues are good places for that.

Onwards!

Romain