
wget https://doris-build-1308700295.cos.ap-beijing.myqcloud.com/regression/index/hacknernews_1m.csv.gz

curl --location-trusted -u root: -H "compress_type:gz" -T hacknernews_1m.csv.gz http://localhost:8030/api/test_inverted_index/hackernews_1m/_stream_load

{
    "TxnId": 2,
    "Label": "a8a3e802-2329-49e8-912b-04c800a461a6",
    "TwoPhaseCommit": "false",
    "Status": "Success",
    "Message": "OK",
    "NumberTotalRows": 1000000,
    "NumberLoadedRows": 1000000,
    "NumberFilteredRows": 0,
    "NumberUnselectedRows": 0,
    "LoadBytes": 130618406,
    "LoadTimeMs": 8988,
    "BeginTxnTimeMs": 23,
    "StreamLoadPutTimeMs": 113,
    "ReadDataTimeMs": 4788,
    "WriteDataTimeMs": 8811,
    "CommitAndPublishTimeMs": 38
}
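curl's exit code does not tell you whether the load succeeded. Without `--fail`, curl does not act on the HTTP status, and the outcome is reported in the `Status` field of the JSON body. For scripted loads, a small check on that body can help. The helper below is a minimal sketch: `stream_load_ok` is a hypothetical name, not a Doris tool. It uses only `sed`, so it assumes each key appears once and string values contain no double quotes; `jq` would be the more robust choice where it is installed.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Doris): read a Stream Load JSON response on
# stdin and return 0 only if "Status" is "Success" and every row was loaded.
# Assumes each key appears once and string values contain no double quotes.
stream_load_ok() {
  local resp status total loaded
  resp=$(cat)
  status=$(printf '%s\n' "$resp" | sed -n 's/.*"Status": *"\([^"]*\)".*/\1/p')
  total=$(printf '%s\n' "$resp" | sed -n 's/.*"NumberTotalRows": *\([0-9][0-9]*\).*/\1/p')
  loaded=$(printf '%s\n' "$resp" | sed -n 's/.*"NumberLoadedRows": *\([0-9][0-9]*\).*/\1/p')
  [ "$status" = "Success" ] && [ -n "$total" ] && [ "$total" = "$loaded" ]
}

# Usage with the load command from this note (-s hides curl's progress meter):
#   curl -s --location-trusted -u root: -H "compress_type:gz" \
#     -T hacknernews_1m.csv.gz \
#     http://localhost:8030/api/test_inverted_index/hackernews_1m/_stream_load \
#     | stream_load_ok && echo "load ok" || echo "load failed"
```

This check is deliberately stricter than Doris itself. A load that Doris reports as `Success` but that filtered some rows is treated as a failure here, because `NumberLoadedRows` no longer equals `NumberTotalRows`.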

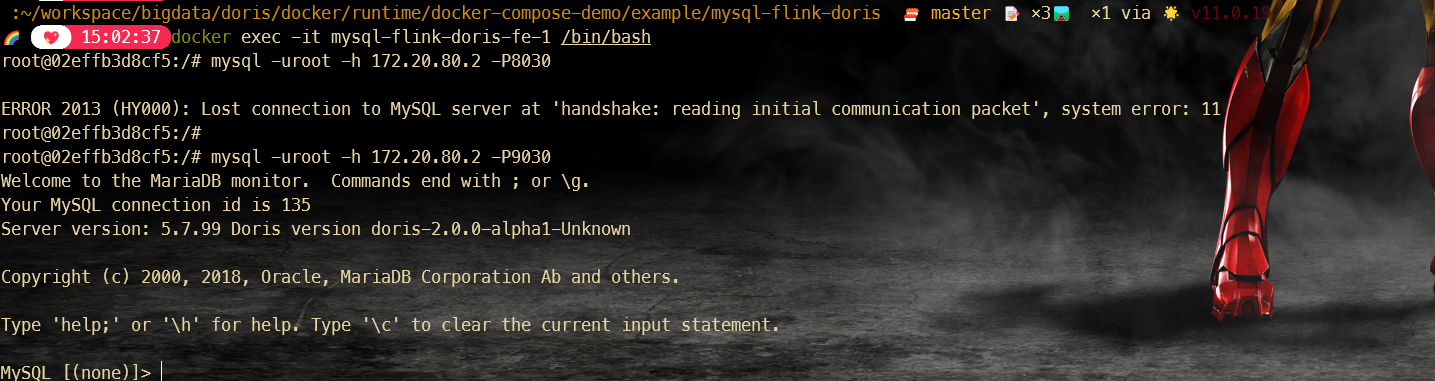

Running the same load against the cluster at 10.7.20.42:32130 failed:

curl --location-trusted -u root: -H "compress_type:gz" -T hacknernews_1m.csv.gz http://10.7.20.42:32130/api/test_inverted_index/hackernews_1m/_stream_load

{
    "TxnId": 2,
    "Label": "2afbc117-1e66-4d87-a2df-8249b8fdfbf9",
    "TwoPhaseCommit": "false",
    "Status": "Fail",
    "Message": "[ANALYSIS_ERROR]errCode = 2, detailMessage = transaction [2] is already aborted. abort reason: coordinate BE is down",
    "NumberTotalRows": 1000000,
    "NumberLoadedRows": 1000000,
    "NumberFilteredRows": 0,
    "NumberUnselectedRows": 0,
    "LoadBytes": 130618406,
    "LoadTimeMs": 28038,
    "BeginTxnTimeMs": 25,
    "StreamLoadPutTimeMs": 218,
    "ReadDataTimeMs": 4530,
    "WriteDataTimeMs": 27761,
    "CommitAndPublishTimeMs": 0
}
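When a load fails, the reason appears only in the `Message` field. The following sketch prints that field and adds a pointer to the BE logs for the `coordinate BE is down` abort seen here. The helper name `stream_load_diagnose` is hypothetical. The log file names in the hint (`be.INFO`, `be.WARNING`) are an assumption about a default BE deployment. Like any `sed`-based parsing, it assumes string values contain no double quotes.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Doris): summarize a Stream Load response read
# from stdin. Prints "OK" and returns 0 on success; otherwise prints the Message
# field, adds a hint for the "coordinate BE is down" abort, and returns 1.
# Assumes each key appears once and string values contain no double quotes.
stream_load_diagnose() {
  local resp status msg
  resp=$(cat)
  status=$(printf '%s\n' "$resp" | sed -n 's/.*"Status": *"\([^"]*\)".*/\1/p')
  msg=$(printf '%s\n' "$resp" | sed -n 's/.*"Message": *"\([^"]*\)".*/\1/p')
  if [ "$status" = "Success" ]; then
    echo "OK"
    return 0
  fi
  echo "load failed: ${msg:-<no Message field>}"
  case "$msg" in
    *"coordinate BE is down"*)
      # The transaction was aborted because the BE coordinating the load was
      # considered down, so the cause is worth looking for in that BE's logs.
      echo "hint: inspect be.INFO / be.WARNING on the coordinating BE" ;;
  esac
  return 1
}
```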

The Doris logs show that the load failed because the BE timed out.

Checking the BE log then showed that the timeout was caused by Doris's water-mark mechanism.
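You can repeat that BE log check by searching for water-mark messages. The following is a minimal sketch. Several details are assumptions for illustration, not documented Doris log formats: the function name, the default path `be/log/be.INFO`, and the case-insensitive pattern `water[ _-]?mark`. Adjust them to match what your BE actually writes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list BE log lines that mention a water mark, with line
# numbers. The default log path and the search pattern are assumptions.
find_watermark_logs() {
  local log="${1:-be/log/be.INFO}"
  if [ ! -r "$log" ]; then
    echo "cannot read $log" >&2
    return 2
  fi
  # -i: case-insensitive; -n: prefix line numbers; -E: extended regex, so
  # "water mark", "watermark", "water_mark" and "water-mark" all match.
  grep -inE 'water[ _-]?mark' "$log"
}

# Example: find_watermark_logs /path/to/doris/be/log/be.INFO | tail -n 20
```

Like `grep` itself, the function returns 1 when nothing matches, which makes it easy to use in a shell `if`.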
