Manually installing the Kafka dependencies: download the jar files

Download http://packages.confluent.io/archive/5.3/confluent-5.3.4-2.12.zip, unzip it, locate the following jar files, and upload them to the server hadoop102:

- common-config-5.3.4.jar
- common-utils-5.3.4.jar
- kafka-avro-serializer-5.3.4.jar
- kafka-schema-registry-client-5.3.4.jar

Then install them into the local Maven repository.

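One way to install the four jars is Maven's `install:install-file` goal. The following is a minimal sketch, assuming Python 3.8+ and that the jars sit in the current directory on hadoop102. It only prints one `mvn` command per jar and does not run anything. The groupId `io.confluent` is the one Confluent publishes these artifacts under, and artifactId and version are derived from each file name. Check that these coordinates match what your pom.xml declares.

```python
import re
import shlex

# Assumption: these coordinates must match the dependencies declared in pom.xml
GROUP_ID = "io.confluent"

JARS = [
    "common-config-5.3.4.jar",
    "common-utils-5.3.4.jar",
    "kafka-avro-serializer-5.3.4.jar",
    "kafka-schema-registry-client-5.3.4.jar",
]


def parse_jar_name(filename):
    """Split 'name-1.2.3.jar' into ('name', '1.2.3')."""
    match = re.fullmatch(r"(.+)-(\d+(?:\.\d+)*)\.jar", filename)
    if match is None:
        raise ValueError(f"unexpected jar name: {filename}")
    return match.group(1), match.group(2)


def install_command(filename, group_id=GROUP_ID):
    """Build the argument list for mvn install:install-file."""
    artifact_id, version = parse_jar_name(filename)
    return [
        "mvn", "install:install-file",
        f"-Dfile={filename}",
        f"-DgroupId={group_id}",
        f"-DartifactId={artifact_id}",
        f"-Dversion={version}",
        "-Dpackaging=jar",
    ]


if __name__ == "__main__":
    # Print the commands so they can be reviewed and pasted into a shell
    for jar in JARS:
        print(shlex.join(install_command(jar)))
```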

Install JuiceFS


Install MinIO


Startup command


Start Redis as the metadata store

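Before pointing JuiceFS at Redis, it can help to confirm that the server answers. The following is a minimal sketch that uses only the Python standard library and speaks the Redis wire protocol (RESP) directly. It assumes Redis listens on hadoop102:6379 without a password. If `requirepass` is set, the reply is a `-NOAUTH` error instead of `+PONG`. The helper names `encode_command` and `redis_ping` are hypothetical.

```python
import socket


def encode_command(*parts):
    """Encode a Redis command as a RESP array of bulk strings."""
    chunks = [f"*{len(parts)}\r\n".encode()]
    for part in parts:
        data = part.encode()
        chunks.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(chunks)


def redis_ping(host="hadoop102", port=6379, timeout=3.0):
    """Send PING and return True if the server answers +PONG."""
    # Assumption: hadoop102:6379 is where the metadata Redis runs
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_command("PING"))
        reply = sock.recv(64)
    return reply.startswith(b"+PONG")
```

`redis_ping()` returns True when Redis is reachable and raises a connection error if nothing is listening on that port.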

Start JuiceFS

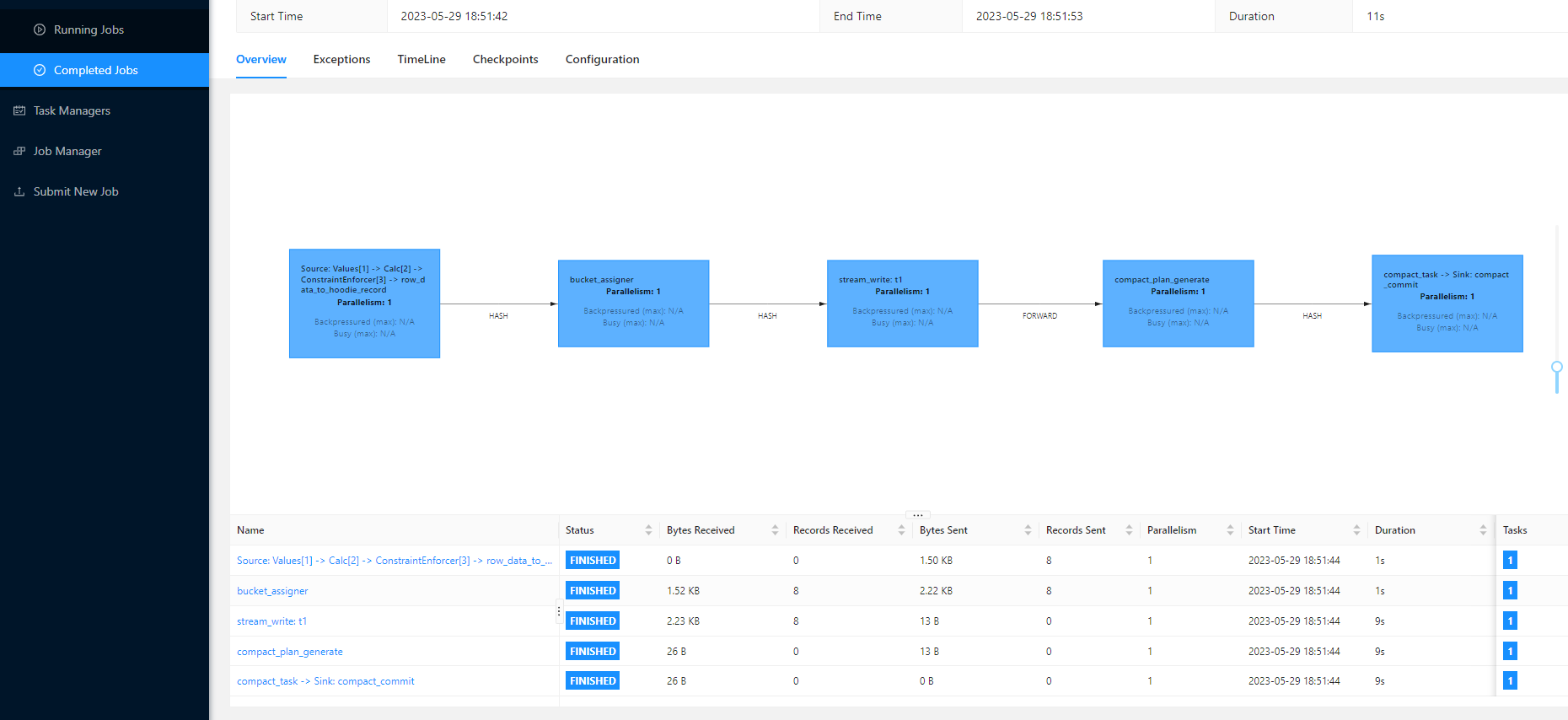
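The following is a sketch of the commands that format and mount a JuiceFS volume, using Redis as the metadata engine and MinIO as the object store. It only builds and prints the argument lists for `juicefs format` and `juicefs mount`. The values used here are all hypothetical placeholders:

- the volume name `myjfs`
- the bucket URL `http://hadoop102:9000/myjfs`
- the `minioadmin` credentials (MinIO's default)
- Redis database 1
- the mount point `/mnt/jfs`

```python
import shlex


def juicefs_meta_url(host="hadoop102", port=6379, db=1):
    """Redis metadata URL in the form JuiceFS expects: redis://host:port/db."""
    return f"redis://{host}:{port}/{db}"


def juicefs_format_cmd(meta_url, name, bucket_url, access_key, secret_key):
    """Arguments for `juicefs format` with MinIO as the object storage."""
    return [
        "juicefs", "format",
        "--storage", "minio",
        "--bucket", bucket_url,
        "--access-key", access_key,
        "--secret-key", secret_key,
        meta_url, name,
    ]


def juicefs_mount_cmd(meta_url, mount_point):
    """Arguments for mounting the volume in the background (-d)."""
    return ["juicefs", "mount", "-d", meta_url, mount_point]


if __name__ == "__main__":
    meta = juicefs_meta_url()
    # Hypothetical placeholders: replace with the real bucket, keys and mount point
    print(shlex.join(juicefs_format_cmd(
        meta, "myjfs", "http://hadoop102:9000/myjfs", "minioadmin", "minioadmin")))
    print(shlex.join(juicefs_mount_cmd(meta, "/mnt/jfs")))
```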

Start Flink

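Before starting the SQL client, the Flink REST API can confirm whether TaskManagers have registered with free slots. The following is a minimal sketch. It assumes a standalone cluster started with `bin/start-cluster.sh`, with the JobManager's REST endpoint on hadoop102 at Flink's default port 8081. It reads the `taskmanagers` and `slots-available` fields of the `/overview` response. The helpers `fetch_overview` and `cluster_ready` are hypothetical names.

```python
import json
from urllib.request import urlopen


def fetch_overview(base_url="http://hadoop102:8081"):
    """GET /overview from the Flink REST API and decode the JSON body."""
    # Assumption: hadoop102:8081 (8081 is Flink's default REST port)
    with urlopen(f"{base_url}/overview", timeout=5) as resp:
        return json.load(resp)


def cluster_ready(overview):
    """True if at least one TaskManager registered and a slot is free."""
    return (overview.get("taskmanagers", 0) > 0
            and overview.get("slots-available", 0) > 0)
```

`cluster_ready(fetch_overview())` returning False usually means the TaskManagers have not started or have not yet connected to the JobManager.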

Start the Flink SQL client


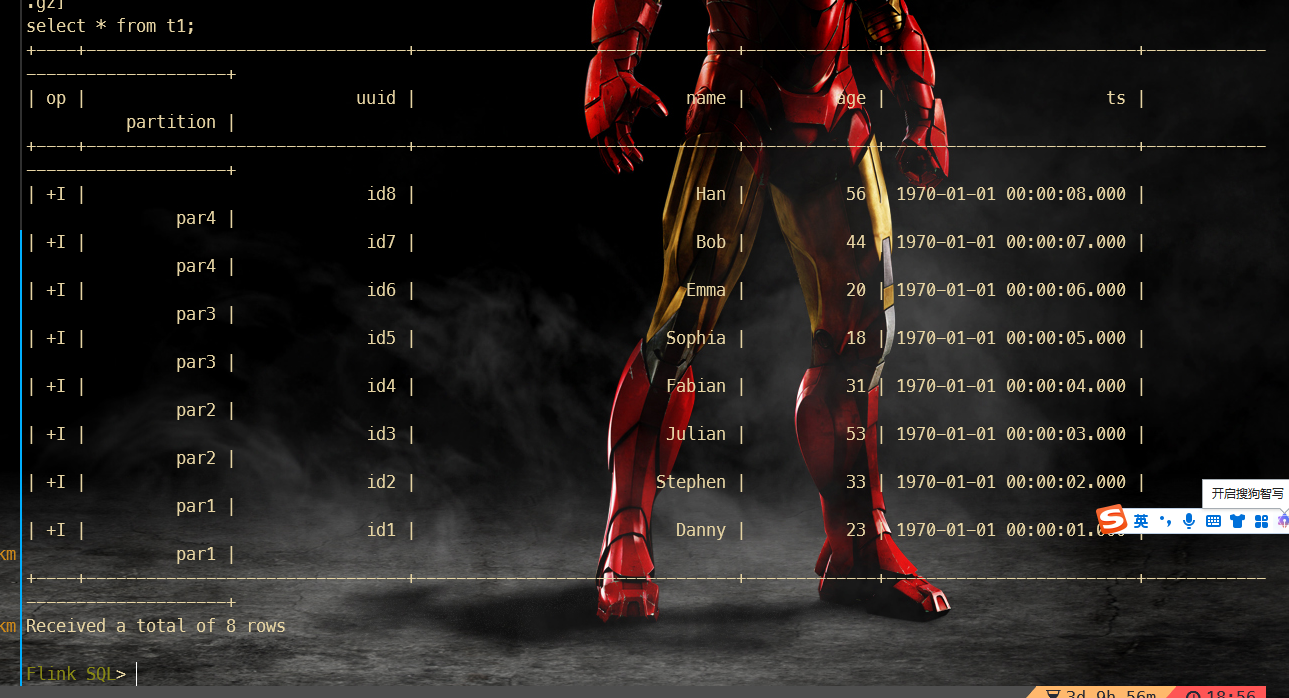

Import data


Note: if the code is compiled with JDK 11, run it on JDK 11 as well; otherwise Flink reports an error.

Fixing `java.lang.NoSuchMethodError: java.nio.ByteBuffer.flip()Ljava/nio/ByteBuffer`

Cause

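The code was compiled with a newer JDK (12) than the JRE of the runtime environment (1.8) (1). Since JDK 9, `ByteBuffer` overrides `flip()` with the covariant return type `ByteBuffer`. When the code is compiled on JDK 12 with only `source`/`target` set to 8, the call is linked against `ByteBuffer.flip()Ljava/nio/ByteBuffer;`. That method does not exist in Java 8, where `flip()` is declared only on `Buffer` and returns `Buffer`.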
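To see which JRE a compiled class requires, you can read the class-file major version from the first 8 bytes of the class file (52 = Java 8, 55 = Java 11, 56 = Java 12). The following is a minimal sketch. The helper names are hypothetical, and entries under `META-INF/` are skipped because multi-release jars deliberately carry newer classes there.

```python
import struct
import zipfile


def class_file_java_version(header):
    """Return the Java SE release a .class file targets, from its first 8 bytes."""
    magic, _minor, major = struct.unpack(">IHH", header[:8])
    if magic != 0xCAFEBABE:
        raise ValueError("not a Java class file")
    if major < 49:
        raise ValueError("pre-Java 5 class file")
    # 49 = Java 5, 52 = Java 8, 55 = Java 11, 56 = Java 12
    return major - 44


def max_java_version_in_jar(path):
    """Highest class-file version in a jar, ignoring META-INF/ entries."""
    with zipfile.ZipFile(path) as jar:
        versions = [
            class_file_java_version(jar.read(name)[:8])
            for name in jar.namelist()
            if name.endswith(".class") and not name.startswith("META-INF/")
        ]
    return max(versions, default=None)
```

This catches a JDK 11 build that is run on Java 8 (major version 55 vs. 52). It does not catch the `flip()` case. A class compiled on JDK 12 with target 8 still reports major version 52, and the mismatch lies only in the linked method signature, which is why `release` is needed.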

Solution

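Specify the Maven compiler version with `release` instead of `source`/`target`. For example, set the property `maven.compiler.release` to 8, or set `<release>8</release>` in the configuration of maven-compiler-plugin (version 3.6 or later). With `--release 8`, javac compiles against the Java 8 API. The call then links against `flip()Ljava/nio/Buffer;`, which exists on a Java 8 JRE.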

The Zing JDK does not work with Flink.