helm install my-elasticsearch bitnami/elasticsearch

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /home/xfhuang/.kube/config

WARNING: Kubernetes configuration file is world-readable. This is insecure. Location: /home/xfhuang/.kube/config

NAME: my-elasticsearch

LAST DEPLOYED: Wed May 24 18:24:23 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: elasticsearch

CHART VERSION: 19.5.12

APP VERSION: 8.6.2

WARNING

Elasticsearch requires some changes in the kernel of the host machine to

work as expected. If those values are not set in the underlying operating

system, the ES containers fail to boot with ERROR messages.

More information about these requirements can be found in the links below:

https://www.elastic.co/guide/en/elasticsearch/reference/current/file-descriptors.html

https://www.elastic.co/guide/en/elasticsearch/reference/current/vm-max-map-count.html

This chart uses a privileged initContainer to change those settings in the Kernel

by running: sysctl -w vm.max_map_count=262144 && sysctl -w fs.file-max=65536

** Please be patient while the chart is being deployed **

Elasticsearch can be accessed within the cluster on port 9200 at my-elasticsearch.default.svc.cluster.local

To access from outside the cluster execute the following commands:

kubectl port-forward --namespace default svc/my-elasticsearch 9200:9200 &

curl http://127.0.0.1:9200/
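The chart notes above name two kernel settings. Below is a minimal Python sketch for checking them on a node, assuming a Linux host that exposes the values under `/proc/sys`. The function names are illustrative and not part of the chart.

```python
from pathlib import Path

# Minimum values taken from the chart notes above.
REQUIRED = {"vm.max_map_count": 262144, "fs.file-max": 65536}


def read_sysctl(name: str) -> int:
    """Read a kernel setting from /proc/sys (Linux only)."""
    path = Path("/proc/sys") / name.replace(".", "/")
    return int(path.read_text().split()[0])


def too_low(current: dict) -> list:
    """Return the names of settings whose current value is below the minimum."""
    return sorted(k for k, minimum in REQUIRED.items() if current.get(k, 0) < minimum)
```

On a node, `too_low({name: read_sysctl(name) for name in REQUIRED})` returns an empty list when both settings are already high enough.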

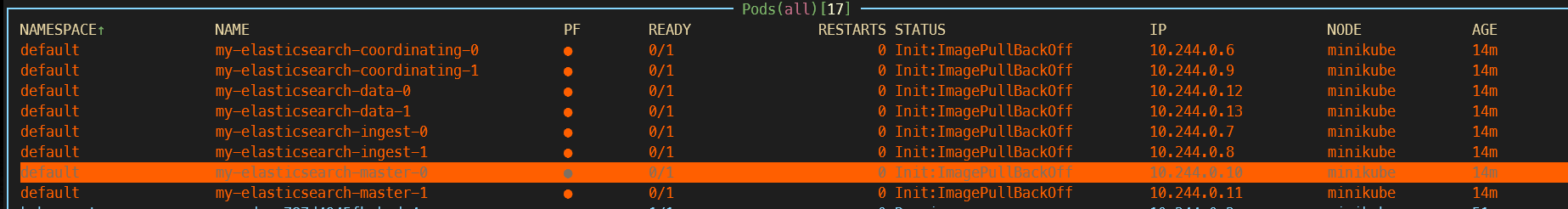

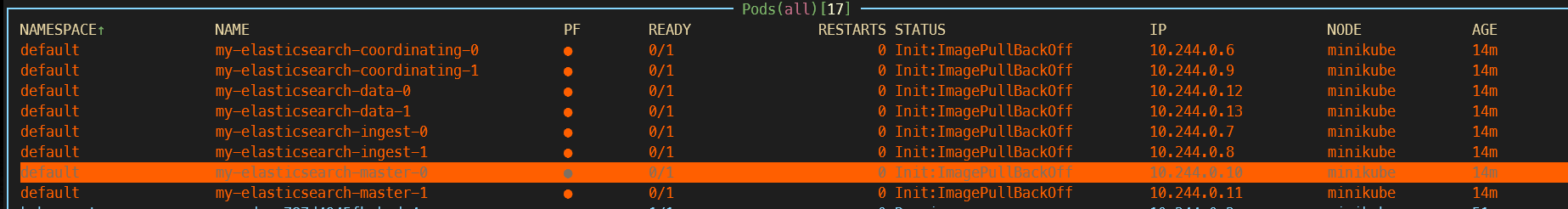

What it looks like once installed.

Import the required images:

docker pull docker.io/bitnami/bitnami-shell:11-debian-11-r87

11-debian-11-r87: Pulling from bitnami/bitnami-shell

21d94046049d: Retrying in 1 second

11-debian-11-r87: Pulling from bitnami/bitnami-shell

21d94046049d: Pull complete

Digest: sha256:89d8400519b71ff1e731cbe34b99696818c06c85341525a51d28c9cd703a0945

Status: Downloaded newer image for bitnami/bitnami-shell:11-debian-11-r87

docker.io/bitnami/bitnami-shell:11-debian-11-r87

[Wed May 24 2023 6:40PM (CST+0800)] [3:23] :~/workspace/tools/eck/2.7 took 4m17s

❯ minikube image load docker.io/bitnami/bitnami-shell:11-debian-11-r87


docker pull docker.io/bitnami/elasticsearch:8.6.2-debian-11-r2
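The pull-then-load steps above repeat for each image. Here is a small Python sketch that generates the command pairs, on the assumption that every image should be loaded into minikube the same way as the first one. `preload_commands` is a hypothetical helper name.

```python
import shlex

# Image tags copied from the commands above.
IMAGES = [
    "docker.io/bitnami/bitnami-shell:11-debian-11-r87",
    "docker.io/bitnami/elasticsearch:8.6.2-debian-11-r2",
]


def preload_commands(images):
    """Build the docker pull / minikube image load command pair for each image."""
    commands = []
    for image in images:
        commands.append(["docker", "pull", image])
        commands.append(["minikube", "image", "load", image])
    return commands


for command in preload_commands(IMAGES):
    print(shlex.join(command))
```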

References:

https://artifacthub.io/packages/helm/bitnami/elasticsearch

Reference for deploying ECK:

https://www.gooksu.com/2021/05/elastic-cloud-on-kubernetes-eck-on-minikube/

Install the Elasticsearch cluster with HTTPS enabled:

helm install elasticsearch \

--set name="logging" \

--set global.coordinating.name="coordinating-only" \

--set global.storageClass="nfs-client" \

--set security.enabled=true \

--set security.tls.autoGenerated=true \

--set security.elasticPassword="PASSWORD" \

bitnami/elasticsearch

# Update the release

helm upgrade elasticsearch \

--set global.kibanaEnabled=true \

--set kibana.elasticsearch.host="https://elasticsearch-coordinating-only:9200/" \

bitnami/elasticsearch

helm upgrade elasticsearch \

-n logging-system \

--set name="logging" \

--set global.coordinating.name="coordinating-only" \

--set global.storageClass="cephfs-rook-pv" \

--set security.enabled=true \

--set security.tls.autoGenerated=true \

--set security.elasticPassword="PASSWORD" \

-f ./values.yaml ./elasticsearch

nfs-nfs-eck

curl -u elastic:PASSWORD -k https://elasticsearch:9200/

curl -u elastic:PASSWORD -k https://elasticsearch:9200/_cat/indices
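The two curl checks above can also be run from Python. This is a sketch using only the standard library. It assumes the same placeholder credentials and skips certificate verification the way `curl -k` does, which is only reasonable with the auto-generated test certificates.

```python
import base64
import ssl
import urllib.request


def build_request(url: str, user: str, password: str) -> urllib.request.Request:
    """Create a GET request carrying an HTTP Basic Authorization header."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


def insecure_context() -> ssl.SSLContext:
    """TLS context that skips certificate verification, like curl -k."""
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    return context


def fetch(url: str, user: str, password: str) -> str:
    """Send the request and return the response body as text."""
    request = build_request(url, user, password)
    with urllib.request.urlopen(request, context=insecure_context()) as response:
        return response.read().decode()
```

For example, `fetch("https://elasticsearch:9200/_cat/indices", "elastic", "PASSWORD")` would return the index listing when run from inside the cluster.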

Install Kibana:

helm install kibana \

--set elasticsearch.security.auth.createSystemUser=true \

--set elasticsearch.security.auth.kibanaPassword="PASSWORD" \

--set global.storageClass="nfs-client" \

--set elasticsearch.security.auth.enabled=true \

--set elasticsearch.security.tls.enabled=true \

--set elasticsearch.security.tls.existingSecret=elasticsearch-coordinating-crt \

--set "elasticsearch.hosts[0]=elasticsearch,elasticsearch.port=9200" \

--set elasticsearch.security.auth.elasticsearchPasswordSecret=elasticsearch \

--set elasticsearch.security.tls.usePemCerts=true \

--set elasticsearch.security.tls.passwordsSecret=elasticsearch-coordinating-crt \

bitnami/kibana

# Offline install command

helm install kibana \

--set elasticsearch.security.auth.createSystemUser=true \

--set elasticsearch.security.auth.kibanaPassword="PASSWORD" \

--set global.storageClass="cephfs-rook-pv" \

--set elasticsearch.security.auth.enabled=true \

--set elasticsearch.security.tls.enabled=true \

--set elasticsearch.security.tls.existingSecret=elasticsearch-coordinating-crt \

--set "elasticsearch.hosts[0]=elasticsearch,elasticsearch.port=9200" \

--set elasticsearch.security.auth.elasticsearchPasswordSecret=elasticsearch \

--set elasticsearch.security.tls.usePemCerts=true \

--set elasticsearch.security.tls.passwordsSecret=elasticsearch-coordinating-crt \

-f values-kibana.yaml ./kibana -n logging-system
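Long `--set` chains like the ones above are easy to break with a missing space or an unquoted bracket. One possible approach, sketched here, is to build the argument list from a mapping and let `shlex.join` handle the quoting. `helm_install_command` is a hypothetical helper, and only three of the values are shown.

```python
import shlex


def helm_install_command(release, chart, values, namespace=None):
    """Build a helm install argument list from a mapping of chart values."""
    command = ["helm", "install", release]
    if namespace:
        command += ["--namespace", namespace]
    for key, value in values.items():
        command += ["--set", f"{key}={value}"]
    command.append(chart)
    return command


kibana_values = {
    "elasticsearch.hosts[0]": "elasticsearch",
    "elasticsearch.port": 9200,
    "elasticsearch.security.tls.enabled": "true",
}
# shlex.join quotes arguments such as hosts[0] that a shell could treat as a glob.
print(shlex.join(helm_install_command("kibana", "bitnami/kibana", kibana_values)))
```

The list form can also be passed directly to `subprocess.run`, which avoids shell quoting altogether.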

Pay attention to the various Elasticsearch settings: the configuration above sets up Elasticsearch with HTTPS support. The following operations were performed when restoring Elasticsearch data:

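1. Install the plugin Elasticsearch needs (the service must be restarted afterwards)

/usr/share/elasticsearch/bin/elasticsearch-plugin install repository-s3

2. Configure the credentials for accessing MinIO

/usr/share/elasticsearch/bin/elasticsearch-keystore add s3.client.default.access_key

User name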

/usr/share/elasticsearch/bin/elasticsearch-keystore add s3.client.default.secret_key

PUT _snapshot/my_backup

{

"type":"s3",

"settings":{

"bucket":"hxftest",

"protocol":"http",

"disable_chunked_encoding":"true",

"endpoint":"10.7.20.26:32000",

"client":"default"

}

}

POST /_nodes/reload_secure_settings

PUT _snapshot/my_backup/test1?wait_for_completion

POST /_nodes/reload_secure_settings

PUT _snapshot/my_backup

{

"type":"s3",

"settings":{

"bucket":"esbackup",

"protocol":"http",

"disable_chunked_encoding":"true",

"endpoint":"10.246.131.15:32000",

"client":"default"

}

}
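The two repository registrations above differ only in bucket and endpoint. A short Python sketch that builds the request body from those two values follows. `s3_repository_body` is a hypothetical helper and the remaining settings are copied from the requests above.

```python
import json


def s3_repository_body(bucket, endpoint, client="default", protocol="http"):
    """Build the body for PUT _snapshot/<repository> with the settings used above."""
    return {
        "type": "s3",
        "settings": {
            "bucket": bucket,
            "protocol": protocol,
            "disable_chunked_encoding": "true",
            "endpoint": endpoint,
            "client": client,
        },
    }


body = s3_repository_body("esbackup", "10.246.131.15:32000")
print(json.dumps(body, indent=2))
```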

GET _snapshot/my_backup/_all?pretty=true

Response:

{

"snapshots" : [

{

"snapshot" : "snapshot_remotes",

"uuid" : "naARO5HaTLS2xKxVoCNQUw",

"version_id" : 7120199,

"version" : "7.12.1",

"indices" : [

"remote_statistics"

],

"data_streams" : [ ],

"include_global_state" : false,

"state" : "IN_PROGRESS",

"start_time" : "2023-06-16T08:58:25.095Z",

"start_time_in_millis" : 1686905905095,

"end_time" : "1970-01-01T00:00:00.000Z",

"end_time_in_millis" : 0,

"duration_in_millis" : 0,

"failures" : [ ],

"shards" : {

"total" : 0,

"failed" : 0,

"successful" : 0

},

"feature_states" : [ ]

}

]

}

POST _snapshot/my_backup/test1/_restore

{

"indices": "demo",

"index_settings": {

"index.number_of_replicas": 1 // number of replicas

},

"rename_pattern": "demo",

"rename_replacement": "demo_s3"

}
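In the restore request above, `rename_pattern` is a regular expression and `rename_replacement` is the substitution applied to each restored index name. The Python sketch below only approximates that behaviour: Elasticsearch uses Java regex syntax, where group references are written `$1` rather than Python's `\1`. The second index name is made up for illustration.

```python
import re


def renamed_index(name: str, rename_pattern: str, rename_replacement: str) -> str:
    """Approximate the restore rename: substitute regex matches in the index name."""
    return re.sub(rename_pattern, rename_replacement, name)


print(renamed_index("demo", "demo", "demo_s3"))
print(renamed_index("logs-2023", r"logs-(\d+)", r"restored-\1"))
```

Restoring under a new name this way leaves the original `demo` index untouched, which is useful for comparing the two afterwards.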

Back up all indices:

PUT _snapshot/my_backup/snapshot_test?wait_for_completion=true

Delete an index:

#ctl exec -it elasticsearch-client-7bf748d697-gnrjz -n a -- curl -u elastic:l605eslS0mYOYEB4grNU -XDELETE http://elasticsearch-client.a:9200/demo_s3

{"acknowledged":true}

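Delete the snapshot repository (this request unregisters the repository my_backup; the snapshot data already stored in the bucket is left in place):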

# ctl exec -it elasticsearch-client-7bf748d697-gnrjz -n a -- curl -u elastic:l605eslS0mYOYEB4grNU -XDELETE http://elasticsearch-client.a:9200/_snapshot/my_backup

{"acknowledged":true}

Check the result:

## Check

# ctl exec -it elasticsearch-client-7bf748d697-gnrjz -n a -- curl -u elastic:l605eslS0mYOYEB4grNU -XGET http://elasticsearch-client.a:9200/_snapshot/s3_obs_repository/_all

{"snapshots":[]}

Problems encountered:

Deploying to the production environment failed with the following error:

][WARN ][o.e.t.TcpTransport ] [elasticsearch-ingest-0] exception caught on transport layer [Netty4TcpChannel{localAddress=/10.234.144.59:34966, remoteAddress=elasticsearch-data-hl.logging-system.svc.cluster.local/10.233.155.5:9300, profile=default}], closing connection java.lang.Exception: java.lang.OutOfMemoryError: Cannot reserve 1048576 bytes of direct buffer memory (allocated: 66157226, limit: 67108864)

at org.elasticsearch.transport.netty4@8.6.2/org.elasticsearch.transport.netty4.Netty4MessageInboundHandler.exceptionCaught(Netty4MessageInboundHandler.java:75)

at io.netty.transport@4.1.84.Final/io.netty.channel.AbstractChannelHandlerContext.invokeExceptionCaught(AbstractChannelHandlerContext.java:346)

at io.netty.transport@4.1.84.Final/io.netty.channel.AbstractChannelHandlerContext.invokeExceptionCaught(AbstractChannelHandlerContext.java:325)

at io.netty.transport@4.1.84.Final/io.netty.channel.AbstractChannelHandlerContext.fireExceptionCaught(AbstractChannelHandlerContext.java:317)

at io.netty.handler@4.1.84.Final/io.netty.handler.logging.LoggingHandler.exceptionCaught(LoggingHandler.java:214)

at io.netty.transport@4.1.84.Final/io.netty.channel.AbstractChannelHandlerContext.invokeExceptionCaught(AbstractChannelHandlerContext.java:346)

at io.netty.transport@4.1.84.Final/io.netty.channel.AbstractChannelHandlerContext.invokeExceptionCaught(AbstractChannelHandlerContext.java:325)

at io.netty.transport@4.1.84.Final/io.netty.channel.AbstractChannelHandlerContext.fireExceptionCaught(AbstractChannelHandlerContext.java:317)

at io.netty.handler@4.1.84.Final/io.netty.handler.ssl.SslHandler.exceptionCaught(SslHandler.java:1105)

at io.netty.transport@4.1.84.Final/io.netty.channel.AbstractChannelHandlerContext.invokeExceptionCaught(AbstractChannelHandlerContext.java:346)

at io.netty.transport@4.1.84.Final/io.netty.channel.AbstractChannelHandlerContext.invokeExceptionCaught(AbstractChannelHandlerContext.java:325)

at io.netty.transport@4.1.84.Final/io.netty.channel.AbstractChannelHandlerContext.fireExceptionCaught(AbstractChannelHandlerContext.java:317)

at io.netty.transport@4.1.84.Final/io.netty.channel.DefaultChannelPipeline$HeadContext.exceptionCaught(DefaultChannelPipeline.java:1377)

at io.netty.transport@4.1.84.Final/io.netty.channel.AbstractChannelHandlerContext.invokeExceptionCaught(AbstractChannelHandlerContext.java:346)

at io.netty.transport@4.1.84.Final/io.netty.channel.AbstractChannelHandlerContext.invokeExceptionCaught(AbstractChannelHandlerContext.java:325)

at io.netty.transport@4.1.84.Final/io.netty.channel.DefaultChannelPipeline.fireExceptionCaught(DefaultChannelPipeline.java:907)

at io.netty.transport@4.1.84.Final/io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.handleReadException(AbstractNioByteChannel.java:125)

at io.netty.transport@4.1.84.Final/io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:177)

at io.netty.transport@4.1.84.Final/io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:788)

at io.netty.transport@4.1.84.Final/io.netty.channel.nio.NioEventLoop.processSelectedKeysPlain(NioEventLoop.java:689)

at io.netty.transport@4.1.84.Final/io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:652)

at io.netty.transport@4.1.84.Final/io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:562)

at io.netty.common@4.1.84.Final/io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:997)

at io.netty.common@4.1.84.Final/io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at java.base/java.lang.Thread.run(Thread.java:833)

Caused by: java.lang.OutOfMemoryError: Cannot reserve 1048576 bytes of direct buffer memory (allocated: 66157226, limit: 67108864)

at java.base/java.nio.Bits.reserveMemory(Bits.java:178)

at java.base/java.nio.DirectByteBuffer.<init>(DirectByteBuffer.java:121)

at java.base/java.nio.ByteBuffer.allocateDirect(ByteBuffer.java:332)

at org.elasticsearch.transport.netty4@8.6.2/org.elasticsearch.transport.netty4.CopyBytesSocketChannel.lambda$static$0(CopyBytesSocketChannel.java:58)

at java.base/java.lang.ThreadLocal$SuppliedThreadLocal.initialValue(ThreadLocal.java:305)

at java.base/java.lang.ThreadLocal.setInitialValue(ThreadLocal.java:195)

at java.base/java.lang.ThreadLocal.get(ThreadLocal.java:172)

at org.elasticsearch.transport.netty4@8.6.2/org.elasticsearch.transport.netty4.CopyBytesSocketChannel.getIoBuffer(CopyBytesSocketChannel.java:135)

at org.elasticsearch.transport.netty4@8.6.2/org.elasticsearch.transport.netty4.CopyBytesSocketChannel.doReadBytes(CopyBytesSocketChannel.java:115)

at io.netty.transport@4.1.84.Final/io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:151)

... 7 more

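Checking the JVM flags showed -XX:MaxDirectMemorySize=67108864, which is simply the default of 64m. After several other attempts, changing the heap to 1024 MB let the node start successfully.

Why this helped remained a mystery at the time.

The JDK 17 startup arguments for Elasticsearch are: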

/opt/bitnami/java/bin/java -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -Djava.security.manager=allow -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Dlog4j2.formatMsgNoLookups=true -Djava.locale.providers=SPI,COMPAT --add-opens=java.base/java.io=ALL-UNNAMED -XX:+UseG1GC -Djava.io.tmpdir=/tmp/elasticsearch-8542210862556778447 -XX:+HeapDumpOnOutOfMemoryError -XX:+ExitOnOutOfMemoryError -XX:HeapDumpPath=data -XX:ErrorFile=logs/hs_err_pid%p.log -Xlog:gc*,gc+age=trace,safepoint:file=logs/gc.log:utctime,pid,tags:filecount=32,filesize=64m -Xms1024m -Xmx1024m -XX:MaxDirectMemorySize=536870912 -XX:G1HeapRegionSize=4m -XX:InitiatingHeapOccupancyPercent=30 -XX:G1ReservePercent=15 -Des.distribution.type=tar --module-path /opt/bitnami/elasticsearch/lib --add-modules=jdk.net -m org.elasticsearch.server/org.elasticsearch.bootstrap.Elasticsearch
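One possible reading of these numbers: when the flag is not set explicitly, Elasticsearch derives the direct memory limit as half of the heap. That matches the arguments above (`-Xmx1024m` with `-XX:MaxDirectMemorySize=536870912`). Under that assumption the earlier 64 MiB limit would correspond to a 128 MiB heap, which is an inference and not something recorded in these notes. The arithmetic, as a Python sketch:

```python
MIB = 1024 * 1024


def default_direct_memory_bytes(heap_mib: int) -> int:
    """Half of the heap, in bytes (assumed rule, see the text above)."""
    return heap_mib * MIB // 2


for heap in (128, 1024):
    print(f"-Xmx{heap}m -> -XX:MaxDirectMemorySize={default_direct_memory_bytes(heap)}")
```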

Elasticsearch index backup and restore

Reference:

https://www.cnblogs.com/zisefeizhu/p/15021086.html

Restoring indices from a snapshot:

https://www.elastic.co/guide/cn/elasticsearch/guide/current/_restoring_from_a_snapshot.html

1. Monitoring snapshot status

Use GET requests to monitor the current snapshot status. Note that if you have many snapshots and index shards and the repository storage has high latency, the _current request can take a long time.

# Show the snapshots currently running

GET /_snapshot/my_fs_backup/_current

# Look up specific snapshots

GET /_snapshot/my_fs_backup/snapshot_1

GET /_snapshot/my_fs_backup/snapshot_*

# List all repositories (if more than one has been registered)

GET /_snapshot/_all

GET /_snapshot/my_fs_backup,my_hdfs_backup

GET /_snapshot/my*

# Show detailed progress for a single snapshot

GET /_snapshot/my_fs_backup/snapshot_1/_status

# The response is large, so only part of it is shown below. For the full field reference see: https://www.elastic.co/guide/en/elasticsearch/reference/current/get-snapshot-status-api.html

{

"snapshots": [

{

"snapshot": "snapshot_1",

"repository": "my_fs_backup",

"uuid": "HQHFSpPoQ1aY4ykm2o-a0Q",

"state": "SUCCESS",

"include_global_state": true,

"shards_stats": {

"initializing": 0,

"started": 0,

"finalizing": 0,

"done": 3,

"failed": 0,

"total": 3

},

"stats": {

"incremental": {

"file_count": 3,

"size_in_bytes": 624

},

"total": {

"file_count": 3,

"size_in_bytes": 624

},

"start_time_in_millis": 1630673216237,

"time_in_millis": 0

},

"indices": {

"index_1": {

"shards_stats": {

"initializing": 0,

"started": 0,

"finalizing": 0,

"done": 1,

"failed": 0,

"total": 1

},

"stats": {

"incremental": {

"file_count": 1,

"size_in_bytes": 208

},

"total": {

"file_count": 1,

"size_in_bytes": 208

},

"start_time_in_millis": 1630673216237,

"time_in_millis": 0

},

"shards": {

"0": {

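# initializing: checking whether the cluster state allows the snapshot to be created

# started: data is being transferred to the repository

# finalizing: data transfer is complete and the shard is sending snapshot metadata

# done: the snapshot is complete

# failed: shards that hit an error and failed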

"stage": "DONE",

"stats": {

"incremental": {

"file_count": 1,

"size_in_bytes": 208

},

"total": {

"file_count": 1,

"size_in_bytes": 208

},

"start_time_in_millis": 1630673216237,

"time_in_millis": 0

}

}

}

# Remaining results omitted...

}

}

}

]

}
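When polling the `_status` endpoint, usually only the state and shard counts matter. The following Python sketch pulls those fields out of a response shaped like the one above. `shard_progress` is a hypothetical helper and the sample is a trimmed copy of that response.

```python
def shard_progress(status_response: dict) -> dict:
    """Map each snapshot name to (state, done shards, total shards)."""
    progress = {}
    for snapshot in status_response.get("snapshots", []):
        stats = snapshot["shards_stats"]
        progress[snapshot["snapshot"]] = (snapshot["state"], stats["done"], stats["total"])
    return progress


# Trimmed copy of the response shown above.
sample = {
    "snapshots": [
        {
            "snapshot": "snapshot_1",
            "state": "SUCCESS",
            "shards_stats": {"done": 3, "failed": 0, "total": 3},
        }
    ]
}
print(shard_progress(sample))
```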

2. Monitoring restore status

Once a restore starts, the cluster status turns yellow because the restore is recovering the primary shards of the index. After the primaries are restored, Elasticsearch recovers the replicas according to the configured replica settings, and the cluster returns to green only when all of this has finished. Alternatively, set the index's replica count to 0 first and raise it to the target value once the primaries are done. Restore progress can be followed through the recovery status of the whole cluster or of a specific index.

# Cluster-wide recovery status; for more, see the cat recovery API: https://www.elastic.co/guide/en/elasticsearch/reference/current/cat-recovery.html

GET /_cat/recovery/

# Recovery status of a single index; for more, see the index recovery API: https://www.elastic.co/guide/en/elasticsearch/reference/current/indices-recovery.html

GET /index_1/_recovery

# Response

{

"restore_lakehouse": {

"shards": [

{

"id": 1,

"type": "SNAPSHOT",

"stage": "INDEX",

"primary": true,

"start_time_in_millis": 1630673216237,

"total_time_in_millis": 1513,

"source": {

"repository": "my_fs_backup",

"snapshot": "snapshot_3",

"version": "7.10.0",

"index": "index_1",

"restoreUUID": "fLtPIdOORr-3E7AtEQ3nFw"

},

"target": {

"id": "8Z7MmAUeToq6WGCxhVFk8A",

"host": "127.0.0.1",

"transport_address": "127.0.0.1:9300",

"ip": "127.0.0.1",

"name": "jt-hpzbook"

},

"index": {

"size": {

"total_in_bytes": 25729623,

"reused_in_bytes": 0,

"recovered_in_bytes": 23397681,

"percent": "90.9%"

},

"files": {

"total": 50,

"reused": 0,

"recovered": 43,

"percent": "86.0%"

},

"total_time_in_millis": 1488,

"source_throttle_time_in_millis": 0,

"target_throttle_time_in_millis": 0

},

"translog": {

"recovered": 0,

"total": 0,

"percent": "100.0%",

"total_on_start": 0,

"total_time_in_millis": 0

},

"verify_index": {

"check_index_time_in_millis": 0,

"total_time_in_millis": 0

}

}

# Details of the other shards omitted...

]

}

}
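The `percent` fields in the recovery response can be recomputed from the raw counters, which helps when aggregating over many shards. A Python sketch using the numbers from the response above (`recovery_percent` is a hypothetical helper):

```python
def recovery_percent(shard: dict) -> dict:
    """Compute byte and file recovery percentages for one shard entry."""
    size = shard["index"]["size"]
    files = shard["index"]["files"]

    def percent(done: int, total: int) -> float:
        # An empty shard has nothing to copy, so treat it as complete.
        return 100.0 if total == 0 else round(100.0 * done / total, 1)

    return {
        "bytes": percent(size["recovered_in_bytes"], size["total_in_bytes"]),
        "files": percent(files["recovered"], files["total"]),
    }


# Numbers copied from the response shown above.
shard = {
    "index": {
        "size": {"total_in_bytes": 25729623, "recovered_in_bytes": 23397681},
        "files": {"total": 50, "recovered": 43},
    }
}
print(recovery_percent(shard))
```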

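Other data synchronization tools

- Kettle: a free, open-source, Java-based enterprise ETL tool that is powerful and easy to use; it only supports writing to Elasticsearch. See: Kettle GitHub

- DataX: the open-source version of Alibaba Cloud DataWorks Data Integration. It provides efficient data synchronization between many heterogeneous data sources and currently only supports writing to Elasticsearch. See: DataX GitHub

- Flinkx: a unified batch and streaming data synchronization tool built on Flink; it supports both reading from and writing to Elasticsearch. See: Flinkx GitHub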

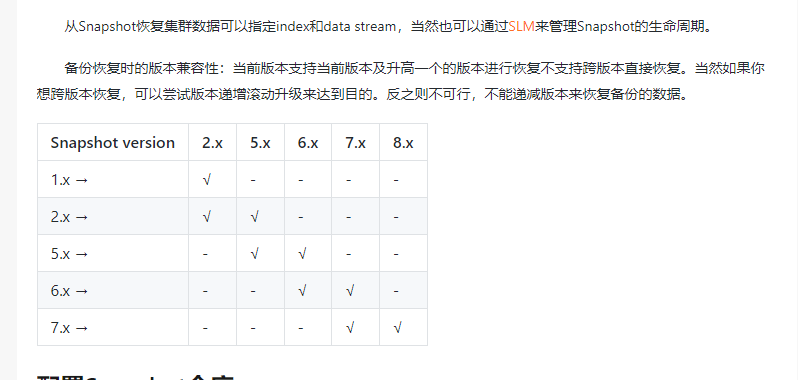

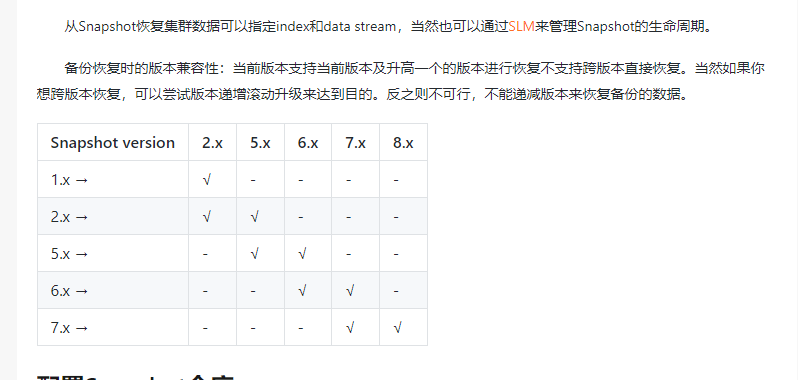

A backup cannot be restored across arbitrary versions (for example, not into a cluster running an older Elasticsearch version than the one that took the snapshot).

Command to restore indices:

POST _snapshot/my_backup2/snapshot_shenyu/_restore

Response:

{

"accepted": true

}

# Check progress

GET /_recovery/

The response looks like this:

{

"phy": {

"shards": [

{

"id": 0,

"type": "SNAPSHOT",

"stage": "INDEX",

"primary": true,

"start_time_in_millis": 1687156549430,

"total_time_in_millis": 84896,

"source": {

"repository": "my_backup2",

"snapshot": "snapshot_shenyu",

"version": "7.12.1",

"index": "phy",

"restoreUUID": "tlcnb19BRdmZq85I0XX0LA"

},

"target": {

"id": "dMsoIG4WS4qyqBa4hBN0ag",

"host": "elasticsearch-data-1.elasticsearch-data-hl.logging-system.svc.cluster.local",

"transport_address": "10.234.221.171:9300",

"ip": "10.234.221.171",

"name": "elasticsearch-data-1"

},

"index": {

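Cancelling a restore

To cancel a restore, delete the indices that are being restored. Because a restore is really just shard recovery, sending a delete index API request changes the cluster state and stops the restore process, for example a DELETE request on the index being restored.

Kubernetes error:

] spec: Forbidden: updates to statefulset spec for fields other than 'replicas', 'template', 'updateStrategy', 'persistentVolumeClaimRetentionPolicy' and 'minReadySeconds' are forbidden

Use a different approach for scaling: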

Keep the second terminal open, and, in another terminal window scale the StatefulSet:

kubectl scale statefulset/web --replicas=4
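The error quoted earlier in this section lists the only StatefulSet spec fields that can be changed in place. Before running an upgrade, a quick diff of the old and new spec shows whether it would be rejected. This is a Python sketch that compares top-level fields only; the sample specs are made up for illustration.

```python
# Field names copied from the error message above.
MUTABLE_FIELDS = {
    "replicas",
    "template",
    "updateStrategy",
    "persistentVolumeClaimRetentionPolicy",
    "minReadySeconds",
}


def forbidden_changes(old_spec: dict, new_spec: dict) -> list:
    """List top-level spec fields that differ and are not updatable in place."""
    changed = {
        key
        for key in old_spec.keys() | new_spec.keys()
        if old_spec.get(key) != new_spec.get(key)
    }
    return sorted(changed - MUTABLE_FIELDS)


old = {"replicas": 2, "serviceName": "web", "volumeClaimTemplates": [{"storage": "8Gi"}]}
new = {"replicas": 4, "serviceName": "web", "volumeClaimTemplates": [{"storage": "16Gi"}]}
print(forbidden_changes(old, new))
```

A replica-only change yields an empty list, which is why `kubectl scale` succeeds where an upgrade that also touches the volume claim templates fails.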

Kubernetes operations

Set a taint on the master node:

kubectl taint nodes master1 node-role.kubernetes.io/master=:NoSchedule


kubectl patch node kube-node1 -p '{"spec":{"unschedulable":true}}'
