title: Rolling Your Own k8s

date: 2023-02-21T08:54:38+08:00

lastmod: 2023-02-21T08:54:38+08:00

tags:

- k8s

- kubelet

categories:

- k8s

- kubelet

Uninstalling k8s

|

# Stop kubelet first, while its unit file still exists
systemctl stop kubelet
systemctl disable kubelet
kubeadm reset -f
# Remove the packages (the glob is quoted so the shell does not expand it)
yum remove -y kubelet kubectl kubeadm kubernetes-cni 'kube*'
yum autoremove -y
yum clean all
# Remove kubeconfig, cluster state, and systemd units
rm -rf ~/.kube/
rm -rf /etc/kubernetes/
rm -rf /etc/systemd/system/kubelet.service.d
rm -rf /etc/systemd/system/kubelet.service
rm -rf /usr/bin/kube*
rm -rf /var/lib/kube*
rm -rf /var/lib/etcd /var/etcd
# Remove CNI and container runtime files
rm -rf /etc/cni /opt/cni
rm -rf /etc/containers/ /etc/containerd/
rm -rf /usr/local/lib/systemd/system/containerd.service
rm -rf /usr/local/sbin/runc
# CAUTION: this wipes everything in /usr/local/bin, not only the container runtime binaries
rm -rf /usr/local/bin/

|
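After a cleanup like the one above, it can help to confirm that nothing was left behind. The following is a minimal sketch and not part of the original notes: `list_leftovers` is a hypothetical helper that prints whichever of the given paths still exist. The root-prefix argument is an assumption added so the function can be tried against a scratch directory first.

```shell
#!/usr/bin/env bash
# list_leftovers ROOT PATH... : print each PATH that still exists under ROOT.
# ROOT is a prefix; pass "" to check the real filesystem. Hypothetical helper.
list_leftovers() {
  local root="$1"; shift
  local p
  for p in "$@"; do
    if [ -e "${root}${p}" ]; then
      printf '%s\n' "$p"
    fi
  done
}

# Example: check some of the directories removed by the script above.
# list_leftovers "" /etc/kubernetes /var/lib/kubelet /var/lib/etcd /etc/cni /opt/cni
```

Empty output means every listed path is gone.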

Completely removing kubeadm, kubectl, and kubelet

Debian / Ubuntu

|

sudo apt-get purge kubeadm kubectl kubelet kubernetes-cni 'kube*'

sudo apt-get autoremove

|

- apt-get remove deletes a package but keeps its configuration files

- apt-get purge deletes a package together with its configuration files
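The difference between the two is visible in `dpkg -l`: a package that was removed but not purged is listed with state `rc` (removed, configuration files remaining). As a small sketch, the hypothetical helper below filters `dpkg -l` output for such packages; the function name is an illustration, not something from the original notes.

```shell
# residual_config_packages: read `dpkg -l` output on stdin and print the names
# of packages in state "rc" (removed, configuration files still present).
residual_config_packages() {
  awk '$1 == "rc" { print $2 }'
}

# Example on a real Debian/Ubuntu host:
# dpkg -l | residual_config_packages
```

Any names it prints can then be passed to `apt-get purge` to remove the remaining configuration files.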

CentOS / RHEL / Fedora

|

sudo yum remove -y kubeadm kubectl kubelet kubernetes-cni 'kube*'

sudo yum autoremove -y

|

Installing k8s with a script

The installation runs inside a virtualenv

|

VENVDIR=kubespray-venv

KUBESPRAYDIR=kubespray

ANSIBLE_VERSION=2.12

virtualenv --python=$(which python3) $VENVDIR

source $VENVDIR/bin/activate

|

Command to install the cluster

|

sudo ansible-playbook -i inventory/mycluster/inventory.ini --private-key /root/.ssh/id_rsa cluster.yml -b -v

|

Adding registry mirrors to containerd on the nodes

containerd.yaml

|

---
- hosts: kube_node
  tasks:
    - name: Add registry mirrors to /etc/containerd/config.toml
      ansible.builtin.blockinfile:
        path: /etc/containerd/config.toml
        block: |
          [plugins."io.containerd.grpc.v1.cri".registry.mirrors."10.7.20.12:5000"]
            endpoint = ["http://10.7.20.12:5000"]
          [plugins."io.containerd.grpc.v1.cri".registry.mirrors."10.0.37.153:5000"]
            endpoint = ["http://10.0.37.153:5000"]
        create: yes
        state: present
      become: yes
    - name: Reboot the node so containerd picks up the new configuration
      raw: "nohup bash -c 'sleep 5s && shutdown -r now'"
      ignore_errors: true # noqa ignore-errors
      ignore_unreachable: yes

|
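Once the playbook has run, a quick check on a node can confirm that the mirror sections actually landed in the containerd configuration. This is a minimal sketch under the assumption that the section headers look exactly like the ones in the playbook; `has_mirror` is a hypothetical helper name.

```shell
# has_mirror CONFIG HOST : succeed if CONFIG contains a CRI registry mirror
# section for HOST (for example 10.7.20.12:5000). Hypothetical helper.
has_mirror() {
  # -F matches the text literally, so the dots and brackets are not patterns.
  grep -qF "registry.mirrors.\"$2\"]" "$1"
}

# Example on a node:
# has_mirror /etc/containerd/config.toml 10.7.20.12:5000 && echo "mirror configured"
```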

Command to apply the change and reboot the nodes

|

sudo ansible-playbook -i inventory/mycluster/inventory.ini --private-key /root/.ssh/id_rsa containerd.yaml

|

Installing rook-ceph

|

kubectl apply -f crds.yaml -f common.yaml -f operator.yaml

|

The storage nodes need to be labeled

|

kubectl label node k8s-node1 app.rook=storage
kubectl label node k8s-node2 app.rook=storage
kubectl label node k8s-node3 app.rook=storage
kubectl label node k8s-node1 ceph=true
kubectl label node k8s-node2 ceph=true
kubectl label node k8s-node3 ceph=true

|
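The six commands above follow one pattern, so they can be folded into a loop. The sketch below is an illustration rather than the author's method: `label_storage_nodes` and the `KUBECTL` override are hypothetical names, and `--overwrite` is added so the function can be re-run safely.

```shell
# label_storage_nodes NODE... : apply both labels used above to each node.
# KUBECTL defaults to "kubectl"; set KUBECTL="echo kubectl" for a dry run.
label_storage_nodes() {
  local node
  for node in "$@"; do
    ${KUBECTL:-kubectl} label node "$node" app.rook=storage ceph=true --overwrite
  done
}

# Example:
# label_storage_nodes k8s-node1 k8s-node2 k8s-node3
```

With `KUBECTL="echo kubectl"` the function only prints the commands it would run, which is a cheap way to review them first.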

Deploying the Ceph services

|

kubectl apply -f cluster.yaml -n rook-ceph

kubectl apply -f toolbox.yaml -n rook-ceph

kubectl apply -f myfs.yaml -n rook-ceph

kubectl apply -f pool.yaml -n rook-ceph

cephblockpool.ceph.rook.io/replicapool configured

|

|

kubectl -n rook-ceph exec -it $(kubectl -n rook-ceph get pod -l "app=rook-ceph-tools" -o jsonpath='{.items[0].metadata.name}') bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

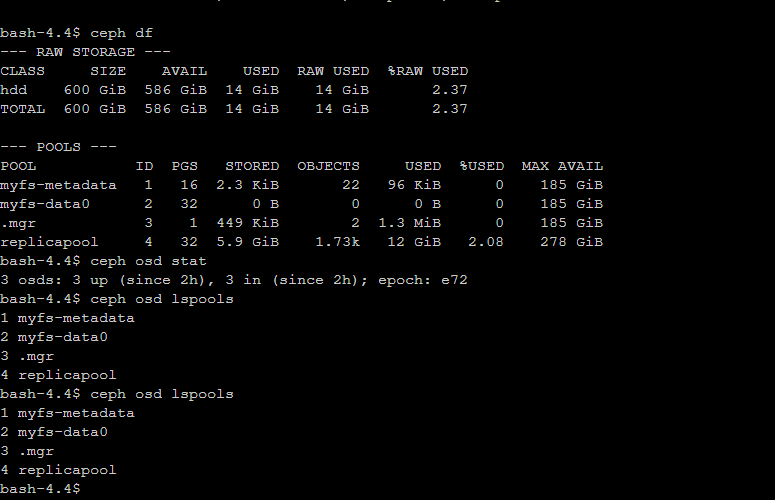

bash-4.4$ ceph status

cluster:

id: 67be90ee-c235-4ba3-9c76-d12ef161091d

health: HEALTH_WARN

clock skew detected on mon.b, mon.d

services:

mon: 3 daemons, quorum a,b,d (age 5m)

mgr: b(active, since 5m), standbys: a

mds: 1/1 daemons up, 1 hot standby

osd: 3 osds: 3 up (since 6m), 3 in (since 7m)

data:

volumes: 1/1 healthy

pools: 3 pools, 49 pgs

objects: 24 objects, 451 KiB

usage: 66 MiB used, 60 GiB / 60 GiB avail

pgs: 49 active+clean

io:

client: 853 B/s rd, 1 op/s rd, 0 op/s wr

bash-4.4$

|

cleanosd.sh

|

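# Check the disk paths
fdisk -l
# Delete the partition table
DISK="/dev/sdb"
sgdisk --zap-all "$DISK"
# Wipe the disk data: use dd for an HDD or blkdiscard for an SSD (pick one)
dd if=/dev/zero of="$DISK" bs=1M count=100 oflag=direct,dsync
# blkdiscard "$DISK"
# Remove the LVM mappings left by the old OSD. If a node has several OSDs, do not use the * wildcard; look up the exact LV mapping with lsblk -f and remove only that one.
ls /dev/mapper/ceph-* | xargs -I% -- dmsetup remove %
rm -rf /dev/ceph-*
# Reboot; sgdisk --zap-all only takes effect after a reboot
reboot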

|
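Because the script above destroys whatever is on `$DISK`, a guard that refuses to continue when the disk is still mounted may be worth adding. This is a sketch under stated assumptions: `disk_in_use` is a hypothetical helper, it uses a simple prefix match on the device name, and it does not detect LVM or device-mapper volumes that sit on top of the disk.

```shell
# disk_in_use DISK [MOUNTS] : succeed if DISK or one of its partitions appears
# as a mounted device in MOUNTS (default /proc/mounts). Hypothetical helper.
disk_in_use() {
  # index($1, d) == 1 is true when the device field starts with DISK.
  awk -v d="$1" 'index($1, d) == 1 { found = 1 } END { exit !found }' "${2:-/proc/mounts}"
}

# Example guard before wiping:
# if disk_in_use "$DISK"; then echo "$DISK is mounted, aborting" >&2; exit 1; fi
```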

|

kubectl get storageclass

|

Deploying on k3s

|

export KUBEGEMS_VERSION=v1.23.4

k3s kubectl create namespace kubegems-installer

k3s kubectl apply -f https://github.com/kubegems/kubegems/raw/${KUBEGEMS_VERSION}/deploy/installer.yaml

|

|

$ k3s kubectl create namespace kubegems

$ export STORAGE_CLASS=local-path # change this to the storageClass you use

$ curl -sL https://github.com/kubegems/kubegems/raw/${KUBEGEMS_VERSION}/deploy/kubegems.yaml \

| sed -e "s/local-path/${STORAGE_CLASS}/g" \

> kubegems.yaml

$ k3s kubectl apply -f kubegems.yaml

|

Deploying kubegems on kind

|

curl -Lo ./kind https://kind.sigs.k8s.io/dl/v0.17.0/kind-linux-amd64

chmod +x ./kind

sudo mv ./kind /usr/local/bin/kind

|

Create kind-config.yaml

|

kind: Cluster

apiVersion: kind.x-k8s.io/v1alpha4

nodes:

- role: control-plane

- role: control-plane

- role: control-plane

- role: worker

- role: worker

- role: worker

|

Create the cluster and name it kubegems

|

kind create cluster --name kubegems --config kind-config.yaml

|

|

$ helm install mysql bitnami/mysql -n db

NAME: mysql

LAST DEPLOYED: Wed Feb 22 16:35:17 2023

NAMESPACE: db

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: mysql

CHART VERSION: 9.5.0

APP VERSION: 8.0.32

** Please be patient while the chart is being deployed **

Tip:

Watch the deployment status using the command: kubectl get pods -w --namespace db

Services:

echo Primary: mysql.db.svc.cluster.local:3306

Execute the following to get the administrator credentials:

echo Username: root

MYSQL_ROOT_PASSWORD=$(kubectl get secret --namespace db mysql -o jsonpath="{.data.mysql-root-password}" | base64 -d)

To connect to your database:

1. Run a pod that you can use as a client:

kubectl run mysql-client --rm --tty -i --restart='Never' --image docker.io/bitnami/mysql:8.0.32-debian-11-r8 --namespace db --env MYSQL_ROOT_PASSWORD=$MYSQL_ROOT_PASSWORD --command -- bash

2. To connect to primary service (read/write):

mysql -h mysql.db.svc.cluster.local -uroot -p"$MYSQL_ROOT_PASSWORD"

|

|

# helm repo add bitnami https://charts.bitnami.com/bitnami
# helm repo update
# helm pull bitnami/mysql # mysql-9.2.0.tgz, MySQL 8.0.29
# helm show values ./mysql-9.2.0.tgz > mysql-9.2.0.helm-values.yaml
# (1) Change the storage class: global.storageClass
# (2) Run mode: the default is standalone, so no change is needed
# Install
helm --namespace infra-database \
  install mysql bitnami/mysql -f values-test.yaml \
  --create-namespace
# Upgrade
helm --namespace infra-database \
  upgrade mysql bitnami/mysql -f values-test.yaml
# Uninstall and delete the data volume
helm uninstall mysql -n infra-database
kubectl delete pvc data-mysql-0 -n infra-database
# Install kubegems
helm \
  install kubegems kubegems/kubegems -f values-kubegems.yaml \
  --create-namespace
MYSQL_ROOT_PASSWORD=d8jCvGgxx6

|

Cleaning up the kubegems plugins

|

kubectl patch crd/plugins.plugins.kubegems.io -p '{"metadata":{"finalizers":[]}}' --type=merge

|

Listing the images that pods in k8s are using

|

kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" |\

tr -s '[:space:]' '\n' |\

sort |\

uniq -c

|
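The counting stage of that pipeline can be tried on its own, without a cluster. The sketch below wraps it in a function; `count_images` is a hypothetical name, and the sample input stands in for the space-separated list that the jsonpath query prints.

```shell
# count_images: read whitespace-separated image names on stdin and print
# each distinct image preceded by its number of occurrences.
count_images() {
  tr -s '[:space:]' '\n' | sort | uniq -c
}

# Example with a made-up list of images:
# printf 'nginx:1.25 busybox nginx:1.25\n' | count_images
```

`sort` has to come before `uniq -c` because `uniq` only merges adjacent identical lines.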

Installing from a mirror in mainland China

Debian / Ubuntu

|

apt-get update && apt-get install -y apt-transport-https

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

apt-get update

apt-get install -y kubelet kubeadm kubectl

## Alternatively, install a specific version

## apt-get install kubectl=1.21.3-00 kubelet=1.21.3-00 kubeadm=1.21.3-00

|

CentOS / RHEL / Fedora

|

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

systemctl enable kubelet && systemctl start kubelet

## Alternatively, install a specific version

## yum install -y kubelet-1.25.6 kubectl-1.25.6 kubeadm-1.25.6

|

Note: the upstream repository offers no way to sync, so the GPG check on the repository index may fail. In that case, install with yum install -y --nogpgcheck kubelet kubeadm kubectl

Setting the maximum number of Pods per Node

Required Kubernetes version: 1.24+

Steps

- On the worker node in question, inspect the kubelet process and find the directory that holds its config file.

|

systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Thu 2023-05-04 15:00:49 CST; 4min 45s ago

Docs: https://kubernetes.io/docs/

Main PID: 43623 (kubelet)

Tasks: 69

Memory: 192.0M

CGroup: /system.slice/kubelet.service

└─43623 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --container-runtime=remote --container-runtime-endpoint=unix:///va...

...

|

- Edit /var/lib/kubelet/config.yaml and add maxPods: <number> at the end of the file.

|

vim /var/lib/kubelet/config.yaml

apiVersion: kubelet.config.k8s.io/v1beta1

...

kind: KubeletConfiguration

...

volumeStatsAggPeriod: 0s

maxPods: 150 # added setting; the default is 110, raised here to 150

|
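Editing the file by hand works, but on several nodes a small idempotent helper may be more convenient. This is a sketch, assuming GNU `sed` (for `-i`) and a config file that ends with a newline; `set_max_pods` is a hypothetical name, not a kubelet or kubeadm command.

```shell
# set_max_pods FILE N : set maxPods to N in a KubeletConfiguration file,
# replacing an existing top-level maxPods line or appending a new one.
set_max_pods() {
  local file="$1" n="$2"
  if grep -q '^maxPods:' "$file"; then
    sed -i "s/^maxPods:.*/maxPods: ${n}/" "$file"
  else
    printf 'maxPods: %s\n' "$n" >> "$file"
  fi
}

# Example on a worker node (back up the file first):
# set_max_pods /var/lib/kubelet/config.yaml 150
```

Running it twice leaves a single `maxPods` line, so it is safe to repeat.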

- After saving the change, reload the systemd configuration and restart kubelet.

|

systemctl daemon-reload

systemctl restart kubelet

|

- Verify the result (run this on the master node).

|

kubectl describe node node-name|grep -A6 "Capacity\|Allocatable"

Capacity:

...

pods: 150 # now 150

Allocatable:

...

pods: 150 # now 150

|

References

Kubelet configuration file reference:

https://kubernetes.io/zh-cn/docs/reference/config-api/kubelet-config.v1beta1/#kubelet-config-k8s-io-v1beta1-KubeletConfiguration

Ref

Kubespray v2.21.0 offline deployment of a Kubernetes v1.25.6 cluster

Today I deployed k8s 1.29.

The quay.io/calico/node:v3.27.2 image fails with the error below; v3.27.3 is required.

Defaulted container "calico-node" out of: calico-node, upgrade-ipam (init), install-cni (init), flexvol-driver (init)

calico-node: error while loading shared libraries: libpcap.so.0.8: cannot open shared object file: No such file or directory

Today's installation failed with this error:

ERROR! the role 'kubespray-defaults' was not found

Fix: this is a directory permission problem, which sudo resolves.

|

WSL by default doesn't want to touch permissions of shared folders between linux and windows (e.g. `/mnt/c...`). There is a way to disable this standard behaviour though.

1. Enable chmod in WSL: [https://devblogs.microsoft.com/commandline/chmod-chown-wsl-improvements/](https://devblogs.microsoft.com/commandline/chmod-chown-wsl-improvements/)

2. Then `sudo chmod o-w`

|
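A plausible explanation, offered here as an assumption because the notes do not state it: Ansible ignores an `ansible.cfg` found in a world-writable working directory, so the roles path configured there is never read and the role lookup fails. The sketch below checks for that condition; `is_world_writable` is a hypothetical helper name.

```shell
# is_world_writable DIR : succeed if DIR has the "other" write bit set.
is_world_writable() {
  # -maxdepth 0 tests only DIR itself; -perm -0002 requires the o+w bit.
  [ -n "$(find "$1" -maxdepth 0 -perm -0002 2>/dev/null)" ]
}

# Example, run from the kubespray checkout:
# is_world_writable . && echo "world-writable: run chmod o-w on this directory"
```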