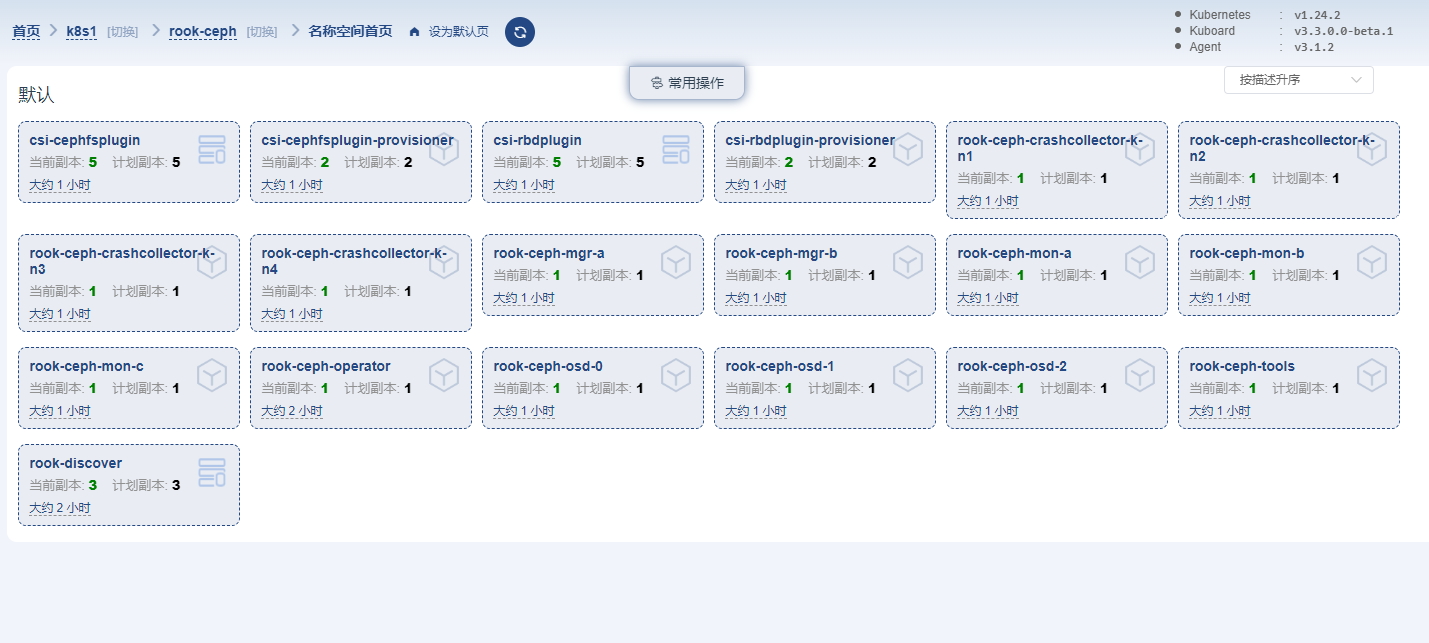

The result after installation is shown in the figure above.

The following files are required:

crds.yaml

common.yaml

operator.yaml

cluster.yaml

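Configuration changes:

Edit cluster.yaml to configure mon, mgr, and related settings, set `useAllNodes: false` and `useAllDevices: false`, and list the storage nodes and devices explicitly.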


    [root@k-m1 examples]# git diff cluster.yaml
    diff --git a/deploy/examples/cluster.yaml b/deploy/examples/cluster.yaml
    index 7740adc44..b76ddd602 100644
    --- a/deploy/examples/cluster.yaml
    +++ b/deploy/examples/cluster.yaml
    @@ -223,8 +223,8 @@ spec:
         mgr: system-cluster-critical
         #crashcollector: rook-ceph-crashcollector-priority-class
       storage: # cluster level storage configuration and selection
    -    useAllNodes: true
    -    useAllDevices: true
    +    useAllNodes: false
    +    useAllDevices: false
         #deviceFilter:
         config:
           # crushRoot: "custom-root" # specify a non-default root label for the CRUSH map
    @@ -235,14 +235,16 @@ spec:
           # encryptedDevice: "true" # the default value for this option is "false"
         # Individual nodes and their config can be specified as well, but 'useAllNodes' above must be set to false. Then, only the named
         # nodes below will be used as storage resources. Each node's 'name' field should match their 'kubernetes.io/hostname' label.
    -    # nodes:
    -    #   - name: "172.17.4.201"
    -    #     devices: # specific devices to use for storage can be specified for each node
    -    #       - name: "sdb"
    -    #       - name: "nvme01" # multiple osds can be created on high performance devices
    -    #         config:
    -    #           osdsPerDevice: "5"
    -    #       - name: "/dev/disk/by-id/ata-ST4000DM004-XXXX" # devices can be specified using full udev paths
    +    nodes:
    +      - name: "k-n1"
    +        devices: # specific devices to use for storage can be specified for each node
    +          - name: "sda"
    +      - name: "k-n2"
    +        devices:
    +          - name: "sda"
    +      - name: "k-n3"
    +        devices:
    +          - name: "sda"
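As the comment in cluster.yaml notes, the `nodes:` list only takes effect when `useAllNodes` is `false`, so it can be worth confirming both flags before applying the file. The following is a minimal sketch: the function name `check_storage_flags` is hypothetical, and the check is a plain text match on the file rather than a real YAML parse.

```shell
# check_storage_flags FILE
# Hypothetical helper: succeeds only if FILE contains uncommented
# "useAllNodes: false" and "useAllDevices: false" lines.
check_storage_flags() {
  file=$1
  grep -Eq '^[[:space:]]*useAllNodes:[[:space:]]*false' "$file" &&
    grep -Eq '^[[:space:]]*useAllDevices:[[:space:]]*false' "$file"
}

# Example:
#   check_storage_flags cluster.yaml && echo "storage flags look right"
```

Each node `name` should also match that node's `kubernetes.io/hostname` label, which can be listed with `kubectl get nodes -L kubernetes.io/hostname`.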

Install and create the cluster:

    kubectl apply -f crds.yaml -f common.yaml -f operator.yaml -f cluster.yaml

Check the pods after installation:


    [root@k-m1 examples]# kubectl get pod -n rook-ceph
    NAME                                             READY   STATUS      RESTARTS            AGE
    csi-cephfsplugin-dsxb4                           2/2     Running     0                   53m
    csi-cephfsplugin-k67dv                           2/2     Running     0                   53m
    csi-cephfsplugin-provisioner-6889564d46-6k8vf    5/5     Running     0                   53m
    csi-cephfsplugin-provisioner-6889564d46-lqf5s    5/5     Running     0                   53m
    csi-cephfsplugin-pz9g6                           2/2     Running     0                   53m
    csi-cephfsplugin-r47jg                           2/2     Running     0                   53m
    csi-cephfsplugin-rfztn                           2/2     Running     0                   53m
    csi-rbdplugin-2f72x                              2/2     Running     0                   53m
    csi-rbdplugin-2l26p                              2/2     Running     0                   53m
    csi-rbdplugin-ft8fh                              2/2     Running     0                   53m
    csi-rbdplugin-lvf89                              2/2     Running     0                   53m
    csi-rbdplugin-p4ssl                              2/2     Running     0                   53m
    csi-rbdplugin-provisioner-5d9b8df7f9-jgvf6       5/5     Running     0                   53m
    csi-rbdplugin-provisioner-5d9b8df7f9-rhbgm       5/5     Running     0                   53m
    rook-ceph-crashcollector-k-n1-5d66864d9b-hmmq9   1/1     Running     0                   51m
    rook-ceph-crashcollector-k-n2-58f8744f4c-v6w5h   1/1     Running     0                   51m
    rook-ceph-crashcollector-k-n3-6664d4cfc4-79vlp   1/1     Running     0                   51m
    rook-ceph-crashcollector-k-n4-5cbddfbf5f-4nv9g   1/1     Running     0                   52m
    rook-ceph-mgr-a-585b5856cb-4z76c                 3/3     Running     0                   52m
    rook-ceph-mgr-b-7df6967cb9-6mdl9                 3/3     Running     0                   52m
    rook-ceph-mon-a-6fb8474b9-9x5xw                  2/2     Running     0                   53m
    rook-ceph-mon-b-5846f87f44-d49dz                 2/2     Running     0                   52m
    rook-ceph-mon-c-5c8f7f4698-zvhk9                 2/2     Running     0                   52m
    rook-ceph-operator-86c6d96dbc-hbfkt              1/1     Running     1 (78m ago)         99m
    rook-ceph-osd-0-65c89bdd8c-2bmqt                 2/2     Running     0                   51m
    rook-ceph-osd-1-55f764bdcd-np6kj                 2/2     Running     0                   51m
    rook-ceph-osd-2-659dc694d5-q8sfh                 2/2     Running     0                   51m
    rook-ceph-osd-prepare-k-n1-2clwz                 0/1     Completed   0                   51m
    rook-ceph-osd-prepare-k-n2-gt2w9                 0/1     Completed   0                   51m
    rook-ceph-osd-prepare-k-n3-svffs                 0/1     Completed   0                   51m
    rook-ceph-tools-6584b468fd-rg598                 1/1     Running     0                   49m
    rook-discover-d2gmv                              1/1     Running     1 (59m ago)         99m
    rook-discover-ftkjg                              1/1     Running     1 (60m ago)         99m
    rook-discover-l5fsq                              1/1     Running     1 (<invalid> ago)   99m
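With this many pods, scanning the STATUS column by eye is error-prone. A minimal sketch of a filter follows; the function name `unhealthy_pods` is hypothetical, it assumes the default `kubectl get pod` output with a header line, and it only looks at the STATUS column (it does not compare the READY counts).

```shell
# unhealthy_pods
# Hypothetical helper: reads `kubectl get pod` output (including the header
# line) on stdin and prints "NAME STATUS" for every pod whose status is
# neither Running nor Completed. No output means nothing was flagged.
unhealthy_pods() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1, $3 }'
}

# Example (assumes kubectl access to the cluster):
#   kubectl get pod -n rook-ceph | unhealthy_pods
```

In the listing above, the `rook-ceph-osd-prepare-*` pods are expected to end in `Completed`, which is why that status is treated as healthy here.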

Install the Ceph client tools (toolbox):


    [root@k-m1 examples]# kubectl create -f toolbox.yaml -n rook-ceph
    deployment.apps/rook-ceph-tools created
    [root@k-m1 examples]# kubectl get pod -n rook-ceph | grep tool
    rook-ceph-tools-6584b468fd-gb2qn   1/1   Running   0   8s
    [root@k-m1 examples]# kubectl exec -it rook-ceph-tools-fc5f9586c-g5q5d -n rook-ceph -- bash
    Error from server (NotFound): pods "rook-ceph-tools-fc5f9586c-g5q5d" not found
    [root@k-m1 examples]# kubectl exec -it rook-ceph-tools-6584b468fd-gb2qn -n rook-ceph -- bash
    bash-4.4$ ceph status
      cluster:
        id:     5aafad3f-3e20-4535-873c-0e6ff5bbdade
        health: HEALTH_WARN
                mons a,c are low on available space
                OSD count 0 < osd_pool_default_size 3

      services:
        mon: 3 daemons, quorum a,b,c (age 7m)
        mgr: b(active, since 5m), standbys: a
        osd: 0 osds: 0 up, 0 in

      data:
        pools:   0 pools, 0 pgs
        objects: 0 objects, 0 B
        usage:   0 B used, 0 B / 0 B avail
        pgs:
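The `NotFound` error in the session above comes from using a stale pod name. Addressing the deployment instead (`kubectl -n rook-ceph exec -it deploy/rook-ceph-tools -- bash`) avoids having to copy the generated pod suffix. The sketch below additionally pulls the health value out of `ceph status` output; the function name `ceph_health` is hypothetical, and it assumes the output layout shown above.

```shell
# ceph_health
# Hypothetical helper: reads `ceph status` output on stdin and prints the
# value on the "health:" line, for example HEALTH_OK or HEALTH_WARN.
ceph_health() {
  awk '$1 == "health:" { print $2; exit }'
}

# Example (assumes the toolbox deployment is named rook-ceph-tools):
#   kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph status | ceph_health
```

For the status shown above this would report `HEALTH_WARN`; the warning detail lines that follow the `health:` line are not printed by this sketch.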

Deploy the Ceph dashboard:


By default the dashboard service is only reachable inside the cluster (ClusterIP):

    [root@k-m1 examples]# kubectl get svc -n rook-ceph | grep dashboard
    rook-ceph-mgr-dashboard   ClusterIP   10.233.26.158   <none>   8443/TCP   57m

To expose it outside the cluster, create a NodePort service in dashboard-np.yaml (`vim dashboard-np.yaml`) with the following content:

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: rook-ceph-mgr
        ceph_daemon_id: a
        rook_cluster: rook-ceph
      name: rook-ceph-mgr-dashboard-np
      namespace: rook-ceph
    spec:
      ports:
      - name: http-dashboard
        port: 8443
        protocol: TCP
        targetPort: 8443
      selector:
        app: rook-ceph-mgr
        ceph_daemon_id: a
        rook_cluster: rook-ceph
      sessionAffinity: None
      type: NodePort

After the manifest is applied, the NodePort service appears alongside the original one:

    [root@k-m1 examples]# kubectl get svc -n rook-ceph | grep dashboard
    rook-ceph-mgr-dashboard      ClusterIP   10.233.26.158   <none>   8443/TCP         61m
    rook-ceph-mgr-dashboard-np   NodePort    10.233.36.43    <none>   8443:54219/TCP   3m8s
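The node port (54219 here) is assigned by the cluster, so it will differ between installations. As a minimal sketch, it can be read out of the service listing instead of being copied by hand; the function name `node_port` is hypothetical, and it assumes a single-port NodePort service whose PORT(S) column has the form `8443:54219/TCP`.

```shell
# node_port SERVICE
# Hypothetical helper: reads `kubectl get svc` output on stdin and prints the
# node port of SERVICE, taken from a PORT(S) value such as 8443:54219/TCP.
# Only meaningful for NodePort services with a single port.
node_port() {
  awk -v svc="$1" '$1 == svc { split($5, parts, "[:/]"); print parts[2] }'
}

# Example (assumes kubectl access to the cluster):
#   kubectl get svc -n rook-ceph | node_port rook-ceph-mgr-dashboard-np
```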

Access the dashboard at https://10.7.20.26:54219

Retrieve the login password:

    kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo

Resolving warnings (see https://docs.ceph.com/en/octopus/rados/operations/health-checks/):

    [root@k8s-master01 ceph]# kubectl exec -it rook-ceph-tools-fc5f9586c-g5q5d -n rook-ceph -- bash
    [root@rook-ceph-tools-fc5f9586c-g5q5d /]# ceph config set mon auth_allow_insecure_global_id_reclaim false

Cleanup: see the following references.

https://www.cnblogs.com/hahaha111122222/p/15718855.html

https://blog.csdn.net/Hansyang84/article/details/114026876

Licensed under CC BY-NC-SA 4.0

Last updated on Sep 10, 2025 02:16 UTC