Steps to install ECK


```
zsh 🌈 wget -L https://download.elastic.co/downloads/eck/2.4.0/operator.yaml
--2022-09-28 14:26:53-- https://download.elastic.co/downloads/eck/2.4.0/operator.yaml
Loaded CA certificate '/etc/ssl/certs/ca-certificates.crt'
Resolving download.elastic.co (download.elastic.co)... 34.120.127.130, 2600:1901:0:1d7::
Connecting to download.elastic.co (download.elastic.co)|34.120.127.130|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 15869 (15K) [binary/octet-stream]
Saving to: ‘operator.yaml’

operator.yaml 100%[============================>] 15.50K --.-KB/s in 0.02s

2022-09-28 14:26:55 (677 KB/s) - ‘operator.yaml’ saved [15869/15869]

k8s-eggjs/elasticsearch on main [📝🤷]
zsh 🌈 ls
crds.yaml operate.md operator.yaml

k8s-eggjs/elasticsearch on main [📝🤷]
zsh 🌈 kubectl apply -f ./
customresourcedefinition.apiextensions.k8s.io/agents.agent.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/apmservers.apm.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/beats.beat.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/elasticmapsservers.maps.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/elasticsearches.elasticsearch.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/enterprisesearches.enterprisesearch.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/kibanas.kibana.k8s.elastic.co created
namespace/elastic-system created
serviceaccount/elastic-operator created
secret/elastic-webhook-server-cert created
configmap/elastic-operator created
clusterrole.rbac.authorization.k8s.io/elastic-operator created
clusterrole.rbac.authorization.k8s.io/elastic-operator-view created
clusterrole.rbac.authorization.k8s.io/elastic-operator-edit created
clusterrolebinding.rbac.authorization.k8s.io/elastic-operator created
service/elastic-webhook-server created
statefulset.apps/elastic-operator created
validatingwebhookconfiguration.admissionregistration.k8s.io/elastic-webhook.k8s.elastic.co created
```

Check that everything was created successfully:


```
zsh 🌈 kubectl get all -n elastic-system
NAME                     READY   STATUS    RESTARTS   AGE
pod/elastic-operator-0   1/1     Running   0          4m7s

NAME                             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
service/elastic-webhook-server   ClusterIP   10.233.17.58   <none>        443/TCP   4m7s

NAME                                READY   AGE
statefulset.apps/elastic-operator   1/1     4m7s
```

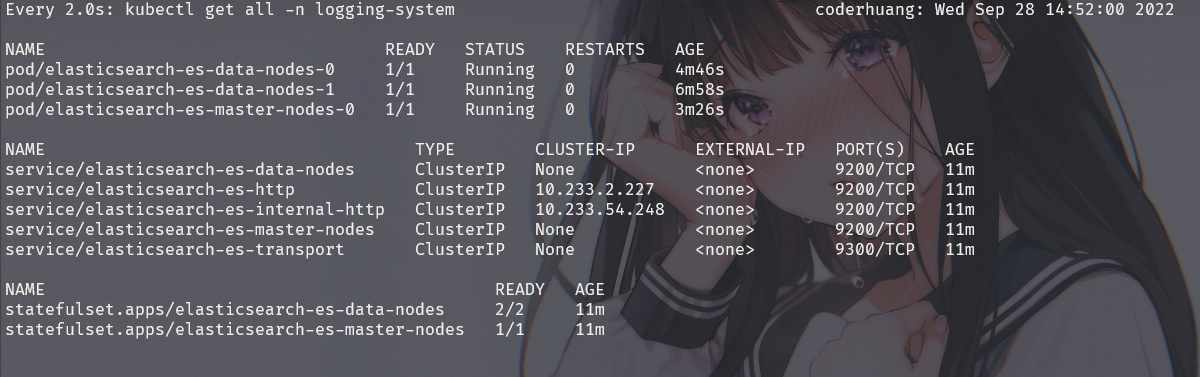

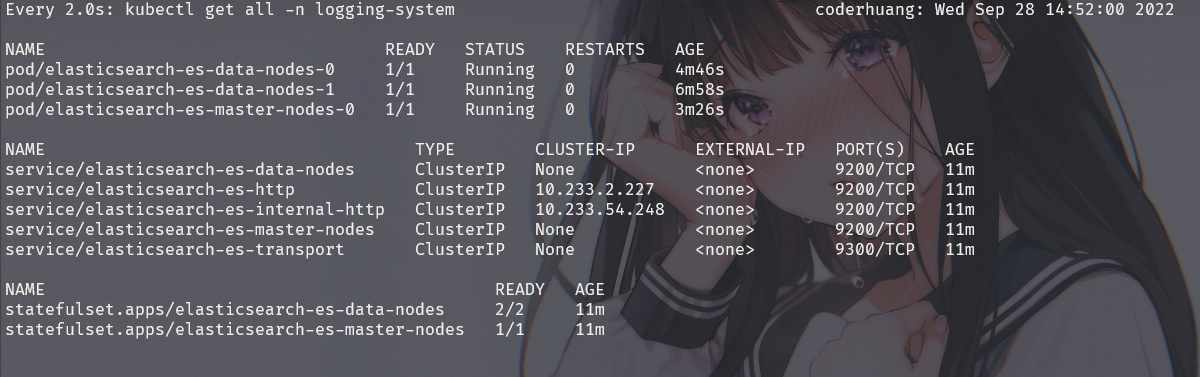

2. Deploy Elasticsearch

Storage is provided by node-local volumes.

(1) Create the StorageClass


```yaml
# es-data-storageclass.yaml
# Apply with: kubectl apply -f es-data-storageclass.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: es-data
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Retain
```

(2) Create the PVs

If the /var/lib/hawkeye/esdata directory does not exist on a node, create it first.


```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-data-0
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  storageClassName: es-data
  persistentVolumeReclaimPolicy: Retain
  local:
    path: /var/lib/hawkeye/esdata
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - k-116-n1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-data-1
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  storageClassName: es-data
  persistentVolumeReclaimPolicy: Retain
  local:
    path: /var/lib/hawkeye/esdata
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - k-116-n2
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-data-2
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  storageClassName: es-data
  persistentVolumeReclaimPolicy: Retain
  local:
    path: /var/lib/hawkeye/esdata
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - k-116-n3
```
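The three PersistentVolume manifests above differ only in their name and node hostname, so they could also be generated from one template. The following is a minimal sketch, assuming a POSIX shell; the output file name `es-data-pv.generated.yaml` is a hypothetical choice, while the node names, path, and sizes are the ones used above.

```shell
#!/bin/sh
# Generate one local PersistentVolume per node from a single template.
# "out" is a hypothetical file name; the node names come from the manifests above.
out=es-data-pv.generated.yaml
: > "$out"
i=0
for node in k-116-n1 k-116-n2 k-116-n3; do
  # Separate YAML documents with '---' (not needed before the first one).
  if [ "$i" -gt 0 ]; then
    echo '---' >> "$out"
  fi
  cat >> "$out" <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-data-$i
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  storageClassName: es-data
  persistentVolumeReclaimPolicy: Retain
  local:
    path: /var/lib/hawkeye/esdata
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - $node
EOF
  i=$((i + 1))
done
echo "wrote $i PersistentVolume manifests to $out"
```

The generated file can then be applied with `kubectl apply -f es-data-pv.generated.yaml`, in the same way as the hand-written manifests.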

(3) Create the PVCs


```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: elasticsearch-data-elasticsearch-es-master-nodes-0
  namespace: logging-system
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
  storageClassName: es-data
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: elasticsearch-data-elasticsearch-es-data-nodes-0
  namespace: logging-system
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
  storageClassName: es-data
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: elasticsearch-data-elasticsearch-es-data-nodes-1
  namespace: logging-system
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
  storageClassName: es-data
```

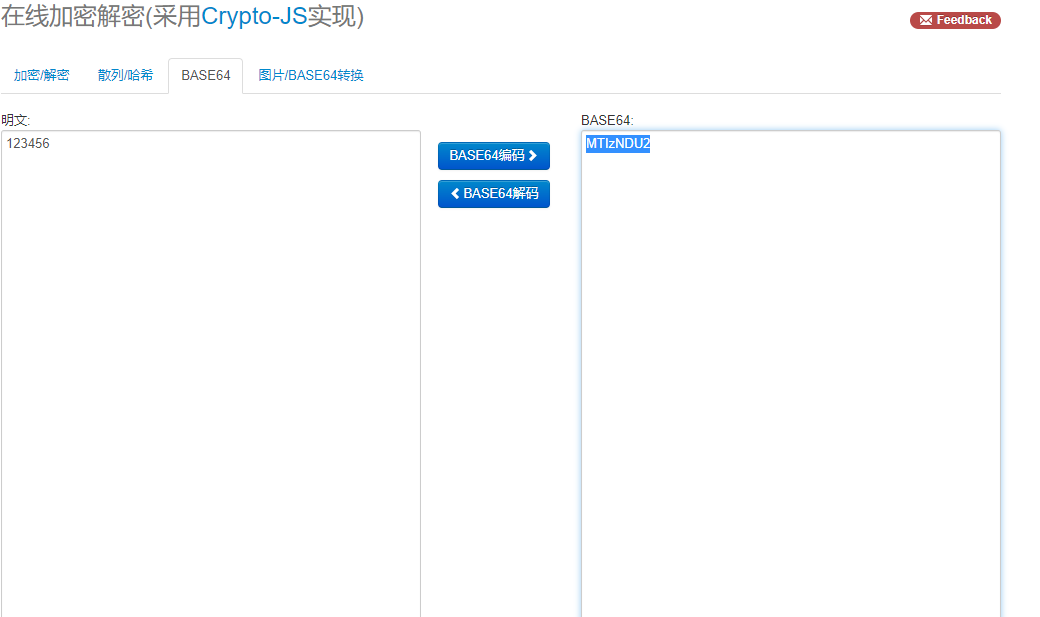

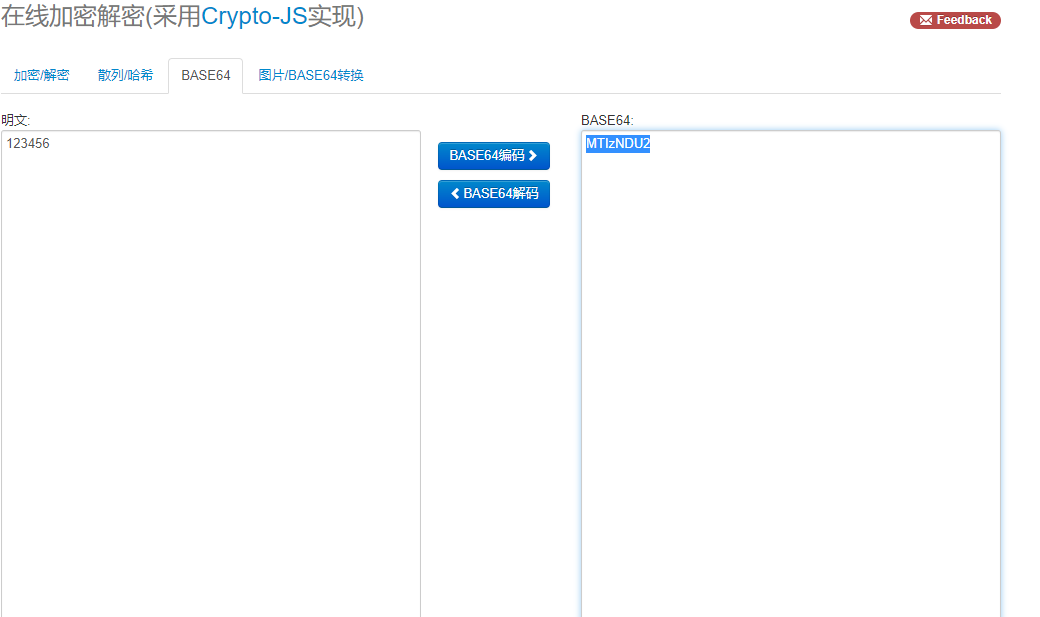

(4) Create the cluster password. If you skip this step, the cluster generates a random one.


```yaml
apiVersion: v1
data:
  elastic: yourSecret  # must be base64-encoded
kind: Secret
metadata:
  labels:
    common.k8s.elastic.co/type: elasticsearch
    eck.k8s.elastic.co/credentials: "true"
    elasticsearch.k8s.elastic.co/cluster-name: elasticsearch
  name: elasticsearch-es-elastic-user  # this name must not be changed
  namespace: logging-system
type: Opaque
```
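Values under `data:` in a Secret must be base64-encoded (this is an encoding, not encryption). A minimal sketch of producing the value for the `elastic` key, assuming a `base64` utility that accepts `--decode`; `yourSecret` is the placeholder from the manifest above, not a real password.

```shell
#!/bin/sh
# 'yourSecret' is the placeholder from the manifest; substitute the real password.
password='yourSecret'
# printf avoids the trailing newline that echo would add to the encoded value.
encoded=$(printf '%s' "$password" | base64)
echo "elastic: $encoded"
# Round-trip check: decoding must give back the original password.
decoded=$(printf '%s' "$encoded" | base64 --decode)
echo "decoded: $decoded"
```

Using `printf '%s'` rather than `echo` matters here: an encoded trailing newline would become part of the password.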

(5) Create the Elasticsearch cluster

This creates three nodes: two data nodes and one master node.


```yaml
apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: elasticsearch
  namespace: logging-system
spec:
  version: 7.2.0
  image: 10.7.116.12:5000/elasticsearch/elasticsearch:7.2.0
  nodeSets:
  - name: master-nodes
    count: 1
    config:
      node.master: true
      node.data: false
    podTemplate:
      metadata:
        namespace: logging-system
      spec:
        initContainers:
        - name: sysctl
          securityContext:
            privileged: true
          command: ['sh', '-c', 'sysctl -w vm.max_map_count=262144']
        #volumes:
        #- name: elasticsearch-data
        #  emptyDir: {}
        containers:
        - name: elasticsearch
          env:
          - name: ES_JAVA_OPTS
            value: -Xms1g -Xmx1g
          resources:
            requests:
              memory: 2Gi
            limits:
              memory: 10Gi
    volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 20Gi
        storageClassName: es-data
  - name: data-nodes
    count: 2
    config:
      node.master: false
      node.data: true
    podTemplate:
      metadata:
        namespace: logging-system
      spec:
        initContainers:
        - name: sysctl
          securityContext:
            privileged: true
          command: ['sh', '-c', 'sysctl -w vm.max_map_count=262144']
        #volumes:
        #- name: elasticsearch-data
        #  emptyDir: {}
        containers:
        - name: elasticsearch
          env:
          - name: ES_JAVA_OPTS
            value: -Xms1g -Xmx1g
          resources:
            requests:
              memory: 2Gi
            limits:
              memory: 10Gi
    volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 20Gi
        storageClassName: es-data
```

2. Deploy Kibana


```yaml
apiVersion: kibana.k8s.elastic.co/v1
kind: Kibana
metadata:
  name: kibana
  namespace: logging-system
spec:
  version: 7.2.0
  count: 1
  elasticsearchRef:
    name: elasticsearch
  http:
    tls:
      selfSignedCertificate:
        disabled: true
```

You can now log in to Kibana; the username and password are the same as for the Elasticsearch cluster.

3. Deploy Fluent Bit


fluentbit-clusterRoleBinding.yaml

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: fluentbit-read
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: fluentbit-read
subjects:
- kind: ServiceAccount
  name: fluentbit
  namespace: logging-system
```

fluentbit-clusterRole.yaml

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentbit-read
rules:
- apiGroups: [""]
  resources:
  - namespaces
  - pods
  verbs: ["get", "list", "watch"]
```

fluentbit-configmap.yaml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluentbit-config
  namespace: logging-system
data:
  filter-kubernetes.conf: |
    [FILTER]
        Name record_modifier
        Match *
        Record hostname ${HOSTNAME}
  fluent-bit.conf: |
    [SERVICE]
        # Set an interval of seconds before to flush records to a destination
        Flush 5
        # Instruct Fluent Bit to run in foreground or background mode.
        Daemon Off
        # Set the verbosity level of the service, values can be:
        Log_Level info
        # Specify an optional 'Parsers' configuration file
        Parsers_File parsers.conf
        # Plugins_File plugins.conf
        # Enable/Disable the built-in Server for metrics
        HTTP_Server On
        HTTP_Listen 0.0.0.0
        HTTP_Port 2020
    @INCLUDE input-kubernetes.conf
    @INCLUDE filter-kubernetes.conf
    @INCLUDE output-elasticsearch.conf
  input-kubernetes.conf: |
    [INPUT]
        Name systemd
        Tag host.*
        Path /var/log/journal
        DB /var/log/fluentbit/td.sys.pos
  output-elasticsearch.conf: |
    [OUTPUT]
        Name es
        Match kube.*
        Host ${FLUENT_ELASTICSEARCH_HOST}
        Port ${FLUENT_ELASTICSEARCH_PORT}
        tls ${TLS_ENABLE}
        tls.verify ${TLS_VERIFY}
        HTTP_User ${ELASTICSEARCH_USERNAME}
        HTTP_Passwd ${ELASTICSEARCH_PASSWORD}
        # Replace_Dots On
        Retry_Limit False
        Index kube
        Type kube
        Buffer_Size 2M
        Include_Tag_Key On
        Tag_Key component
        Logstash_Format On
        Logstash_prefix umstor-monitor
  parsers.conf: |
    [PARSER]
        Name apache
        Format regex
        Regex ^(?<host>[^ ]*) [^ ]* (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>.*)")?$
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z
    [PARSER]
        Name apache2
        Format regex
        Regex ^(?<host>[^ ]*) [^ ]* (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^ ]*) +\S*)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z
    [PARSER]
        Name apache_error
        Format regex
        Regex ^\[[^ ]* (?<time>[^\]]*)\] \[(?<level>[^\]]*)\](?: \[pid (?<pid>[^\]]*)\])?( \[client (?<client>[^\]]*)\])? (?<message>.*)$
    [PARSER]
        Name nginx
        Format regex
        Regex ^(?<remote>[^ ]*) (?<host>[^ ]*) (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z
    [PARSER]
        Name json
        Format json
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z
    [PARSER]
        Name docker
        Format json
        Time_Key time
        Time_Format %Y-%m-%dT%H:%M:%S.%L
        Time_Keep On
        # Command | Decoder | Field | Optional Action
        # =============|==================|=================
        Decode_Field_As escaped log
    [PARSER]
        Name docker-daemon
        Format regex
        Regex time="(?<time>[^ ]*)" level=(?<level>[^ ]*) msg="(?<msg>[^ ].*)"
        Time_Key time
        Time_Format %Y-%m-%dT%H:%M:%S.%L
        Time_Keep On
    [PARSER]
        Name syslog-rfc5424
        Format regex
        Regex ^\<(?<pri>[0-9]{1,5})\>1 (?<time>[^ ]+) (?<host>[^ ]+) (?<ident>[^ ]+) (?<pid>[-0-9]+) (?<msgid>[^ ]+) (?<extradata>(\[(.*)\]|-)) (?<message>.+)$
        Time_Key time
        Time_Format %Y-%m-%dT%H:%M:%S.%L
        Time_Keep On
    [PARSER]
        Name syslog-rfc3164-local
        Format regex
        Regex ^\<(?<pri>[0-9]+)\>(?<time>[^ ]* {1,2}[^ ]* [^ ]*) (?<ident>[a-zA-Z0-9_\/\.\-]*)(?:\[(?<pid>[0-9]+)\])?(?:[^\:]*\:)? *(?<message>.*)$
        Time_Key time
        Time_Format %b %d %H:%M:%S
        Time_Keep On
    [PARSER]
        Name syslog-rfc3164
        Format regex
        Regex /^\<(?<pri>[0-9]+)\>(?<time>[^ ]* {1,2}[^ ]* [^ ]*) (?<host>[^ ]*) (?<ident>[a-zA-Z0-9_\/\.\-]*)(?:\[(?<pid>[0-9]+)\])?(?:[^\:]*\:)? *(?<message>.*)$/
        Time_Key time
        Time_Format %b %d %H:%M:%S
        Time_Format %Y-%m-%dT%H:%M:%S.%L
        Time_Keep On
    [PARSER]
        Name mongodb
        Format regex
        Regex ^(?<time>[^ ]*)\s+(?<severity>\w)\s+(?<component>[^ ]+)\s+\[(?<context>[^\]]+)]\s+(?<message>.*?) *(?<ms>(\d+))?(:?ms)?$
        Time_Format %Y-%m-%dT%H:%M:%S.%L
        Time_Keep On
        Time_Key time
    [PARSER]
        # http://rubular.com/r/izM6olvshn
        Name crio
        Format Regex
        Regex /^(?<time>.+)\b(?<stream>stdout|stderr)\b(?<log>.*)$/
        Time_Key time
        Time_Format %Y-%m-%dT%H:%M:%S.%N%:z
        Time_Keep On
    [PARSER]
        Name kube-custom
        Format regex
        Regex var\.log\.containers\.(?<pod_name>[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*)_(?<namespace_name>[^_]+)_(?<container_name>.+)-(?<docker_id>[a-z0-9]{64})\.log$
    [PARSER]
        Name filter-kube-test
        Format regex
        Regex .*kubernetes.(?<pod_name>[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*)_(?<namespace_name>[^_]+)_(?<container_name>.+)-(?<docker_id>[a-z0-9]{64})\.log$
    [PARSER]
        # umstor for all log files
        # http://rubular.com/r/IvZVElTgNl
        Name umstor
        Format regex
        Regex ^(?<log_time>[^ ][-.\d\+:T]+[ ]*[.:\d]*)\s+(?<thread_id>\w+)\s+(?<log_level>-*\d+)\s+(?<message>.*)$
        Time_Format %Y-%m-%d %H:%M:%S.%L
        Time_Keep Off
        Time_Key log_time
    [PARSER]
        # scrub for osd
        Name umstor-scrub
        Format regex
        Regex ^(?<log_time>[^ ][-.\d\+:T]+[ ]*[.:\d]*)\s+(?<m>\w+)\s+(?<ret>-*\d+)\s+(?<message>.*)\s+(?<scrub_pg>\d+.\w+)\s+(?<scrub_status>scrub\s\w+)$
        Time_Format %Y-%m-%d %H:%M:%S.%L
        Time_Keep Off
        Time_Key log_time
    [PARSER]
        # deep-scrub for osd
        Name umstor-deep-scrub
        Format regex
        Regex ^(?<log_time>[^ ][-.\d\+:T]+[ ]*[.:\d]*)\s+(?<m>\w+)\s+(?<ret>-*\d+)\s+(?<message>.*)\s+(?<scrub_pg>\d+.\w+)\s+(?<scrub_status>deep-scrub\s\w+)$
        Time_Format %Y-%m-%d %H:%M:%S.%L
        Time_Keep Off
        Time_Key log_time
    [PARSER]
        # log warning for osd, mon
        Name umstor-log-warn
        Format regex
        Regex ^(?<log_time>[^ ][-.\d\+:T]+[ ]*[.:\d]*)\s+(?<m>\w+)\s+(?<ret>-*\d+)\s+(?<log_channel>[^ ]+)\s+\w+\s+(?<log_level>[\[WRN\]]+)\s+(?<message>.*)$
        Time_Format %Y-%m-%d %H:%M:%S.%L
        Time_Keep Off
        Time_Key log_time
    [PARSER]
        # log debug for osd, mon
        Name umstor-log-debug
        Format regex
        Regex ^(?<log_time>[^ ][-.\d\+:T]+[ ]*[.:\d]*)\s+(?<m>\w+)\s+(?<ret>-*\d+)\s+(?<log_channel>[^ ]+)\s+\w+\s+(?<log_level>[\[DBG\]]+)\s+(?<message>.*)$
        Time_Format %Y-%m-%d %H:%M:%S.%L
        Time_Keep Off
        Time_Key log_time
```

fluentbit-daemonset.yaml

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentbit
  namespace: logging-system
  labels:
    k8s-app: fluentbit-logging
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    matchLabels:
      k8s-app: fluentbit-logging
      kubernetes.io/cluster-service: "true"
  template:
    metadata:
      labels:
        k8s-app: fluentbit-logging
        kubernetes.io/cluster-service: "true"
      annotations:
        prometheus.io/path: /api/v1/metrics/prometheus
    spec:
      containers:
      - name: fluentbit
        image: registry.umstor.io:5050/vendor/fluent-bit:1.3
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 2020
          name: http-metrics
        env:
        - name: FLUENT_ELASTICSEARCH_HOST
          value: "elasticsearch-es-http"
        - name: FLUENT_ELASTICSEARCH_PORT
          value: "9200"
        - name: ELASTICSEARCH_USERNAME
          value: "elastic"
        - name: ELASTICSEARCH_PASSWORD
          value: "r00tme"  # change this to your own password
        - name: TLS_ENABLE
          value: "On"
        - name: TLS_VERIFY
          value: "Off"
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: fluentbit-config
          mountPath: /fluent-bit/etc/
      terminationGracePeriodSeconds: 10
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: fluentbit-config
        configMap:
          name: fluentbit-config
      serviceAccountName: fluentbit
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      - operator: "Exists"
        effect: "NoExecute"
      - operator: "Exists"
        effect: "NoSchedule"
```
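With `Logstash_Format On` and `Logstash_prefix umstor-monitor` in the output section of the ConfigMap, Fluent Bit writes to one index per day, named from the prefix plus a date. A small sketch of the resulting name, assuming the default `%Y.%m.%d` date format and a date taken in UTC (both are assumptions about this setup, not something the configuration above states):

```shell
#!/bin/sh
# Prefix taken from Logstash_prefix in output-elasticsearch.conf.
prefix='umstor-monitor'
# Assumption: the date part uses the %Y.%m.%d pattern and UTC.
index="${prefix}-$(date -u +%Y.%m.%d)"
echo "$index"
```

These dated names are what the Curator cleanup later in this post relies on, since its age filter parses the date out of the index name.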


fluentbit-serviceAccount.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentbit
  namespace: logging-system
```


fluentbit-service.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: fluentbit-logging
  name: fluentbit-logging
  namespace: logging-system
spec:
  clusterIP: None
  ports:
  - name: http-metrics
    port: 2020
    protocol: TCP
    targetPort: http-metrics
  type: ClusterIP
  selector:
    k8s-app: fluentbit-logging
```


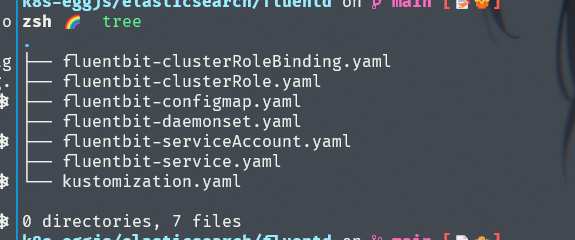

kustomization.yaml

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: logging-system
resources:
- fluentbit-clusterRoleBinding.yaml
- fluentbit-clusterRole.yaml
- fluentbit-daemonset.yaml
- fluentbit-serviceAccount.yaml
- fluentbit-service.yaml
- fluentbit-configmap.yaml
```

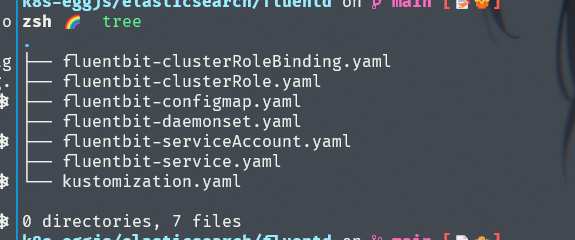

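After creating them, check that there are seven files in total, as shown in the figure.

This configuration can also be changed to collect logs through CRI. The systemd input above does not pick up container logs, so configure a tail input with a CRI parser instead: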


```
# CRI Parser
[PARSER]
    # http://rubular.com/r/tjUt3Awgg4
    Name cri
    Format regex
    Regex ^(?<time>[^ ]+) (?<stream>stdout|stderr) (?<logtag>[^ ]*) (?<message>.*)$
    Time_Key time
    Time_Format %Y-%m-%dT%H:%M:%S.%L%z

[INPUT]
    Name tail
    Path /var/log/containers/*.log
    Parser cri
    Tag kube.*
    Mem_Buf_Limit 5MB
    Skip_Long_Lines On
```
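To see what the `cri` parser regex extracts, the same pattern can be tried against a sample line with `sed`. This is only a sketch: the log line is made up, and because `sed -E` has no named groups, the groups `time`, `stream`, `logtag`, and `message` are replaced by numbered ones in the same order.

```shell
#!/bin/sh
# Hypothetical CRI log line: timestamp, stream, tag (F = full line), message.
line='2022-09-28T14:26:53.123456789+08:00 stdout F hello from the container'
# Same pattern as the cri parser, with named groups replaced by numbered ones.
re='^([^ ]+) (stdout|stderr) ([^ ]*) (.*)$'
ts=$(printf '%s\n' "$line" | sed -E "s/$re/\1/")
stream=$(printf '%s\n' "$line" | sed -E "s/$re/\2/")
logtag=$(printf '%s\n' "$line" | sed -E "s/$re/\3/")
message=$(printf '%s\n' "$line" | sed -E "s/$re/\4/")
echo "time=$ts"
echo "stream=$stream"
echo "logtag=$logtag"
echo "message=$message"
```

The first three fields cannot contain spaces, so everything after the third space ends up in `message`, including any spaces in the log text itself.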

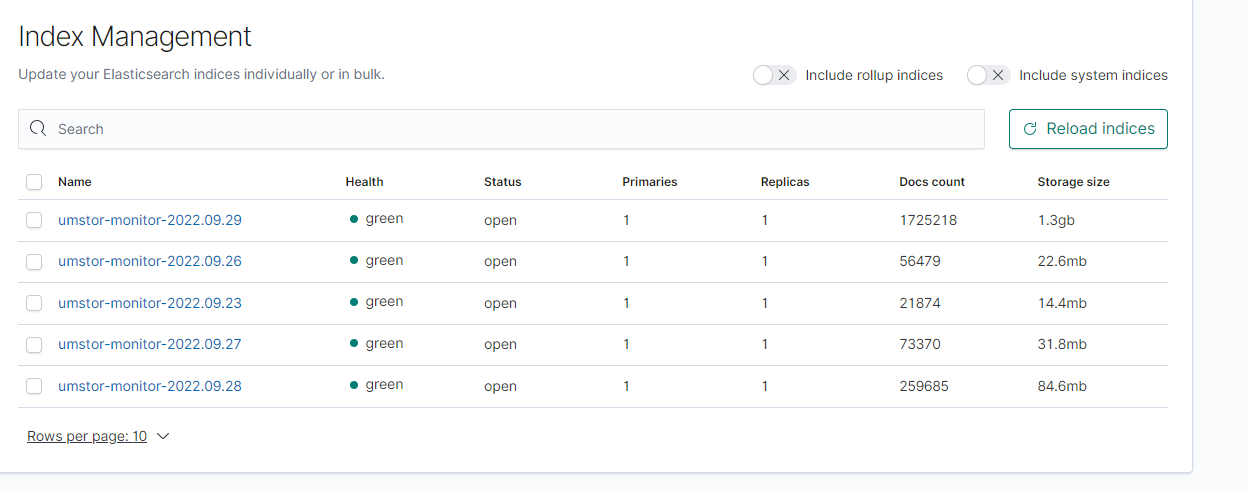

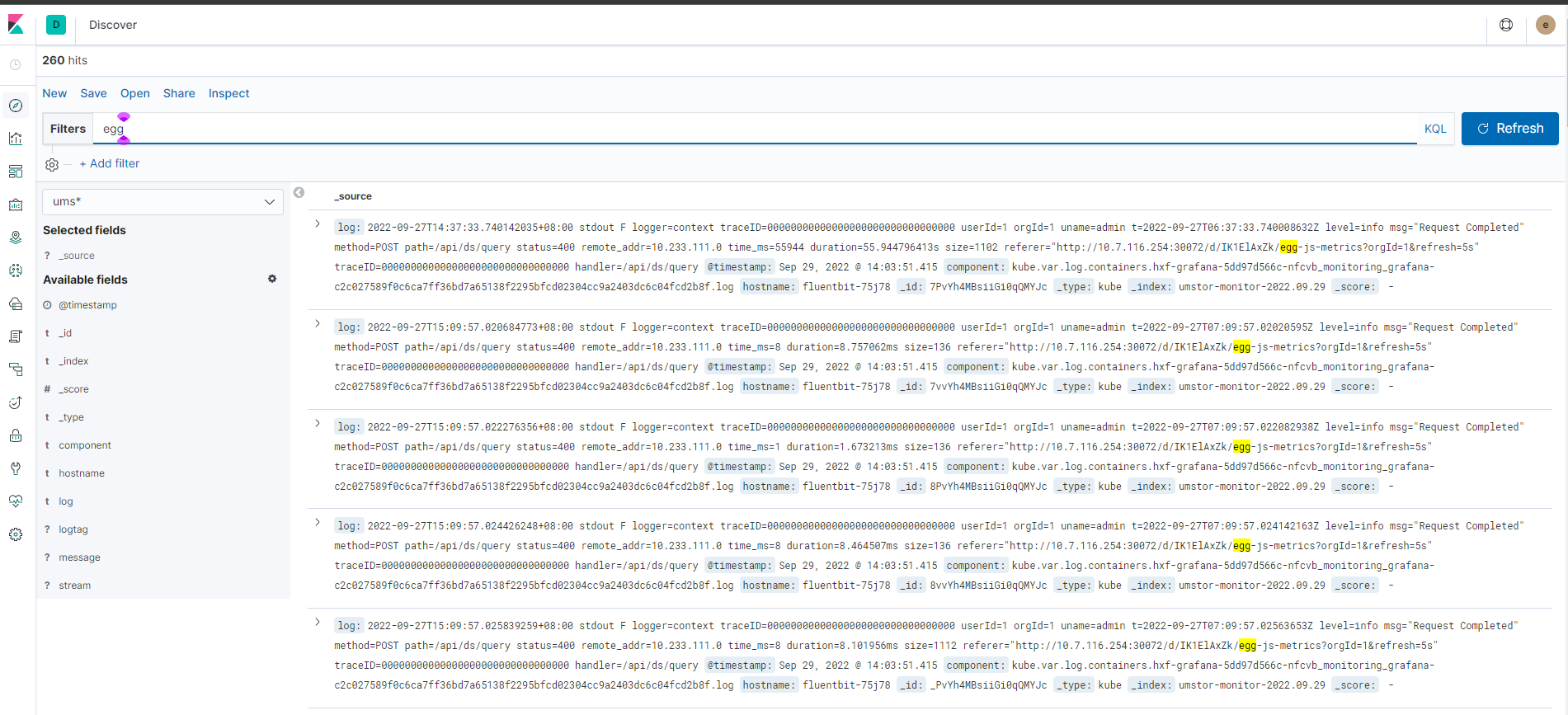

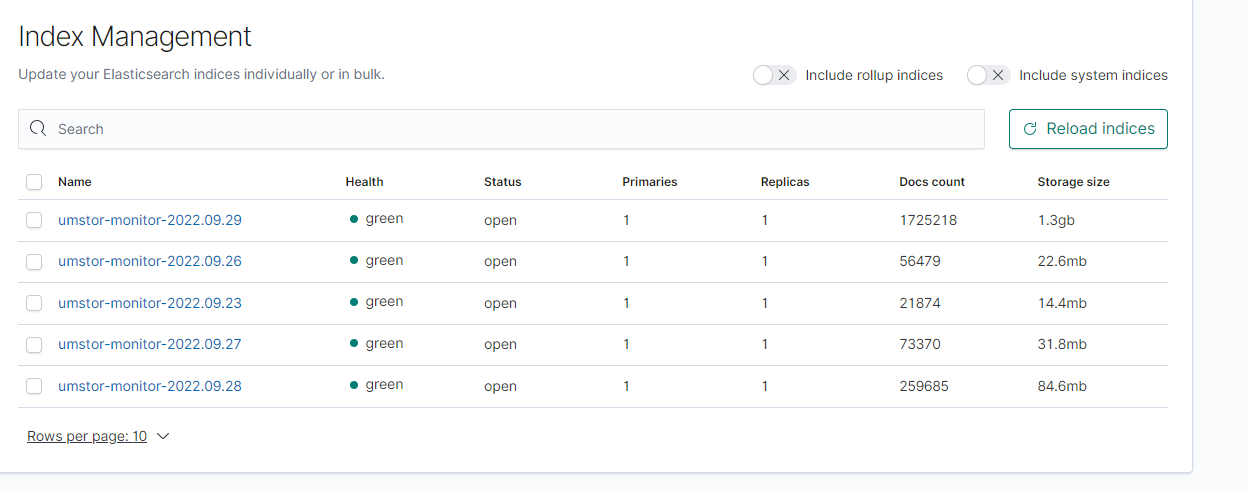

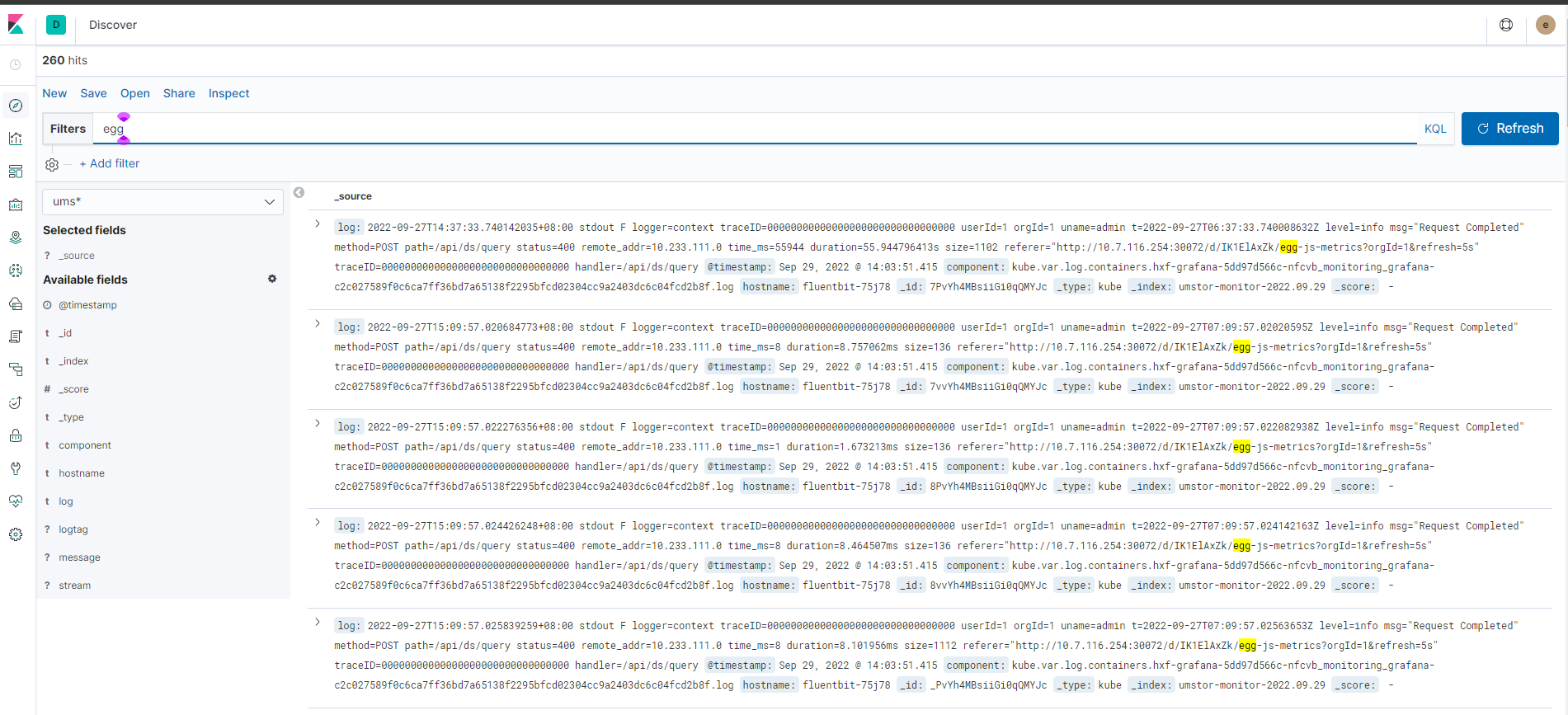

The figures show the result after collection.

Create an index pattern (under index management) in Kibana and the logs can be viewed.

III. prometheus-elasticsearch-exporter

1. Create the Deployment, es-exporter.yaml


```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: elasticsearch-exporter
  namespace: logging-system
  labels:
    app: elasticsearch-exporter
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch-exporter
  template:
    metadata:
      labels:
        app: elasticsearch-exporter
    spec:
      containers:
      - name: elasticsearch-exporter
        image: justwatch/elasticsearch_exporter:1.1.0
        resources:
          limits:
            cpu: 300m
          requests:
            cpu: 200m
        ports:
        - containerPort: 9114
          name: https
        command:
        - /bin/elasticsearch_exporter
        - --es.all
        - --web.telemetry-path=/_prometheus/metrics
        - --es.ssl-skip-verify
        - --es.uri=https://elastic:123456@elasticsearch-es-http:9200
        securityContext:
          capabilities:
            drop:
            - SETPCAP
            - MKNOD
            - AUDIT_WRITE
            - CHOWN
            - NET_RAW
            - DAC_OVERRIDE
            - FOWNER
            - FSETID
            - KILL
            - SETGID
            - SETUID
            - NET_BIND_SERVICE
            - SYS_CHROOT
            - SETFCAP
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /healthz
            port: 9114
          initialDelaySeconds: 30
          timeoutSeconds: 10
        readinessProbe:
          httpGet:
            path: /healthz
            port: 9114
          initialDelaySeconds: 10
          timeoutSeconds: 10
```

2. Create the Prometheus ServiceMonitor for Elasticsearch

es-serviceMonitor.yaml


```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    es-app: es-exporter
    release: hxf
  name: es-client-node
  namespace: logging-system
spec:
  endpoints:
  - bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
    interval: 30s
    honorLabels: true
    port: https
    path: /_prometheus/metrics
  namespaceSelector:
    matchNames:
    - logging-system
  jobLabel: k8s-app
  selector:
    matchLabels:
      app: elasticsearch-exporter
```

3. Create the Service for elasticsearch-exporter

es-endpoints.yaml


```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: elasticsearch-exporter
  name: elasticsearch-exporter
  namespace: logging-system
spec:
  ports:
  - name: https
    port: 9114
    protocol: TCP
    targetPort: 9114
  selector:
    app: elasticsearch-exporter
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}
```

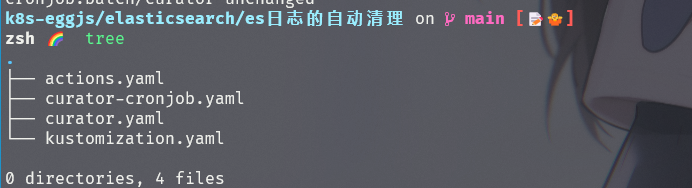

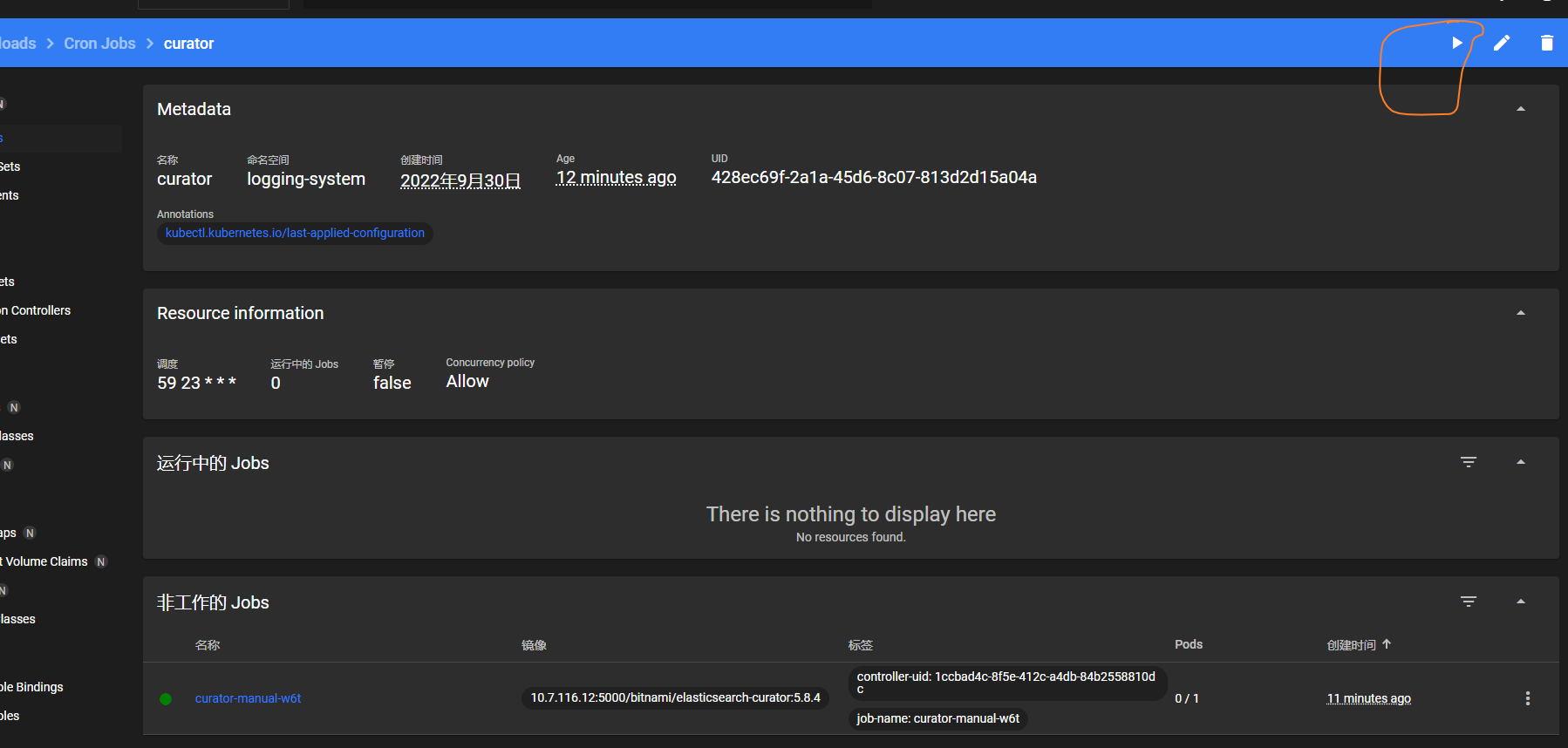

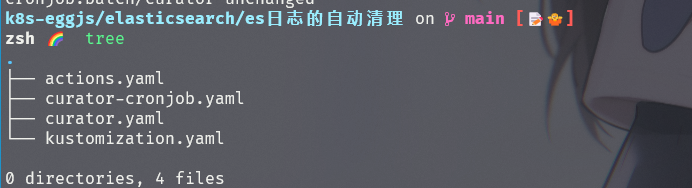

IV. Automatic cleanup of Elasticsearch logs

Indices older than one day are cleaned up. The file names are shown in the figure.

File: actions.yaml


```shell
cat <<EOF >./actions.yaml
actions:
  1:
    action: delete_indices
    description: >-
      Delete metric indices older than 1 day (based on index name), for
      .monitoring-es-6-
      .monitoring-kibana-6-
      umstor-os-
      umstor-sys-
      umstor-monitor-
      umstor-internal-
      security-auditlog-
      prefixed indices. Ignore the error if the filter does not result in an
      actionable list of indices (ignore_empty_list) and exit cleanly.
    options:
      continue_if_exception: False
      disable_action: False
      ignore_empty_list: True
    filters:
    - filtertype: pattern
      kind: regex
      value: '^(\.monitoring-(es|kibana)-6-|umstor-(os|sys|internal|kube|monitor)-|security-auditlog-).*$'
    - filtertype: age
      source: name
      direction: older
      timestring: '%Y.%m.%d'
      unit: days
      unit_count: 1
  2:
    action: close
    description: >-
      Close metric indices older than 1 day (based on index name), for
      .monitoring-es-6-
      .monitoring-kibana-6-
      umstor-os-
      umstor-sys-
      umstor-monitor-
      umstor-internal-
      security-auditlog-
      prefixed indices. Ignore the error if the filter does not result in an
      actionable list of indices (ignore_empty_list) and exit cleanly.
    options:
      continue_if_exception: True
      disable_action: False
      ignore_empty_list: True
    filters:
    - filtertype: pattern
      kind: regex
      value: '^(\.monitoring-(es|kibana)-6-|umstor-(os|sys|internal|kube|monitor)-|security-auditlog-).*$'
    - filtertype: age
      source: name
      direction: older
      timestring: '%Y.%m.%d'
      unit: days
      unit_count: 1
EOF
```
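Before letting Curator delete anything, it can help to check which index names the `pattern` filter would select. The sketch below runs the regex from actions.yaml through `grep -E` against a few made-up index names. Curator evaluates the pattern with Python's regex engine, so this is an approximation, although this particular expression uses only syntax both engines share.

```shell
#!/bin/sh
# Regex copied from the pattern filter in actions.yaml.
pattern='^(\.monitoring-(es|kibana)-6-|umstor-(os|sys|internal|kube|monitor)-|security-auditlog-).*$'
# Sample index names are hypothetical.
matched=$(printf '%s\n' \
  'umstor-monitor-2022.09.28' \
  '.monitoring-es-6-2022.09.28' \
  '.kibana_1' \
  'kube-2022.09.28' | grep -E "$pattern")
echo "indices selected by the pattern filter:"
printf '%s\n' "$matched"
```

Only the first two names are selected. The `.kibana_1` and `kube-2022.09.28` names fall outside the prefixes, so the age filter never sees them.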

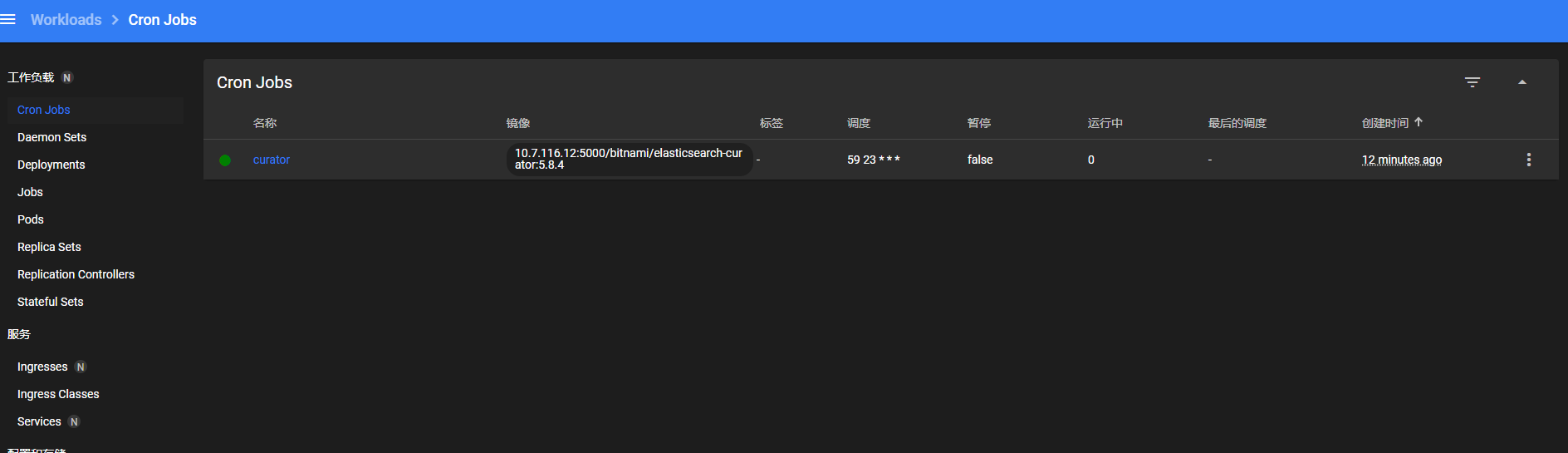

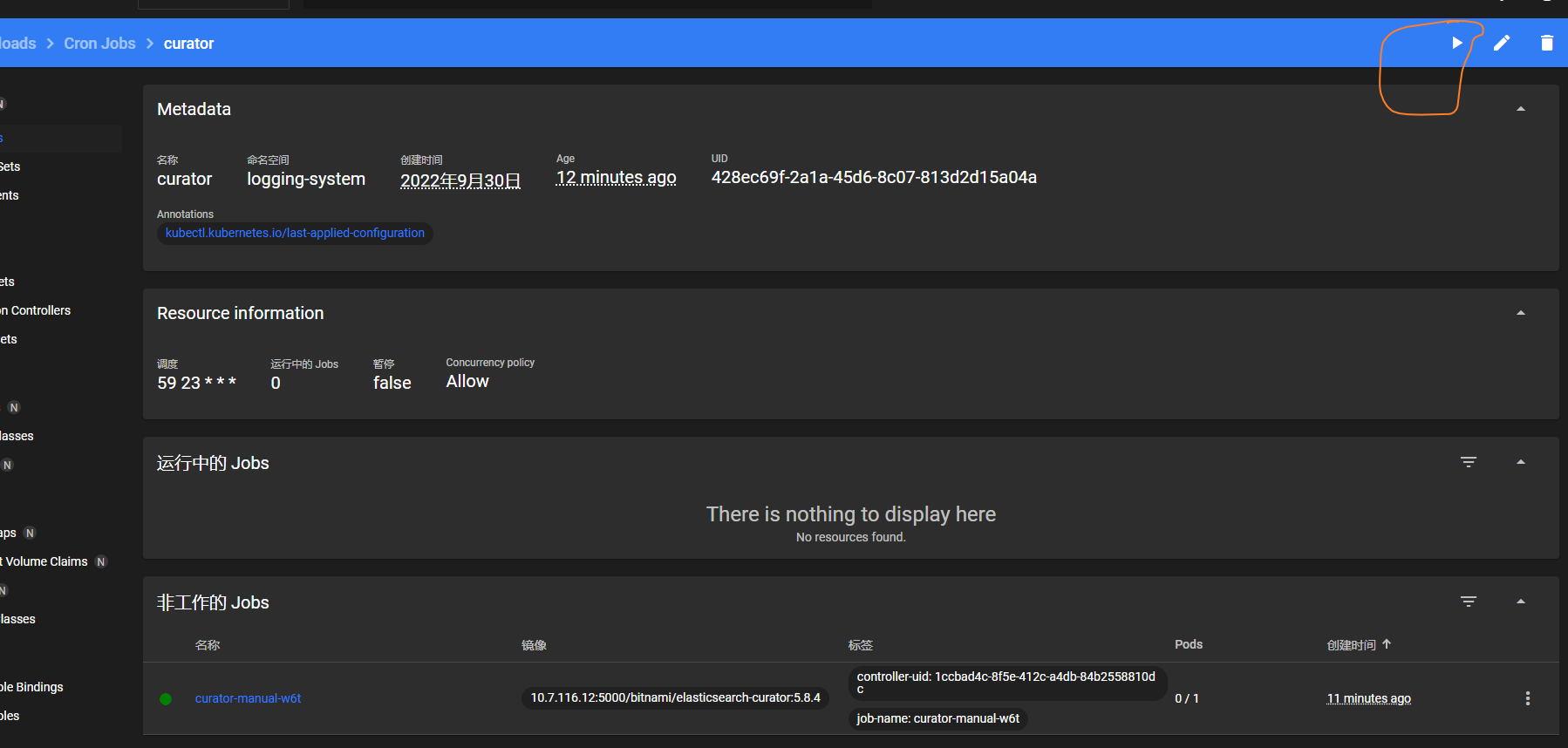

File: curator-cronjob.yaml


```shell
cat <<EOF >./curator-cronjob.yaml
# On Kubernetes 1.21 and later use batch/v1 here; batch/v1beta1 was removed in 1.25.
apiVersion: batch/v1beta1
kind: CronJob
metadata:
  name: curator
spec:
  schedule: "59 23 * * *"
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: curator
            image: 10.7.116.12:5000/bitnami/elasticsearch-curator:5.8.4
            command:
            - sh
            - -c
            - curator --config /etc/curator/curator.yaml /etc/curator/actions.yaml
            volumeMounts:
            - mountPath: /etc/curator/
              name: curator-config
              readOnly: true
            - mountPath: /var/log/curator
              name: curator-log
          restartPolicy: OnFailure
          volumes:
          - configMap:
              name: curator-config
            name: curator-config
          - hostPath:
              path: /var/log/curator
            name: curator-log
EOF
```
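The CronJob schedule `59 23 * * *` uses the standard five-field cron syntax, so the cleanup runs once a day at 23:59 (in the time zone used by the cluster's controller manager, which is often UTC). A small sketch that splits the expression into its fields; the variable names are illustrative only:

```shell
#!/bin/sh
# Schedule string taken from curator-cronjob.yaml.
schedule='59 23 * * *'
# A here-document is used so the '*' fields are not expanded as file globs.
read -r minute hour day_of_month month day_of_week <<EOF
$schedule
EOF
echo "minute=$minute hour=$hour day-of-month=$day_of_month month=$month day-of-week=$day_of_week"
```

Because the last three fields are all `*`, the job is not restricted to particular days or months.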

File: curator.yaml


```shell
cat <<EOF >./curator.yaml
client:
  hosts:
    - elasticsearch-es-http
  port: 9200
  url_prefix:
  use_ssl: True
  certificate:
  client_cert:
  client_key:
  ssl_no_validate: True
  http_auth: elastic:123456
  timeout: 30
  master_only: False
logging:
  loglevel: INFO
  logfile: /var/log/curator/curator.log
  logformat: default
  blacklist: []
EOF
```

File: kustomization.yaml


```shell
cat <<EOF >./kustomization.yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: logging-system
resources:
- curator-cronjob.yaml
generatorOptions:
  disableNameSuffixHash: true
configMapGenerator:
- files:
  - curator.yaml
  - actions.yaml
  name: curator-config
images:
- name: 10.7.116.12:5000/bitnami/elasticsearch-curator
  newTag: "5.8.4"
EOF
```

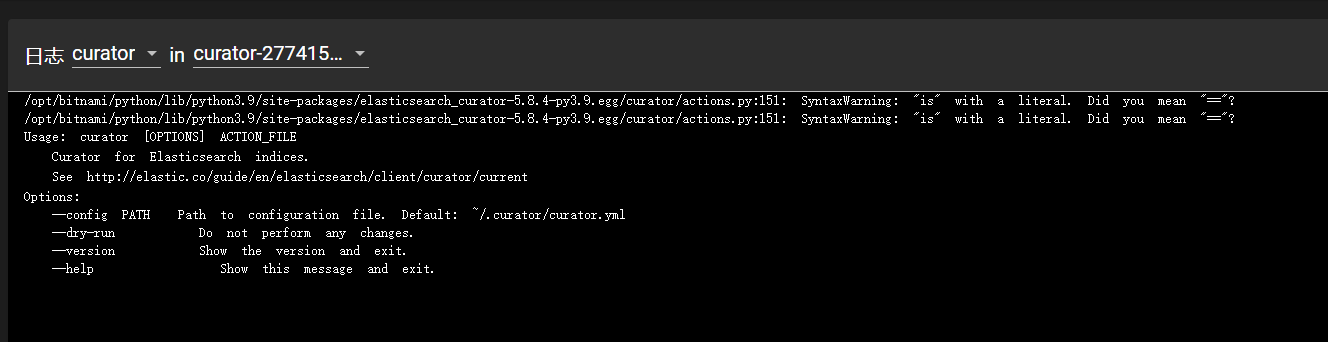

The cleanup rules are defined in actions.yaml.

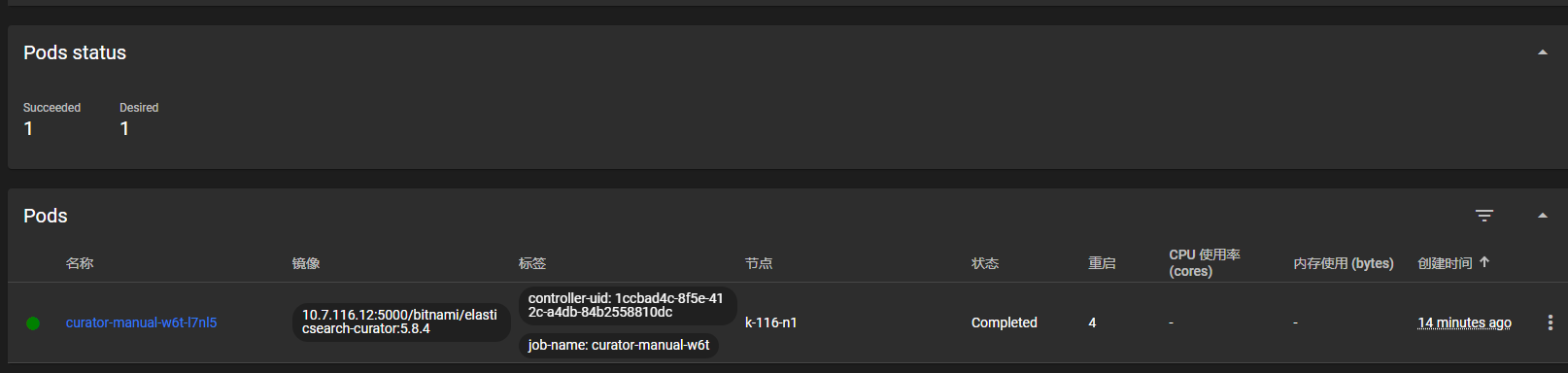

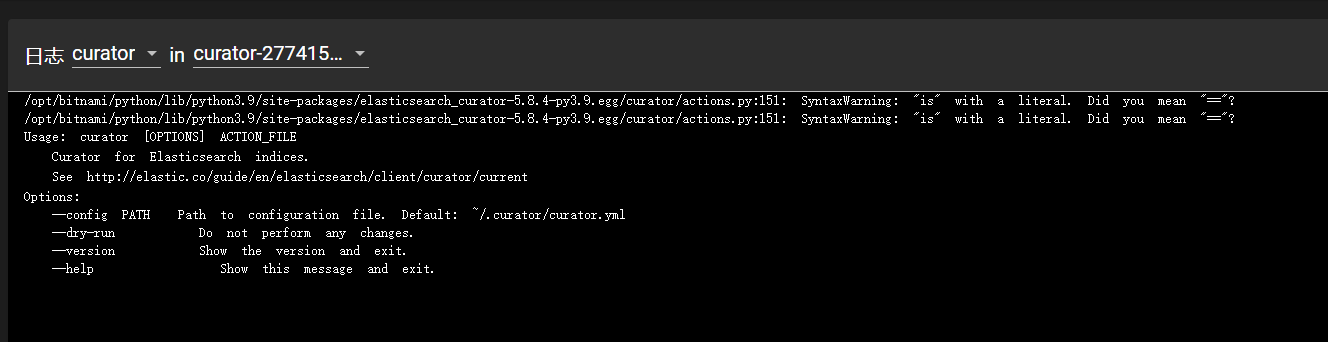

After running it, the log shows the message in the figure.

The log directory has to be created on the node:


```
[root@k-116-n2 ~]# mkdir -p /var/log/curator
[root@k-116-n2 ~]# chmod 775 /var/log/curator
```

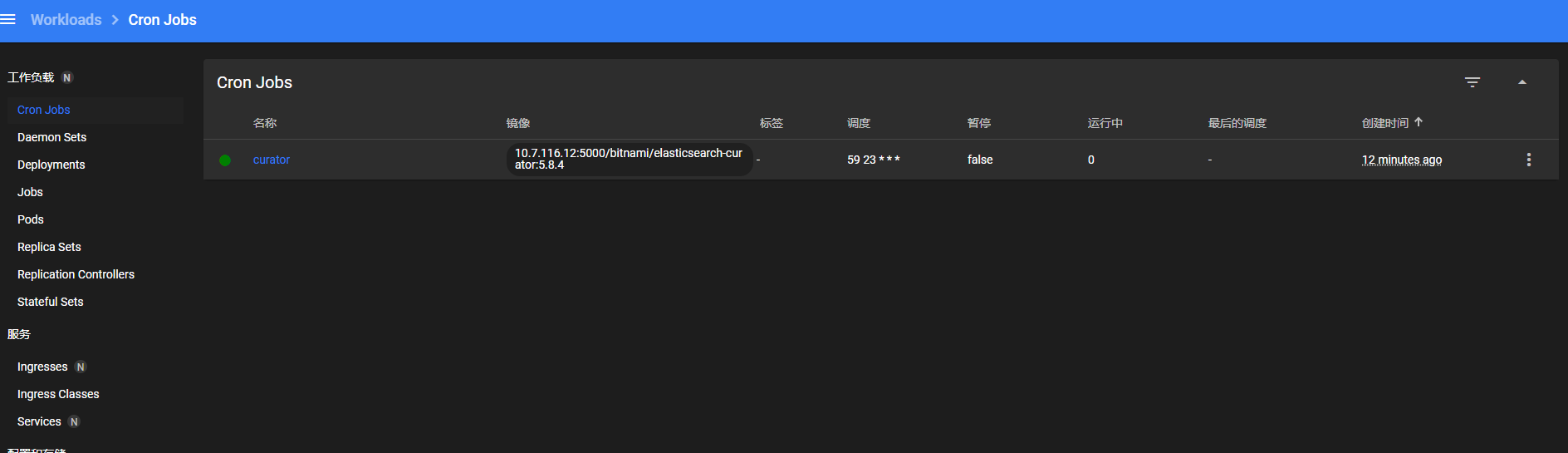

Click the Run button to trigger the job.

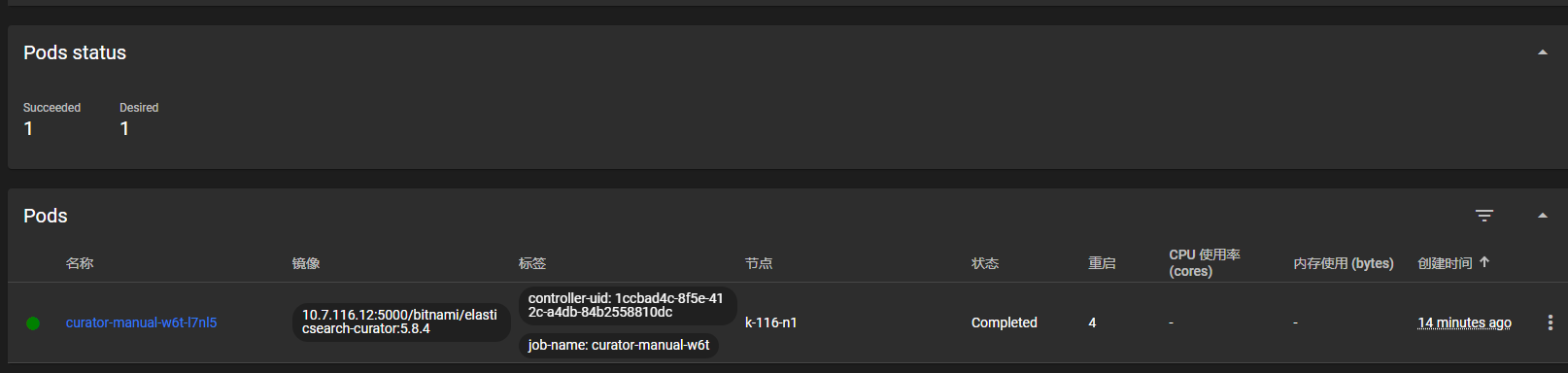

Check the logs for errors.

Reference:

https://blog.csdn.net/qq_22765745/article/details/109002106
